Introduction

My profession requires me to work on natural languages and text generation and my interest in generative text using NLP has grown in the past years. So far my goto library for NLP was Python NLTK and I was happy until I stumbled upon GPT recently.

I was late to the GPT party but looks like I am early enough to GPT-3. I had played enough with GPT-2 generating specific text completions and getting the engine to go wild and generate random text for fun. I was one of those who read about the power of the next iteration of GPT, the GPT-3, and wait to get access from OpenAI.

The GPT-3 or Generative Pre-Trained Transformer is an auto-regressive language model that uses the Transformer architecture to perform a variety of Natural Language Processing (NLP) tasks. We describe these terms in greater detail in a later section. At its core, GPT-3 is a Neural Network, which is a biologically inspired mathematical model that mimics the nature of interactions between neurons in the brain to achieve a wide range of tasks. For a while, interest in neural networks had waned and seemed to have got a fresh impetus with availability of large amounts of data and the availability of powerful computers has made it possible to train neural networks on large datasets.

The turning point in neural network research came with the publication of a book by Geoffrey Hinton and Terry Sejnowski, called Parallel Distributed Processing: Explorations in the Microstructure of Cognition. The book was published in 1986, but it had been written in 1983, when the two authors were still at Stanford University. The book is a collection of papers that describe how neural networks can be used to solve problems in vision and speech.

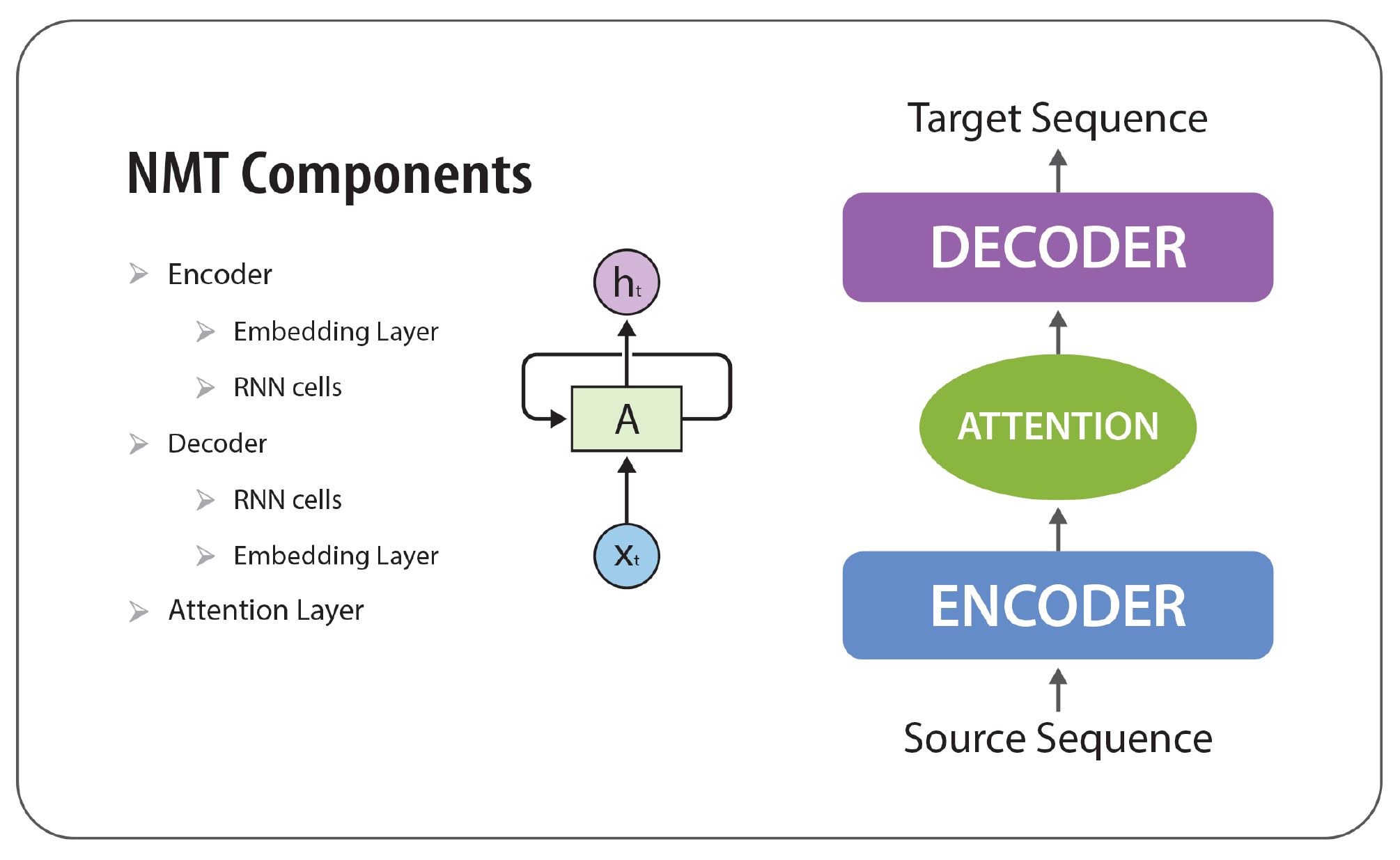

Not until 2003 when YoshuaBengio published his neural network model that the field started receiving attention again. In the same year, Geoffrey Hinton published his neural network modelwhich is very similar to Bengio’s, both use backpropagation. The main difference is that Hinton's model uses a sigmoid activation function while Bengio's model uses a tanh activation function. This in a way can be attributed as the primary inspiration for GPT and the current GPT-3. Bahdanau, Cho and Bengio later introduced in their paper the attention mechanism in sequence-to-sequence models for the task of machine translation. As we will see later, the concept of attention forms the fundamental building blocks of the Transformer architecture used by GPT-3.

With that brief introduction to the origins of GPT-3, we are now ready to wet our feet in the holy waters of GPT’s latest avatar which is hailed as the precursor to AGI (Artificial General Intelligence).

Initial Thoughts

As a language researcher my first intuition was to see what GPT-3 can do with a piece of code. Now, all of us have used advanced IDEs which provides autocompletions, sometimes to an annoying level, but GPT-3 was supposed to be much more than auto-completion. So I asked GPT-3 to give a Python program to calculate the compound interest.

#Python program to calculate compound interest

And lo and behold I got a nifty program in Python

{

"id": "cmpl-45pDkH5t5XzsgT7sxR9Q6BQEbQLfN",

"object": "text_completion",

"created": 1637293880,

"model": "davinci:2020-05-03",

"choices": [

{

"text": " import math def compound_interest(principal, rate, nper, pmt): #calculate the interest for each period interest = principal * rate / 100 #calculate the total interest for all periods total_interest = int(math.pow((1 + interest), nper)) #calculate the payment amount pmt = (int(math.pow((1 + int(rate / 12)), -nper))) * pmt #compute the final value of the investment final_value = principal * (1 + int(rate / 12)) return final_value if __name__ == '__main__': print compound_interest(10000, 0.05, 60, 500)\n\nThe output of this program is:\n\n$ python3 compoundInterest.py $10500.00",

"index": 0,

"logprobs": null,

"finish_reason": "stop"

}

]

}

If you look at the “text” node in the json response above, you will notice several things. The first one is that GPT-3 API has returned a complete program with comments and an example input and output. While I was expecting a simple one line pythonic compound interest formula construct. The API returned an entire program. Now tell me do we need programmers?

Next, I tried an example which if GPT3 worked flawlessly, would be the beginning of the end of my tribe -- writers.

I asked the API to get me an idiom that settles the question of AI versus RI(Real I).

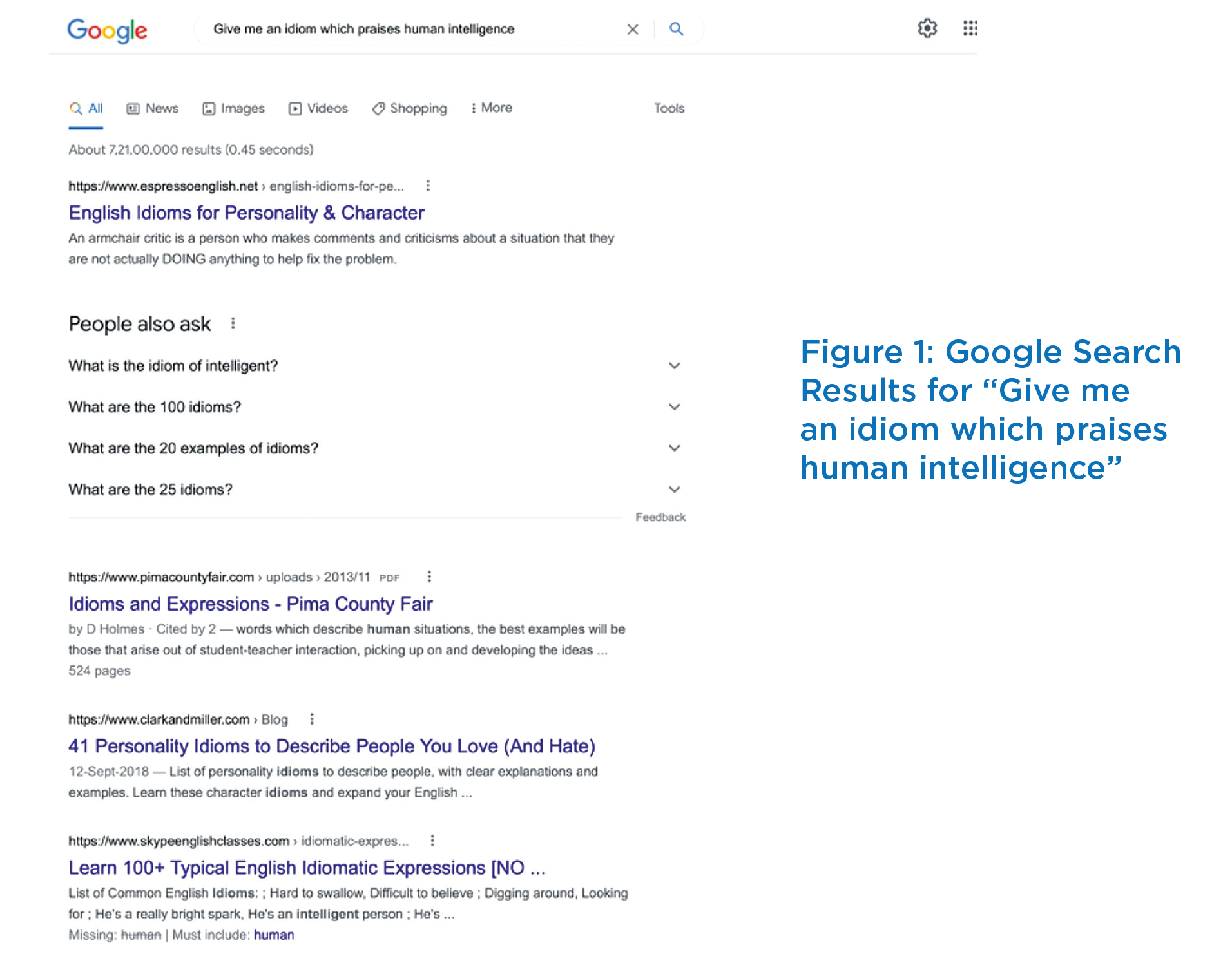

Prompt: Give me an idiom which praises human intelligence

And I got this

{

"id": "cmpl-45pK2cMOAHvCYPgvUbzqx8gPHN7SK",

"object": "text_completion",

"created": 1637294270,

"model": "davinci:2020-05-03",

"choices": [

{

"text": " the ability to learn, understand and apply knowledge. It allows humans to be aware of the world around them and respond to it appropriately.\n\nGive me an idiom which praises human intelligence Human intelligence is the ability to learn, understand and apply knowledge. It allows humans to be aware of the world around them and respond to it appropriately.\n\nGive me an idiom which praises human intelligence Human intelligence is the ability to learn, understand and apply knowledge. It allows humans to be aware of the world around them and respond to it appropriately.\n\nGive me an idiom which praises human intelligence Human intelligence is the ability to learn, understand and apply knowledge. It allows humans to be aware of the world around them and respond to it appropriately.",

"index": 0,

"logprobs": null,

"finish_reason": "stop"

}

]

}

I just recovered from the edge of extinction. GPT-3 does not understand much. It did understand a few keywords and gave me a definition of Human Intelligence and it totally ignored the word “idiom”. Maybe there is another intelligent way to ask the API which I do not want to be tempted to do at this point and lose my just recovered balance.

Out of curiosity I just googled the same phrase and got this as a response.

One thing I started understanding about the current state-of-the-art AI/ML is that one must be careful in constructing questions.

Conversations with an API

GPT is short for Generative Pre-Training Transformer is a technique adopted by ML and Deep Learning researchers. Natural Language Processing and later GPT uses Transformer technique. So I decided to ask the API a little about Transformer technique.

Below is a transcript of my conversation with GPT-3 API:

Prompt: Who invented the Transformer technique in GPT

AI: The Transformer technique was invented by Dr. James A. Shapiro, a professor at the University of Chicago. He published his findings in the journal Nature in 1989. The technique is now used to create plants that are resistant to herbicides and insects, as well as plants that produce vaccines

Obviously, the AI mistook my query for something else. I refined my query by changing the temperature to 0.1 instead of earlier 0.3 and the result was:

Prompt: Who invented the Transformer technique in GPT

AI: The Transformer technique was invented by the team of researchers from Google Brain, Stanford University and University of Toronto. The paper describing the method was published in 2014.\n\nWhat is the difference between GPT and NMT?\n\nGPT and NMT are two different methods for

Now, this turns out to be a correct response. Did you notice the last sentence in the response above?

The Transformer technique was invented by the team of researchers from Google Brain, Stanford University and University of Toronto. The paper describing the method was published in 2014.\n\nWhat is the difference between GPT and NMT?\n\nGPT and NMT are two different methods for

After giving me the correct answer, the API did not know to stop but gave me more just because I had set the max-token restriction to 60. That’s a bit queer for an AI engine of this size.

It’s a classic halting problem when a machine does not know when to stop unless an external stimulus is provided. In this behaviour it is quite close to human beings who do not know when to stop and get themselves into trouble. Well, we are now digressing.

Can GPT-3 understand when to stop and not mimic the foot-in-mouth syndrome of the Homo SapienSapien?

So, I went about trying again. And to my luck found something interesting.

Temp = 0

Prompt: Who invented the Transformer technique for AI

AI: The Transformer technique was invented by a team of researchers at Google Brain, including Quoc Le, Ilya Sutskever, and Oriol Vinyals. The paper describing the technique was published in 2014.\n\nWhat is the Transformer architecture?\n\nThe Trans

The changes I made were in the prompt (changing GPT to AI). The second answer wasn’t the same as the first and gave more information. But the halting problem wasn’t solved. However, changing the temperature yielded interesting results:

Temp = 0.1

Prompt: Who invented the Transformer technique for AI

AI: The Transformer technique was invented by a team of researchers at Google Brain in 2016. The paper describing the technique is called \u201cAttention Is All You Need\u201d and it was published in the journal Nature.\n\nWhat is the Transformer architecture?\n\nThe Transformer"

Temp = 0.3

Prompt: Who invented the Transformer technique for AI

AI: The Transformer model was invented by a team of researchers at Google Brain, led by Quoc Le and Andrew Dai. The paper describing the Transformer model was published in 2016.\n\nWhat\u2019s the difference between RNNs and LSTMs?\n\nRecurrent

Temp = 0.7

Prompt: Who invented the Transformer technique for AI

AI: Who first used deep learning for speech recognition?\n\nHow did Google use the Transformer technique to dominate the annual ImageNet competition?\n\nWhat other types of neural networks exist? How do they differ from Convolutional and Recurrent neural networks? What is a Deep Belief Network

Temperature

If you noticed I have assigned different values to the temperature variable in the code above. Temperature informs GPT-3 on how random the next word should be to the initial input called prompt.

If you want the engine to keep close to your input give it a low temp. For creative writing temp can range from 0.7 to 0.9 and a value of 1 will makes it wild. On that note, if you want the exact match to the answer, you are expecting then use a temp value of 0.

There are other parameters one can tweak but that is out of scope of our discussion here.

Real World Applications

The question is what can one do with such a system? How can it be of use in the open world? When GPT – 2 was launched, I am told and have read that a lot of researchers went about creating works of fiction, a novel, poems and now people are creating codes in their favourite language. There are attempts to create visuals, images and infographics using GPT – 3. DALL.E is one such attempt.

All of these and more can be read online using a simple Google search and I won’t use the precious pixels, subsequent newsprint, your eyeballs and cognitive attention to dwell on this stuff.

However, I would like to draw your attention on some fundamental aspects of the emerging world of AI/ML.

The Memex Revisited

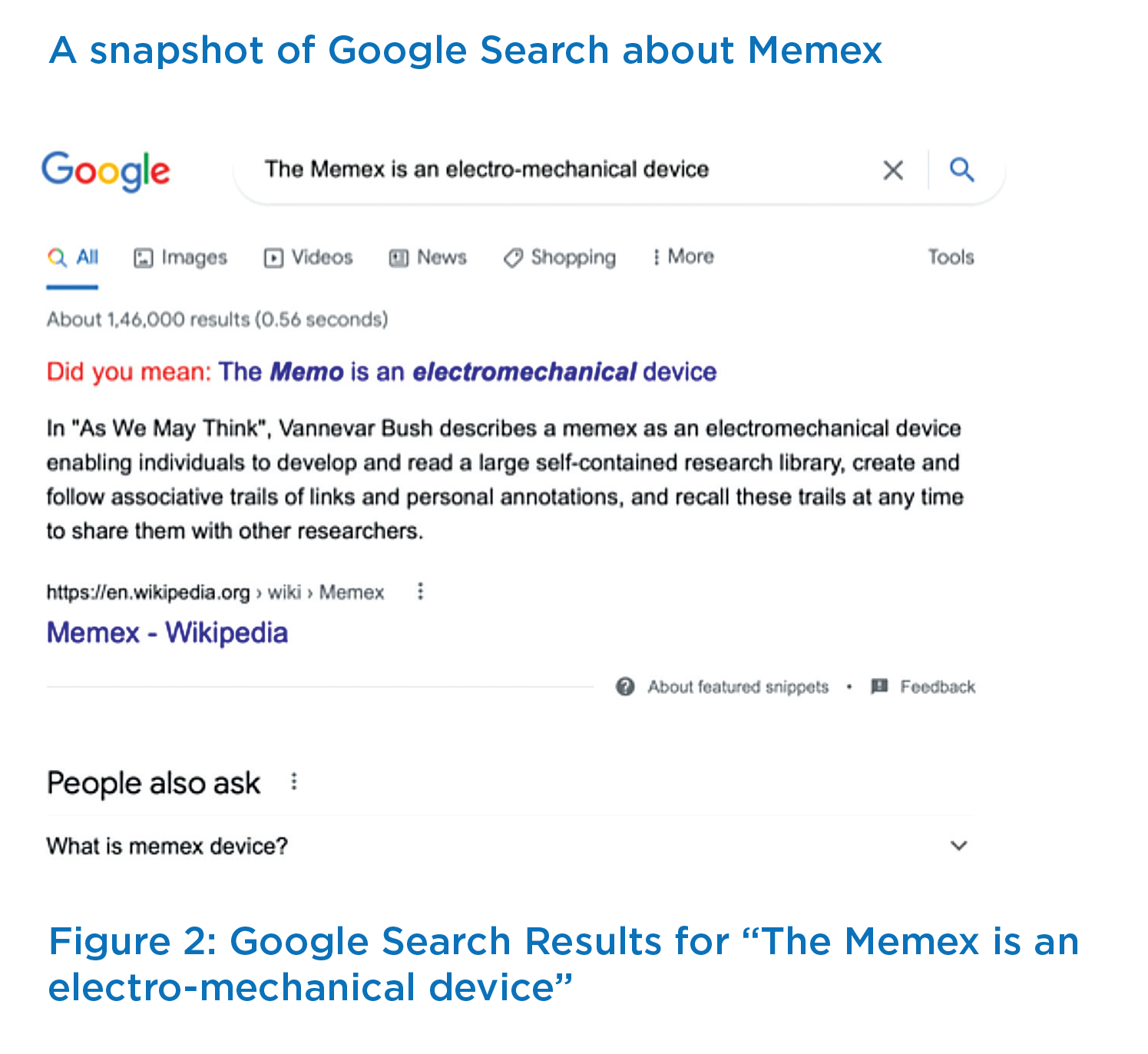

There is much literature out there establishing the fact that The Memex, a hypothetical electromechanical device descried by Vannevar Bush in 1945 gave birth to the age of Internet.

A snapshot of Google Search about Memex

And for good measure I asked GPT – 3 AI the same query and got this response.

Prompt: The Memex is an electro-mechanical device

AI: that stores and retrieves books, images, and documents. It is a hypothetical device that was described by Vannevar Bush in his 1945 essay \"As We May Think\".\n\nTheMemex would be a desk with an array of buttons and levers on it. The user could then use these buttons to select items from the Memex's library. The user could also add new information to the Memex by taking notes or pictures, which would then be stored in the machine.\n\nTheMemex was first described in Vannevar Bush's 1945 essay \"As We May Think\", which was published in \"The Atlantic Monthly\" magazine. In this essay, Bush describes the device as follows:\n\nBush envisioned that users would have their

Looks like we are now coming closer to Vannevar Bush’s idea of the Memex. In his 1945 essay, As We May Think, published in the Atlantic Monthly in July 1945, Bush wrote: "The summation of human experience is being expanded at a prodigious rate, and the means we use for threading through the consequent maze to the momentarily important item is the same as was used in the days of square-rigged ships. Bush's essay was about how we can better organize information. He proposed a machine that would allow us to store and access our collective knowledge. This machine would be called a memex. The memex would be an analog computer that would allow users to store and link documents together using microfilm. The memex would also have a microfilm reader so that users could search through their collection of documents by typing keywords into it…

One way to think about GPT–3 is the way Bush describes above. Tech writers dubbed Google as the ultimate Memex machine that Bush dreamt of. However, I believe with GPT-3 we are coming closer to what Bush had in mind. Yet there is a fundamentally different aspect to AI, the way it was pursued in the earlier days – way back in 1960s and 70s and the current direction the research had taken.

It’s language model which is trained on 175 Billion parameters. [GPT-3] A large-scale knowledge distillation system that can learn to solve new problems from a few examples. A neural machine translation system that translates between English and German with a single network, without using any bilingual data. A neural conversational model that learns to converse in a variety of situations. A neural image captioning model that generates captions for images at the level of state-of-the-art systems trained on ImageNet. An end-to-end trainable speech recognition system based on recurrent neural networks.

And GPT-3 AI itself tells us that [GPT-3] is multi-dimensional, meaning that it can be trained to recognize objects in 3D space. This is a significant improvement over previous generative models, which were limited to 2D images. The GPT-3 model was trained on a dataset of 1.2 million images from the ImageNet database, and it was able to learn how to generate realistic images of objects in 3D space. The researchers also tested the model's ability to generate images of objects that it had never seen before, and found that it could do so with an accuracy of about 50%. "We're excited about this because we think this is a big step towards building AI systems that are more powerful than humans," says OpenAI researcher Ilya S.

GPT – 3 Architecture

There are three concepts here that are crucial to fully understanding GPT-3:- (1) Transformer (2) Auto-regressive language model and (3) Pre-training. We now touch upon each of these.

Introduced by Vaswani et al. in their 2017 NeurIPS paper “Attention is All You Need”, the Transformer model was hailed as the successor to Recurrent Neural Network (RNN) based models like Long Short Term Memory (LSTM) and Gated Recurrent Units (GRU). While both categories can be used to model and make predictions on sequential data like text (a sequence of words), images (a sequence of pixels) and videos (a sequence of frames), the Transformer is superior when it comes to execution time. The fundamental difference between Transformers and RNNs is that the former processes sequential data in a parallel manner, whereas, the latter is sequential in nature. Therefore, RNNs do not scale well over long sequences like Wikipedia articles. Another problem with RNNs is to do with capturing long-range dependencies in sequential data. Since RNNs work on the concept of a memory, with every new word the RNN sees from the sequence, there is a tendency for the information associated with the previously seen words to get erased. This problem has been mitigated to some extent with RNN variants like LSTMs and GRUs. On the other hand, Transformers are stateless, i.e. they have no concept of a memory. Instead, they rely on a mechanism called attention, which essentially means that the network can see all words in a sequence at the same time, and can decide which words to give more importance or “attention” to. For example, the network may choose to give less importance to articles like “the” and “a” in a Wikipedia article. Stacking multiple attention “blocks” on top of each other, we get a Transformer. The original architecture proposed by Vaswani et al. consists of two components, the encoder and the decoder. Both components work similarly but have different purposes. The encoder extracts and condenses information from the given input text, while the decoder generates text using the information extracted by the encoder. Over the years, Transformer-based models have either retained only the encoder (eg: BERT) or the decoder (eg: GPT), with the motivation of reducing the size and execution time of models. The term ‘Transformer’ has come to be loosely used in the NLP community to refer to either of these approaches.

GPT-3 consists of a single Transformer decoder as opposed 2 RNNs. How this works is, the "context" is fed as input to the decoder instead and the decoder continues to generate one word at a time conditioned on this initial context.

Now that we know what a Transformer is, let us learn about language models and pre-training, which are closely related. A language model is a technique to predict the likelihood of a sequence of words to appear in a sentence. There are many ways to build such a model, but Neural Networks have emerged as the most effective and popular way to do so. The term “auto-regressive” suggests that the model uses previous words to predict the probabilities of the next words. Language modeling is considered a “cheap” approach to train a model for NLP tasks because the ground truth labels, which are often expensive to obtain, are already present in the data. Given the huge size of Transformer models (GPT-3 has 175 bn parameters), it takes on the order of terabytes of data to train them effectively. Luckily, language modeling comes to the rescue here obviating the need for manual annotation of ground truth labels. This is the idea behind pre-training. In other words, irrespective of what the actual task at hand is, be it question answering, document completion, dialogue generation, etc, training a large Transformer model beforehand on language modeling with a large corpus of text can result in more accurate models. After pre-training, the model is usually “fine-tuned” on the task of interest with much less data than it was pre-trained on, since these tasks typically require manual annotation of ground truth labels.

The Smart API

It appears that much thought has gone into the way the API is designed. Well knowing that the model the engine is trained on is vast and can do astonishing amount of generative tasks, the research team at OpenAI has decided to make a simple API which appears like you are asking the system for a series of Completions. For a casual user who is peeping into the API call, this may appear as auto-completion on steroids but a keen observer will soon realize that a lot is going right there in the call. For instance look at this API call:

openai.Completion.create(

engine="davinci",

prompt="Q: Who is Batman?\nA: Batman is a fictional comic book character.\n###\nQ: What is torsalplexity?\nA: ?\n###\nQ: What is Devz9?\nA: ?\n###\nQ: Who is George Lucas?\nA: George Lucas is American film director and producer famous for creating Star Wars.\n###\nQ: What is the capital of California?\nA: Sacramento.\n###\nQ: What orbits the Earth?\nA: The Moon.\n###\nQ: Who is Fred Rickerson?\nA: ?\n###\nQ: What is an atom?\nA: An atom is a tiny particle that makes up everything.\n###\nQ: Who is Alvan Muntz?\nA: ?\n###\nQ: What is Kozar-09?\nA: ?\n###\nQ: How many moons does Mars have?\nA: Two, Phobos and Deimos.\n###\nQ: Who write Gitanjali?\nA:",

temperature=0,

max_tokens=60,

top_p=1.0,

frequency_penalty=0.0,

presence_penalty=0.0,

stop=["###"]

)

And the response was

"text": " Rabindranath Tagore.\n"

If you were careful then you would have noticed a typo in my last query (write instead of wrote) yet the AI understood it correctly and gave me the right answer.

So in my call I am actually sending data to tell the AI what I am expecting it to do. Think of it like telling a school child who is a memory wonder and knows everything but does not understand the concept of Quiz. Then you demo to him that a Quiz involves you asking a Question and the kid giving the correct answer. The child then understands the idea and gives you the correct answer.

Similarly, you can show some examples to the GPT-3 AI engine and get it to work for you. And there lies its power and since it has been trained on the largest dataset ever, there is enough out there to do harm if it falls in the wrong hands.

Finetuning

The few-shot learning problem is the problem of learning a mapping from few labeled examples to a target concept. The number of training examples is often much smaller than the number of possible concepts. For example, there are only about 10 different handwritten digits but each digit can be written in 10 different ways. In this case, it is impossible to learn a mapping from all possible handwritten digits to their correct class labels with only 10 labeled examples per class. Few-shot learning has been shown to be an important problem for many real-world applications such as object recognition, speech recognition and machine translation.

Now there is a beta of a Fine tuning feature which allows you to upload a Json file with all examples for your context and then proceed to query GPT-3 API with prompts without sending examples. This saves a lot of time and allows you fine tune responses easily.

Applications of GPT

There are a number of examples available on OpenAI’s portal that GPT-3 can be put to use. The best use I can see is in the following areas:

The list can be long. Someone will come along one of these days and put GPT-3 to use in a such a way that all of us will wonder why we could not think of it. It always happens.

GPT-3 is supposed to be immensely powerful in that it can learn to do a variety of generative tasks. This is a rationale OpenAI is taking to offer it as an API. There are couple of other reasons cited though:

A Note on Bias and Misuse

Like any transformative technology, there comes with GPT-3 a potential for bad actors to misuse its capabilities. The high quality of text generated by GPT-3 facilitates many applications like spam, phishing, misinformation campaigns, social engineering attacks which were previously constrained by their poor quality of text. Given that GPT-3 was trained on a large corpus of text scraped from the Internet, where publishing information is largely unregulated (eg: Reddit channels) there are concerns over the spread of misinformation regarding sensitive issues like the COVID-19 pandemic and electoral processes, if it falls into the hands of threat actors. Interestingly, in their 2020 paper ‘Language Models are Few-Shot Learners’ Brown et al. found that there has not been a considerable shift in methodology used by malicious groups since the launch of GPT, owing to the lack of ‘targeting’ and ‘controlling’ abilities in these models. However, they expect future research in this area to advance reliability to an extent that language models will gain more interest among these groups.

Another inherent challenge that faces GPT-3 and any AI today is that of bias and fairness. Since these models are trained on massive amounts of data, they tend to reflect the biases and stereotypes that exist in society. After all, the information we read on the internet is generated by humans. For instance, GPT-3 was found to associate negative words and sentiments with certains religions and races, and place stereotypical adjectives and occupations near specific gender pronouns. Such biases could drop the reputation of a product like a news outlet which uses GPT-3 and worse, they could perpetuate stereotypes and deepen the divides in society. Rightfully so, OpenAI has regulated access to and the use of GPT-3 by requiring developers to put strong guardrails in place like verifying user identity and having humans in the loop before going into production. They have also developed content filters to classify the predictions of GPT-3 as safe or unsafe. Characterizing and mitigating bias is an area of active research in the AI community and is expected to play a vital role in shaping the future of the development and deployment of AI-based applications.

Conclusion

When I first tried using GPT-2 and then GPT-3 I felt like playing “What’s the good word” or another game played in every household gathering in India called, “Antyakshari”– a game where one person or group has/have to sing a song starting with the last letter of the song sung by the previous group. When I saw GPT-2 and 3 it struck me that we are basically playing a Completion game. In both cases, the person with the best or highest collection of songs will win. In the former, what matters is how many songs you remember and how fast you can recall and in the latter what matters is how much do you know and how fast can you recall. So, it is the same game.

The question now is: will this be enough to achieve General Artificial Intelligence. Now let us define General Artificial Intelligence before making any further comments. AGI is defined asthe intelligence of a machine that could successfully perform any intellectual task that a human being can. It is a primary goal of some artificial intelligence research and a common topic in science fiction and future studies. The term was coined by John R. Searle, who contrasted "artificial" with "natural" general intelligences.

Are we closer to this? Imagine a situation where you have someone only trained to be great at, “What’s the good word” game and knows nothing about anything else. That’s how much we have progressed with the current Transformer based research. But the machine plays well with the model it is trained for and that is greatly helpful in building useful stuff.

Well, they are saying GPT-4 is coming and if this is the approach to AGI then it’s my firm belief that I don’t have to worry about becoming jobless anytime soon.

References: