To understand the post-industrial world of the millennials and the challenges and opportunities it presents is yet another chapter in the evolution of Homo sapiens. It will force humanity to reassess the meaning of life, its place and significance in the Universe, and above all its ability to survive in a world that includes its own creative creation – the super-intelligent, human-machine hybrid – the humanoid. The role of natural humans and humanity’s faith in spirituality if humanoids take charge will undergo a sea-change. The millennials’ ability to adapt to the new world by competing against the humanoids will face severe limitations and may even lead to Homo sapiens becoming an endangered species within a century. A biological evolution of intelligent life is waiting to happen, triggered by the Homo sapiens’ curiosity-driven quest to understand the Universe within a rational, axiomatized framework.

Keywords: Artificial intelligence, post-industrial economy, millennials, rationalism.

Recent advances in artificial intelligence (AI) and biotechnology are nothing short of sensational in terms of the conceptual barriers they have overcome. In the last few years they have garnered a list of achievements and synergistic integration that clearly indicate that Homo sapiens are in for an unprecedented upheaval in their lives–the loss of their vaunted intellectual supremacy on Earth and the rising role of humanoids in human affairs. This change will likely happen within a century if Kurzweil’s predictions about the future of AI come true (given his track record, they most likely will). Kurzweil, the author of The Singularity is Near,1 predicts: “By 2029, computers will have human-level intelligence.”2 He also says the future will provide opportunities for unparalleled human-machine synthesis. In a communication to Futurism, Kurzweil said:

2029 is the consistent date I have predicted for when an AI will pass a valid Turing test and therefore achieve human levels of intelligence. I have set the date 2045 for the ‘Singularity’ which is when we will multiply our effective intelligence a billion-fold by merging with the intelligence we have created.3

To this we add an observation by Richard Ogle in his book, Smart World:

[I]n making sense of the world, acting intelligently, and solving problems creatively, we do not rely solely on our mind’s internal resources. Instead, we constantly have recourse to a vast array of culturally and socially embodied idea-spaces that populate the extended mind. These spaces … are rich with embedded intelligence that we have progressively offloaded into our physical, social, and cultural environment for the sake of simplifying the burden on our own minds of rendering the world intelligible. Sometimes the space of ideas thinks for us.4

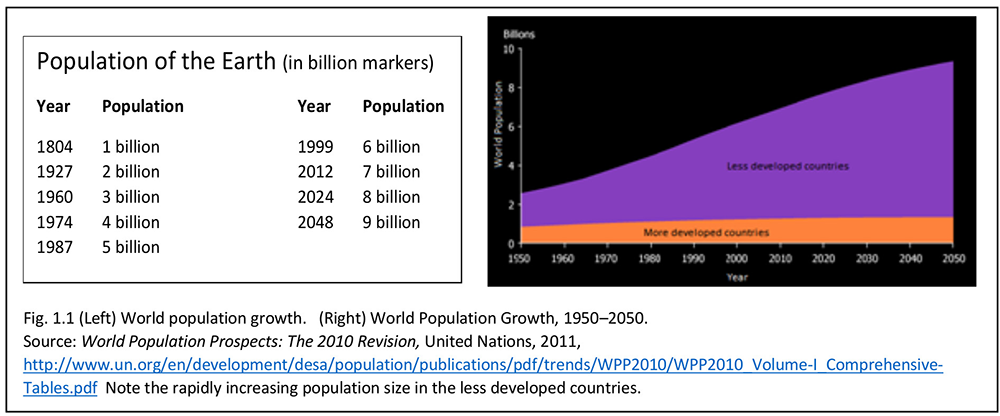

This smart world also faces unprecedented demographic changes due to variations in mortality, life expectancy, and a youthful population in countries where fertility is high. Overcrowding on Earth is a recent phenomenon. In the next three or four decades, the overall population of the more developed countries is likely to stagnate at about 1.3 billion (see Fig. 1.1). Their population is ageing and would decline but for migration. The populations of Germany, Italy, Japan, and several states of the former Soviet Union that broke away are also expected to decline by 2050.5 The world’s flexibility to cope with such unprecedented socio-economic changes is untested. Hence the millennials are expected to face unprecedented challenges and novel opportunities in the future. It will force humanity to reassess the meaning of life, its place and significance in the Universe, and above all its ability to survive in a world that includes its own creative creation – the super-intelligent, human-machine hybrid – the humanoid. The role of natural humans (the Homo sapiens) and humanity’s faith in spirituality if humanoids take charge will undergo a sea-change. Humanity’s ability to adapt to the new world by competing against humanoids will be severely tested and may even lead to the Homo sapiens becoming an endangered species within a century. A biological evolution of intelligent life is waiting to happen, triggered by the Homo sapiens’ quest to understand the Universe not according to the scriptures but according to science.

Life on Earth began some 3.8 billion years ago with single-celled prokaryotic cells, such as bacteria, evolving to multi-cellular life over a billion years. It is only in the last 570 million years that life forms we are familiar with began to evolve, starting with arthropods, followed by fish 530 million years ago (Mya), land plants 475 Mya, and forests 385 Mya. Mammals evolved around 200 Mya, and Homo sapiens (the species to which we humans belong, which evolved from Homo erectus)6 arose a mere 300,000 years ago.7 Up until 2.4 billion years ago, there was no oxygen in the air. There is still much to learn about the Homo sapiens.8 Their origin is vastly different from what various religions tell us. They were not made by God in His own image; they evolved to their present image and they are still evolving in unknown ways. One day, the Homo sapiens will become the ancestor species of one or more species. No religion has ever alluded to that.

On the biological front, Darwin’s theory9 of evolution of life was a remarkable eye-opener. He posited that all life is related and that it descended from a common ancestor: the birds and the bananas, the fishes and the flowers, the animals and the Homo sapiens, etc. It presumes life developed from non-life and that complex creatures evolve from less complex ancestors naturally and over time through a random process of adaptation via “descent with modification” in which random genetic mutations occur within an organism’s genetic code; the beneficial mutations that aid an organism to survive are thus passed on to the next generation while the weaker organisms die and are eliminated from breeding–a process known as “natural selection” or survival of the fittest in a given environment. Over time, enough beneficial mutations accumulate to trigger a phase transition and an entirely different organism (not just a variation of the original) comes into existence. The supporting evidence for Darwin’s theory of evolution comes from morphological similarity among organisms (suggesting shared descent), and that living species are similar to recent related fossils. The fossil record is good and large enough for us to see relatives of clearly different species in it.10
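The selection step described above, in which a beneficial mutation spreads because its carriers out-reproduce their peers, can be illustrated with a toy calculation. The sketch below is a textbook-style simplification and not a model taken from the sources cited here: it assumes a single heritable variant, asexual inheritance, a very large population, and a fixed reproductive advantage `s`. The function names and the parameter values are hypothetical, chosen only for illustration.

```python
def next_frequency(p, s):
    """Frequency of a beneficial variant after one generation of selection.

    p: current frequency of the variant in the population (between 0 and 1)
    s: assumed reproductive advantage; carriers leave (1 + s) offspring
       for every 1 offspring left by non-carriers (illustrative parameter)
    """
    mean_fitness = p * (1.0 + s) + (1.0 - p)
    return p * (1.0 + s) / mean_fitness


def generations_to_reach(p0, s, target):
    """Count generations until the variant's frequency reaches `target`."""
    if not (0.0 < p0 < 1.0 and 0.0 < target < 1.0 and s > 0.0):
        raise ValueError("need 0 < p0 < 1, 0 < target < 1 and s > 0")
    p, generations = p0, 0
    while p < target:
        p = next_frequency(p, s)
        generations += 1
    return generations


if __name__ == "__main__":
    # Hypothetical numbers: a variant carried by 1 in 1,000 individuals
    # with a 1 percent reproductive advantage.
    n = generations_to_reach(p0=0.001, s=0.01, target=0.99)
    print(f"generations needed to reach 99% of the population: {n}")
```

Under these assumptions even a small advantage carries a rare variant through most of the population in a number of generations that is tiny on geological timescales, which is the sense in which beneficial mutations "accumulate" over time.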

Evolutionary biology by itself does not necessarily imply that God does not exist. But it admits the plausible view: “If God does exist, however, existing is about the only thing He has ever done. God is permanently unemployed, if, in the entire history of life, impersonal material forces were capable of doing the whole job and did do it. So if one attempts to hold a view of God as creator, it is a very attenuated view and one which tends to fade away into unreality.”11 There is still much to learn about the nature of biological diversity and its complexity. We do not yet know how genetic information, as encoded in the DNA, came into existence to start life out of single-celled predecessors. That this is what happened millions of years ago is a reasonable scientific conjecture not yet refuted. Man learnt thousands of years ago how to accelerate the natural evolution process through selective breeding, i.e., by reducing randomness in the selection process, to obtain, say, dogs with specific characteristics (e.g., size, body color, hair type, demeanor, etc.) or plants modified for taste, made sturdier, etc., within a few thousand years rather than the hundreds of millions of years that natural selection would take. Early human-engineered breeding practices have now advanced to an extent where we can clone living organisms and directly modify a living or dead organism’s DNA or even ab initio design DNA to create new organisms in the lab. We call it biotechnology, the core of which is genetic engineering. Creation of living matter is no longer a mystery, but how non-living matter gets turned into life and vice-versa is still a mystery.

Man’s association with dogs goes back to some 10,000 to 30,000 years ago. It is generally believed that “all dogs, from low-slung corgis to towering mastiffs, are the tame descendants of wild ancestral wolves.”12 Wolves, living in and around human surroundings, grew tamer with each generation until they became domesticated permanent companions. Dogs have cross-bred so often with wolves and each other that we have a great variety of them. The process itself indicates that dog domestication may have happened several times spread over different geographies and times. Some day, molecular biologists may tell us how ancient canines relate to each other and to modern pooches. The domestication of the grey wolf into dogs, man’s reputed best friend, happened long before the Industrial Revolution (1760-1840), before literature and mathematics, and before bronze, iron, and agriculture. This ancient partnership between man and animal entwined the fate of the two species. “The wolves changed in body and temperament. Their skulls, teeth, and paws shrank. Their ears flopped. They gained a docile disposition, becoming both less frightening and less fearful. They learned to read the complex expressions that ripple across human faces. They turned into dogs.”13

The domesticated dog is an outstanding example of man being the most important factor on Earth in changing the environment. It is he who could domesticate a wild species through genetic breeding in a very small fraction of the time that Nature would have required. And now that man has learnt the secret of creating new species in a lab, the time is not far off when he will be doing it on a mass scale.

Interestingly, no religion talks about either the bacteria or the dinosaur or the evolution of man from the great apes. The dog does get a mention. Till an explosion in scientific knowledge occurred and directly began to affect the structure of human societies through new schemes of division of labor and its institutions, religion had a stranglehold on man that transcended the powers of human rulers. Since the 20th century, religion’s power over educated man has begun to erode with increasing momentum. The simple dilemma of the millennials is: Should they enjoy and explore life on Earth or secure a place in heaven by servilely appeasing an unseen, indescribable God who communicates with men through messengers claiming to carry revealed messages whose contents are increasingly at variance with science? Science is progressive and open to correction; religion is regressive and dogmatic.

Religions are oblivious of millions of species that once existed on Earth and have since vanished and millions that will emerge on Earth in the future.14 Indeed, we have no idea how, and to what, Homo sapiens will become ancestors. For example, geology tells us how plate tectonics has changed the location of continents through movements of plates on the Earth’s surface. This has helped scientists correlate in spatial-temporal terms the geographical distribution of animals and plants, both living and fossil, with plate movements. This is amazing evidence for evolution by shared descent. It is generally estimated that there were about fifty thousand species of vertebrates just 65-70 million years ago, toward the end of the Cretaceous. Of those, fewer than twenty gave rise to some one hundred thousand species of vertebrates that exist now. The rest became extinct.15 One wonders why God let them all die instead of letting them live in harmony.

Life on Earth began nearly four billion years ago. In another billion years life will become extinct because the Sun’s brightness is increasing nearly 10 percent per billion years, enough to extinguish life on Earth by incineration.16 In another 10 billion years from now, the Sun too will die in a spectacular display of fireworks. It does not matter if we devoutly and religiously believe the Sun to be a god or not.17 As William Provine says,

Let me summarize my views on what modern evolutionary biology tells us loud and clear – and these are basically Darwin’s views. There are no gods, no purposes, and no goal-directed forces of any kind. There is no life after death. When I die, I am absolutely certain that I am going to be dead. That’s the end of me. There is no ultimate foundation for ethics, no ultimate meaning in life, and no free will for humans, either.18

This is quite the opposite of what religions preach to us, namely, that God created life and created humans separately. Humans and chimpanzees do not share a common ancestor. God gives life after death and He gives us an absolute foundation for ethics. He also gives us the ultimate meaning of life and gives us free will to go astray or seek genuine understanding of Him and shoulder responsibility. No reason was ever provided for not creating the perfect man and the perfect universe in the first place. Also, free will gives man the opportunity to act as nastily and irresponsibly as God does, like putting people in hell-like prisons and fanning vindictiveness. There is no Earthly reward for praising God and suffering while doing so. The biologically evolving Homo sapiens are indeed a small part of a complex process; they are not the final goal of evolution. “Think of us all as young leaves on this ancient and gigantic tree of life – connected by invisible branches not just to each other but to our extinct relatives and our evolutionary ancestors.”19

We, the Homo sapiens, have been around for about 300,000 years.20 Records of our civilization date back approximately 6000 years. About 12,000 years ago, after more than 99% of mankind’s life on Earth, man transitioned from being the nomadic hunter-gatherer to a pastoral-agricultural life of rearing animals and sowing seeds. This lasted till about 1500 AD. During this period society structured itself into families; women took care of the household, men earned a livelihood. From about 1500 AD to the latter half of the 20th century, an industrial economy developed with increasing growth of industrial activity and mechanization of agriculture. Within five centuries, the economy graduated from using animal power to steam power to fossil fuel power to electrical power. Along with changing sources of power, society too restructured itself into increasingly complex communities–extended families, cities, nations, alliances, institutions, modes of governance, dominions, etc.–and economies that ranged from family businesses run locally to multinational corporations operating globally and employing millions of men and women. Women were gradually weaned away from the hearth to the power corridors of corporations, competing with men for power and success in all spheres of life–business, politics, arts, science, etc. Since the late 20th century the world has begun to transform rapidly into a post-industrial economy which expects to power its way to another future using wind, solar, and information power. This, in brief, is the progress of human civilization.

In the hunter-gatherer stage, humans and their socio-economic organizations and skills developed at an ultraslow pace consistent with Darwin’s theory of evolution by natural selection.21 In the subtitle of his book, On the Origin of Species by Means of Natural Selection (1859), Darwin aptly saw it as the “Preservation of Favoured Races in the Struggle for Life”. The struggle continues. The core hypothesis of the theory is that organisms with traits that help in adaptation are more likely to survive and reproduce than their peers in a given environment. The role of the human mind would have been negligible in such an evolutionary process. Human population was sparse; space required for survival per inhabitant was about a square mile and demanded a nomadic life involving extraordinarily land-intensive activity.22 Its advanced technology was built around stone to make implements with a sharp edge, a point, or a percussion surface.

In comparison, in a span of a mere 12,000 years, in the agricultural stage, the human brain-mind began to flex its neural network and mentally awakened humans began to organize into families and communities with progressively more sophisticated systems of division of labor according to the size of the community and its contextual function. An adaptive civilization emerged from an evolutionary process that took survival of the fittest to the community level:

One of the earliest defining human traits, bipedalism – the ability to walk on two legs – evolved over 4 million years ago. Other important human characteristics – such as a large and complex brain, the ability to make and use tools, and the capacity for language – developed more recently. Many advanced traits – including complex symbolic expression, art, and elaborate cultural diversity – emerged mainly during the past 100,000 years.23

Biological evolution is not about individuals; it is about inherited means of growth and development that enhance the survivability of a population of a given species evolving in a given habitat. The most important characteristic feature of the agriculture stage of human evolution was the emergence of an adaptive brain-brawn feedback system that would continuously monitor and tweak the environment to improve conditions for human survival. Within its limited geographical perimeter, a community freed itself from the terrors of the animal kingdom where Nature is “red in tooth and claw”.24 For the first time, humans evolved not purely by physical adaptation in an environment but also by mentally adapting to and changing the environment, putting their minds to work in coordination with the rest of their bodies.

Once the capacity to create and use language and the ability to work with metals (copper, bronze, iron) developed, human civilization evolved rapidly. Communication became the backbone of human civilization to link minds, exchange ideas, govern, intimidate, chastise, reward, entertain, create and commune with gods, and link past and future generations. It invented the rule of law, put its faith in an unseen, omnipresent, omniscient, omnipotent God, nurtured the fine arts, and laid the foundation for logic, mathematics, and physics. The agriculture stage proved to be vastly more productive than the hunter-gatherer stage; it allowed population density to increase to a few hundred per square mile in settlements with greater safety and food security.25 Farming communities gradually developed groups with specialized roles, e.g., soldier, ruler, and eventually the institution of individual property rights. When individual farmers began owning and cultivating their plots of land, it created a competitive spirit that greatly boosted efficiency and productivity. North and Thomas note:

It is this change in incentive that explains the rapid progress made by mankind in the last 10,000 years in contrast to his slow development during the era as a primitive hunter/gatherer.26

In the agriculture age, the role of the human mind had suddenly increased. The brain and the brawn (scholar and soldier) were competing for supremacy in social status. Human population and sustainable population density per square mile had greatly increased. In his 1776 classic book, An Inquiry into the Nature and Causes of the Wealth of Nations, Adam Smith was clear that the wealth of nations depended on capital, labour, and mineral resources.27

The world population in 10,000 BCE is estimated to have been 4 million; in 5,000 BCE it was 5 million; in 3,000 BCE it was about 14 million; in 1 CE it was 200 million; in 1500 CE it was 458 million; in 1900 CE it was 1,650 million; in 2000 CE it was 6,127 million; in 2010 CE it was 6,930 million; in 2015 CE it was 7,349 million. The UN’s projected figures are 7,717 million for 2020 CE; 8,083 million for 2025 CE; 8,425 million for 2030 CE; and 9,551 million for 2050 CE.28 (The projected figures have no meaning when the world is undergoing a massive phase transition where mass unemployment will likely decimate a huge part of the world’s population. This is elaborated in later sections of this paper.)
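The pace of change implied by these estimates is easier to compare when each interval is converted into an average compound annual growth rate. The short Python sketch below does only that arithmetic on the historical figures quoted above. The function and variable names are our own, BCE years are entered as negative numbers, and the missing year zero is ignored, so the results are rough indications rather than demographic estimates.

```python
# World population estimates quoted in the text, as (year, millions).
# Negative years are BCE; the absence of a year 0 is ignored (assumption).
ESTIMATES = [
    (-10000, 4), (-5000, 5), (-3000, 14), (1, 200), (1500, 458),
    (1900, 1650), (2000, 6127), (2010, 6930), (2015, 7349),
]


def annual_growth_rate(start, end):
    """Average compound annual growth rate between two (year, population) points."""
    (year0, pop0), (year1, pop1) = start, end
    return (pop1 / pop0) ** (1.0 / (year1 - year0)) - 1.0


def growth_table(points):
    """Return (start_year, end_year, rate) for each pair of consecutive points."""
    return [(a[0], b[0], annual_growth_rate(a, b))
            for a, b in zip(points, points[1:])]


if __name__ == "__main__":
    for year0, year1, rate in growth_table(ESTIMATES):
        print(f"{year0:>7} to {year1:>5}: {100 * rate:.4f}% per year")
```

On these figures the interval from 1900 to 2000 CE works out to a little over 1.3 percent a year, against well under 0.01 percent a year between 10,000 and 5,000 BCE, which gives a sense of how sharply growth accelerated once industrialization set in.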

Industrialization saw a further sharp rise in productivity, urbanisation and urban population density and above all another radical change in lifestyle; the percentage of a country’s population engaged in agriculture, instead, became an index of poverty! Compared to agriculture, manufacturing requires little land; its growth-limiting factors are skilled labour and capital. Skilled labour is much more scalable through training than finding cultivable land. Increasing population density led to more efficient capital markets (partly due to closer proximity of transacting parties), which, in turn, made financing of manufacturing capacity easier, leading to further increases in output. At some point in the 19th century, in Europe and the U.S., this upward spiral of economic growth got a further boost from advances in technology that led to more wealth, more capital formation, still more technological progress, in an apparently self-sustaining, gravity-defying, upward flight.29 It saw airplanes, rockets and spacecraft!

Until the 15th century, progress in Europe substantially depended on transfer of technology from Asia and the Arab world. But in the 16th and 17th centuries, science in Europe made amazing progress due to a galaxy of scientists that included Copernicus, Erasmus, Bacon, Galileo, Hobbes, Descartes, Petty, Leibnitz, Huygens, Halley and Newton. From Newton onwards advances in science and technology took a meteoric path.30 Rational scientific thought gained deep roots and eventually destroyed the tyranny of the Catholic Church. Along with science grew great universities, research laboratories, and manufacturing industries dependent on technologies derived from scientific knowledge. It is now evident that highly industrialized nations became so not because of science alone but because they also assiduously built the “vital underlying institutions of property rights, scientific enquiry, and capital markets.”31 Over time, these factors have become encoded in their culture and have broken their chains of poverty. The industrial stage emphatically showed that recovery from disasters, such as the World Wars, is faster and surer when property rights are guaranteed.

Of the industrial era, in 1981, historian Daniel R. Headrick wrote:

Western industrial technology has transformed the world more than any leader, religion, revolution, or war. Nowadays, only a handful of people in the most remote corners of the earth survive with their lives unaltered by industrial products. The conquest of the non-Western world by Western industrial technology still proceeds unabated.32

At the time, nothing perhaps underscored the remarkable breadth of human ingenuity in developing technology more than the safe landing and walking on the Moon by two U.S. astronauts, Neil Armstrong and Buzz Aldrin, on 20 July 1969 and their subsequent safe return to Earth on 24 July 1969, with the event being broadcast live on TV to a global audience. In Adam Smith’s days (1723-1790) such a feat was possible only in science fiction, fairy tales and mythology.

Since the late 1980s, technology has advanced and expanded at a remarkable pace and scale. Advances in automation, electronics, communications, biotechnology, superconductivity, computing, plastics, and more have fundamentally changed society including the goals, ambitions, and lifestyles of the masses. Now trillions of dollars, millions of jobs, and geopolitical power flow from the exploitation of science-rooted technologies rather than from raw materials and smoky factories. In 1990, Erich Bloch, a former head of the National Science Foundation in the U.S., aptly summarized the role of technology in the market place at the time:

In the modern market place, knowledge is the critical asset. It is as important a commodity as the access to natural resources or to a low-skilled labor market was in the past. Knowledge has given birth to vast new industries, particularly those based on computers, semiconductors, biotechnology and designed materials.33

The economy dealt mainly with physical stuff – chemicals, steel, minerals, etc. The knowledge worker, though important, was more keen to shape the future than the immediate present. When the industrial revolution began, the world’s population was less than a billion people. By the year 2000, the population had exceeded 6 billion, and as we write (February 2019), the population has exceeded 7.68 billion.34 This sustained, rapid growth in population was possible because of a rapid increase in scientific knowledge, its application to development of technology, and above all society’s ability to educate and skill its people in a continuous stream sufficient to provide the science and technology (S&T) workforce required to run a multitude of new and emerging industries. During the industrial era, a self-sustained, increasingly prosperous synergy between man and natural resources developed till about 2000. Now, on the negative side, society is utilizing natural resources at a rate faster than their replenishment rate35; the environment is degrading. The hope is that humanity, because of a larger population of S&T trained people, will find solutions before things get out of hand. That may be wishful thinking. The industrial revolution showed that science is not just about esoteric theories but also a social enterprise. It is more so in the post-industrial society.

The post-industrial economy is based on knowledge-intensive services, which require even less land and labour than manufacturing. It requires huge capital investment and its appetite for science-rooted innovative technologies is ravenous; its market is truly global. The average-skilled workforce in the service sector, while initially scalable due to its natural ability to self-learn, learn by association with others in the community, or be trained in large groups, is no longer so. The skill levels required of even entry-level jobs have escalated rapidly and, with them, available jobs have further diminished. The middle class that expanded rapidly in the industrial stage is now poised to deflate equally rapidly because machines can now surpass most humans across the population in skills. Mr. Average of the middle class is on the verge of losing his identity and his dignity, not just his income.

The rise in AI has now begun to affect jobs that till recently required specialized training and PhD level education. The job market is churning, shrinking, and vaporizing. While machines can be easily fitted with AI, humans cannot. Ironically, machines neither look for jobs nor need them; they are emotionless, oblivious of the past and the future, without any need for spiritual balms or companionship. Yet, they have the potential to obliterate humankind by unintentionally snatching their jobs because exceptional men configured machines to compute, while Nature has configured most other men for mere procreation. Machines are not even aware that men see them as competitors. The previous stages, though they were a dramatic break from the past, carried the pleasant prospect of improving humanity’s collective lot. The present break has caused fear that only a few can live in style amidst machines, while the rest will sink into poverty for want of a job.

Skilling people to meet market demand for highly skilled and gifted people with deep inter-disciplinary knowledge is not scalable–it requires world-class research universities. Moreover, such people must remain on a learning curve to be competitive. To bridge shortages, they must be imported or poached, usually from developing countries, whose development agenda then takes a hit. Finally, the post-industrial society, to maintain its economic growth, needs an informed citizenry, tight immigration policies, and undoubtedly, new forms of government and societal structure. Inaction and lapses on such matters can easily lead to catastrophes and mayhem if unemployment soars. The palliative for unemployment cannot be unemployment insurance, social security, etc.; it will require novel and perhaps untested means. Immigrants deemed a drain on a country’s economy would be shunned. Euthanasia on demand may become morally acceptable in lieu of suicide.

In the post-industrial society, economic growth will depend on its ability to create and efficiently use knowledge to produce marketable products and services and turn them into necessities. Physical stuff is subject to the laws of scarcity; prices of material goods depend on demand and supply. Knowledge is intangible, shareable, extendable, creatable, storable, and marketable. It spawns ideas. Modern-day communication services permit instant and global spreading of ideas. This has turbo-charged the global economy by accelerating innovations and their commercialization on a global scale.

Economists, as always, were caught by surprise by this tectonic shift and the acceleration with which the post-industrial economy began moving. Most of them are still focused on the scarcity of physical and human resources while the economy has already come under the iron grip of unpredictable, disruptive, breakthrough creativity that comes from the mind. Their earlier theories based on land, labour, and capital productivity, and above all their irrational theory of rational expectations and efficient market hypothesis were anyway farcical. Today’s economist is eminently dispensable given that economic growth is now driven by human imagination and innovators for which no economic theory exists.

In 2008, Ellis Rubenstein wrote:

[I]n a post-industrial age the keys to economic sustainability for urban centers will be education, science, technology, finance, and a system that stimulates entrepreneurship. Urban centers whose researchers and university administrations remain “siloed”–disconnected from one another, from industry, and from venture capital–will fall behind. The achievement of excellence solely through the global collaborations of individual investigators will no longer guarantee the excellence of an institution, much less the region in which it resides.36

All these have come to pass. Upcoming technologies will be even more breathtaking given the R&D strides already made in biotechnology (stem cell manipulations, synthetic DNA, rapid DNA sequencing, etc.), nanotechnology (carbon nanotubes, graphene, etc.), superfast switching of quantum light sources, cloud computing, data analytics, efficient conversion of solar energy to electricity, etc. The source of economic growth is no longer the brawn but the brain, and above all artificial intelligence!

“Necessity is the mother of invention” no longer dominates; invention-driven necessity does. It began with the mobile telephone, the credit card, the Internet, and the Windows operating system–each became a daily necessity. AI-robots and humanoids will soon join them to serve those with a job.

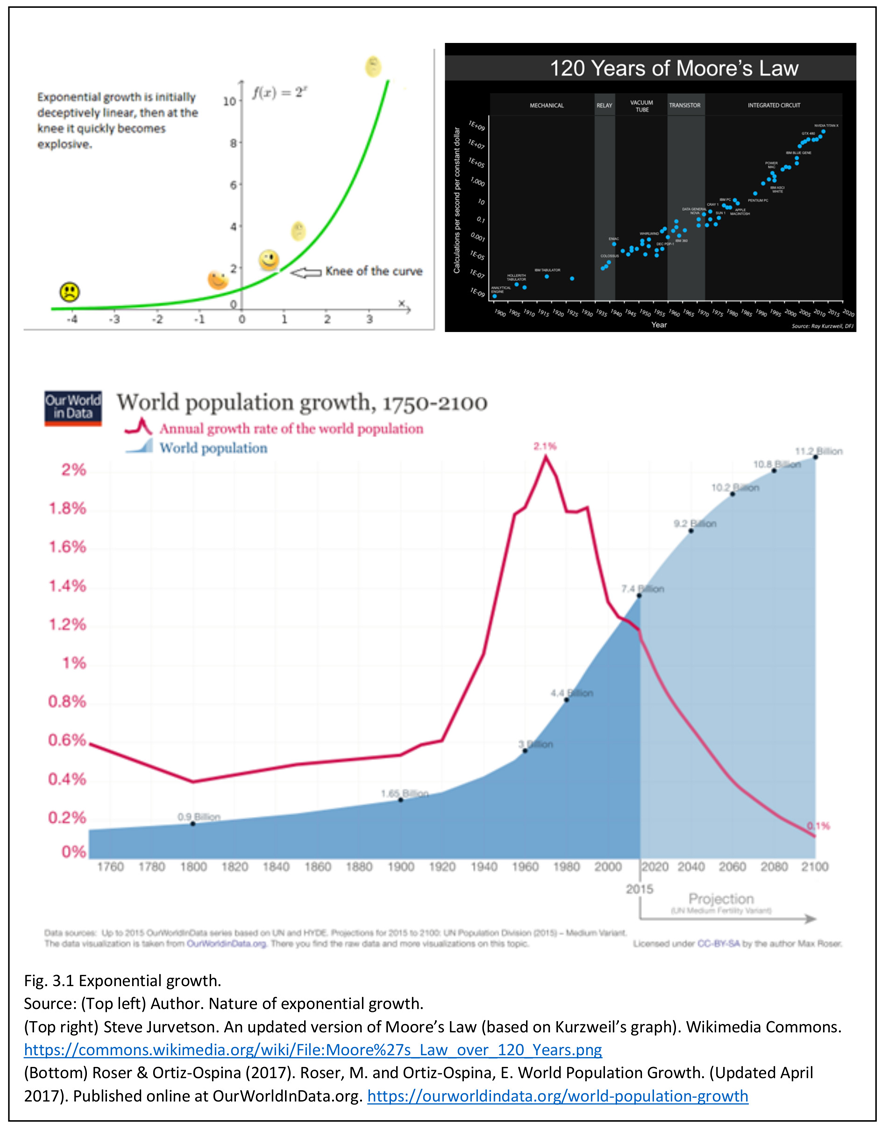

In terms of population growth rate, the world has seen three distinct phases. The first, pre-modernity, was characterized by very slow growth rate with equally low economic growth rate; the second, beginning with the onset of modernity, was characterized by, relative to the first, rapid increase in population yet supported by rising standards of living and improved health and longevity due to some amazing advances in science and technology. We are now in the third phase with an increasing population, a muted population growth rate and a downward trend in the creation of stable job opportunities while S&T advances continue to follow an exponential trajectory powered by AI and solar energy.

Generally, civil society establishes itself around a regulated set of social structures. Each structure stabilizes itself into a distinctive arrangement of institutions to facilitate and maintain human activities in myriad ways. Inter alia, through tradition, custom, and law, societies set up institutions for sexual reproduction, the care and education of the young, opportunities for gainful employment, and the care of the elderly in which marriage and kinship play important roles. For most of history, society gradually developed technology to avert risk to life and limb and the instinctive need to survive and propagate the species. Technology development began to rise sharply coinciding with the birth of the millennials. Fig. 3.1 summarizes the situation as it is developing for the millennials.

A distinctive aspect of emerging technologies is their ability to create necessities or a ‘must possess feel’ not felt before. This has led to aspiration-driven marketing. Much of this is visible in myriad digital communication-plus devices ubiquitously available and affordable. Instant communication that connects humans and devices via the Internet of Things (IoT) is now increasingly taken for granted. It has set in motion a disruptive restructuring of society by an “unseen hand” into a malleable global structure where people are tagged with a profile matrix that includes lifestyle, nationality, education, employability, religion, etc., usually in that order of importance. Society increasingly celebrates the individual rather than the family. Weakened family ties amplify the mental stress of unemployed individuals and prod them to re-examine their religious beliefs, usually inherited from the family. The first millennials were born in the incipient stages of this disruption, when it began affecting family structure and lifestyle, employment, skilling and reskilling opportunities (through rapid automation of many hitherto human activities, physical and mental), and the welfare management of a growing population of retirees. These retirees are culturally alienated from their millennial progeny, who in turn find themselves facing an uncertain and unpredictable job market. The old socio-economic structure is crumbling, and a new stable structure is yet to take shape.

The times when monarchs or governments could change things by diktat are over. Today a tiny group with disruptive ideas can create a start-up that can not only change a country but the entire world. The star millennials will tend to bow out in their 40s, flush with wealth, drained by stress, and in dire need of oxygen. The world is flush with millennials who have a deep hunger to learn and forge dynamic partnerships to rapidly expand their enterprises. Only a few will succeed.

The world now moves on the power of ideas that are becoming grander by the day. Archaic traditions and conventions are passé. The industrial revolution put brawn power out of business; the post-industrial revolution is putting rote education out of business. AI-machines are rapidly replacing rote educated humans. The key to survival for them is to improvise and quickly sunset habits, institutions, and practices of the past that can no longer harmoniously blend with the future. At the level of individuals, the problem is identifying and deciding what to sunset because the future is unknown, and their individual talents are often over-shadowed by AI. The emerging technologies now shaping humanity’s future include AI and robotics, novel modes of transport and renewable power, data mining and data privacy. Their impact will be huge but for the moment quite unpredictable.

How and when language evolved is not known; but it appears to have happened about 30,000 to 100,000 years ago. With language we express thoughts, gossip, create poetry, command and control, and much more. Our creativity, wealth of scientific knowledge, understanding of the Universe, memory of the past, etc. wholly depend on language. It even limits our ability to think and what we can think about. Language, intelligence, culture, and knowledge are intimately related.37

A defining feature of a sophisticated society is how it communicates with humans, machines, and institutions. That is how humans control and coordinate strategy. But the relationship between language and power is intricate. Thoughts get communicated through language (speech, script, and sign) and emotion (body language). Benjamin Lee Whorf (1897–1941) said, “Language shapes the way we think, and determines what we can think about.” And Ludwig Wittgenstein (1889–1951) said, “The limits of my language mean the limits of my world.” Thus, whoever speaks depends on language, but ultimately the power of language lies not with the speaker but with language itself.38 Anyone can acquire the power of language, even AI machines.

Communication channels for the millennials expanded suddenly with the invention of the microchip in 1959 (a product of the electronics revolution) that enabled the personal computer (1975 as kits) and eventually the present-day ubiquitous smartphone, which includes high-speed mobile broadband (4G LTE), motion sensors, a camera, and mobile payment features, all fitting into a shirt pocket. Consequently, the world of the millennials is much less human-centric than machine-centric. STEM-educated millennials need not just a language with which to express myths but also logos, i.e., a language with which to reason in accountable writing and speech. This need is fulfilled, to a good degree, by esoteric (and hence limited to relevant experts) modern scientific languages that depend heavily on the language of mathematics. Scientific languages speak with “the highest, universally binding authority, world-wide about everything in the world” and their “authority is fundamentally egalitarian and democratic; for it and with respect to it, nothing counts but ‘the non-violent force of the better argument’ (Jürgen Habermas).”39 This, of course, does not eliminate the criticism that scientific languages also narrow man’s perception of reality to what can be expressed in scientific language. Our successor species may evolve to deal with this problem better because their survival may depend on it.

The electronics revolution was ignited by developments in human-computer interface, networking of digital machines, and microchips. In the millennials’ world, man-machine interaction and action-at-a-distance at the speed of light are taken for granted. That is only the beginning. Globally connected digital devices, the Internet of Things40 (IoT), with each device becoming smarter by the day through embedded AI that includes cognitive functions, are on their way to becoming ubiquitous. Along with this, human-computer interactions are becoming more like human-human interactions because AI has now made significant advances in mimicking cognitive functions. This raises the specter of en masse retirement of humans even from “intelligent” tasks. Technology and society are now so intricately interwoven that without understanding their relationship in terms of information flows, humans may inadvertently place themselves in extreme danger of becoming unemployable. For developing countries like India, the danger is even greater.41 Emerging technologies like IBM Watson42, Google’s AlphaGo43, and Carnegie Mellon University’s Libratus44 clearly indicate that such a possibility may occur soon. On another important front, AI may provide an amazing service, that of peer review of scientific papers, in the near future.45 When such a feat is accomplished, it will be but another step to robots writing papers, making even human researchers redundant, inefficient, and obsolete. As Janne Hukkinen noted, “New knowledge which humans no longer experience as something they themselves have produced would shake the foundations of human culture.”46 The greater the sophistication in communication, the more elaborate will be its language, especially its associated grammar. The post-industrial economy will depend on machine-interpretable, error-free communications.

When talking about AI and its impact on jobs for humans, we tend to overlook that AI is human-created, and the related technology is often patent protected. It is this technology that is super-charging AI robots into delivering intellectual output. We now face an unusual situation. By law, patents can be granted to only human inventors of a novel, useful and non-obvious invention and they must describe their invention in writing (written description) with clarity and in sufficient detail so that others knowledgeable in the arts related to the invention can reproduce it independently. The law does not permit patents to be granted to machines that invent. Further, if an AI machine comes up with an invention, it will be considered an obvious invention, because similar machines or its clones will be deemed able to come up with the same inventions if called upon to do so! It will be obvious invention by machine instinct. Furthermore, given a powerful enough AI machine, it will often be possible to show that a patented invention could have been invented (and hence the invention anticipated based on prior art) by this machine if only it had been asked to create one with its current and possibly past knowledge and computing power.

Historically, the intellectual property (IP) protection system arose to facilitate, indeed drive, economic growth as a means of improving people’s well-being by equitably rewarding inventors. If someday AI-driven humanoids rule the Earth, economic growth and human well-being may no longer be a criterion for generating IP. It will be generated on its own, algorithmically or randomly, all, of course, under the mathematical laws of Nature. Thus, such studies as conducted by the National Academies (of the U.S.) may well be futile efforts in ‘Advancing Concepts and Models for Measuring Innovation’.47

The IT revolution has unevenly affected the world in terms of enhancing living standards, governance, the economy, employability, etc. Successful diffusion and adoption of IT is time and resource intensive. It has led to widening productivity gaps between frontier firms and others. Income and wealth inequality has increased. In the past two decades, the top one percent have gained enormously, while the bottom 80 percent have steadily lost. The job-mix in the economy continues to change faster than people can adapt since many routine tasks are being automated; skill levels required for new jobs are steadily rising. There is great uncertainty about future wage-earning jobs: what will they be, how long will they last, what skills will they require, what future prospects will they carry, where will they be available, how can one reskill, etc. In fact, how the world will reorganize itself under the pressure of technological advances is not at all clear. There will be phase transitions galore at different skill levels for humans. Gathering and analyzing data to even understand or discern trends as to what is happening around us has become impossible because the situation is changing so fast. Statistical analysts are out of their depth. New ways to integrate various data sources without compromising privacy and confidential business information could reveal useful information about the changing workforce, but it would only be useful as history and not for planning. The best forecaster for the future remains Kurzweil.48 The situation is heading towards chaos. However, education will be a key influence on worker income, but matching education with opportunity will be a loaded game of dice.

Languages (natural and programming) connect humans and machines in seemingly random fashion. Graph theory tells us that massively connected men and machines will lead to phase transitions. Every time connectivity breaches a critical point, a phase transition occurs in the way nodes form or reform into clusters. The millennials’ world is already vastly different from the world of their parents, who were far less connected. Since 1960, we know from graph theory that if we have a set of $n$ nodes and start linking them randomly, then when $m = n/2$ links are made, a phase transition in the graph occurs in the sense that a giant connected component in the graph spontaneously appears, while the next largest component is quite small.49 Additionally, such giant components remain stable in the sense that however we add or delete $o(n)$ edges, the size of the giant component does not change by more than $o(n)$. What is seen is that even uncoordinated linking, whether in protein interaction networks, telephone call graphs, scientific collaboration graphs, or many others, shows such generic behavior of forming giant components.50 This immediately suggests a basic involuntary mechanism by which a society at various levels of evolution spontaneously reorganizes itself as nodes (people, machines, resources, etc.) connect or disconnect in apparent randomness. The effect is highly visible in the IoT world of the millennials, who become spontaneously polarized on issue-based social networks.
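
The random-linking threshold described above can be explored numerically. The following is a minimal sketch, not taken from the cited works: it assumes a simplified random-graph model in which each link joins two nodes drawn uniformly at random (self-links and repeated links are allowed for brevity), and the function name `largest_component_fraction` is purely illustrative. It uses a union-find structure to track clusters and reports the share of nodes in the largest cluster after a given number of links.

```python
import random


def largest_component_fraction(n, m, seed=0):
    """Link n nodes with m random edges; return the largest cluster's share of nodes.

    Simplifying assumption: endpoints are drawn uniformly with replacement,
    so self-links and duplicate links may occur.
    """
    rng = random.Random(seed)
    parent = list(range(n))   # union-find parent pointers
    size = [1] * n            # cluster size, valid at root nodes only

    def find(x):
        # Follow parent pointers to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for _ in range(m):
        ra, rb = find(rng.randrange(n)), find(rng.randrange(n))
        if ra != rb:
            # Attach the smaller cluster to the larger one.
            if size[ra] < size[rb]:
                ra, rb = rb, ra
            parent[rb] = ra
            size[ra] += size[rb]

    return max(size[find(i)] for i in range(n)) / n


if __name__ == "__main__":
    n = 20000
    # Sweep the number of links through the critical value m = n/2.
    for ratio in (0.25, 0.4, 0.5, 0.6, 0.75, 1.0):
        m = int(ratio * n)
        frac = largest_component_fraction(n, m, seed=1)
        print(f"m = {ratio:.2f} n  ->  largest cluster holds {frac:.3f} of all nodes")
```

Running the sweep should show the largest cluster holding a negligible share of nodes well below $m = n/2$ and a substantial share well above it; the exact figures depend on the seed and on the simplifications noted above.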

Rapidly increasing connectivity among men and machines has already imposed upon the global socio-politico-economic structure, a series of issue-dependent phase transitions. More will occur in areas where massive connectivity is in the offing. Immediately before a transition, existing man-made laws begin to crack, and in the transition, they break down. Post-transition, new laws must be framed and enforced to establish order. Since such a phase transition is a statistical phenomenon, the only viable way of managing it is to manage groups by abbreviating individual rights and enhancing those of its leaders. The emergence of strongman style leadership and its contagious spreading across the world is thus to be expected because job-starved millennials will expect them to destroy the past and create a new future over the rubble. It appears inevitable that many humans will perish during the transition for lack of jobs or their inability to adapt to new circumstances. Robots will gain dominance over main job clusters while society reorganizes. Ironically, robots neither need jobs, nor job satisfaction, nor a livelihood. So there will be an aura of rational ruthlessness in the reorganization.

We posit a definition of knowledge and intelligence. Given massive amounts of data, we condense it into a smaller axiomatic system from which a subset of the data can be regenerated and from which data outside the subset can be created by interpolation and extrapolation. The resulting axiomatic system is called knowledge, and the process of deducing the axiomatic system is called intelligence. The process of regeneration, interpolation, and extrapolation is called computation. The baby steps of knowledge creation are what babies take with a primitive but malleably evolvable brain–the physical matter that acquires, analyses, and processes data. Further evolution of the brain depends on the environment with which it interacts: it establishes multiple feedback loops, continuously condensing its already acquired knowledge and knowledge acquired from the environment into abstract concepts, which themselves undergo further compaction.

The tool for doing this is a structured language that comes with a set of symbols, and a grammar for creating valid symbol patterns. This notion is encapsulated in Shannon’s information theory.51 The notion of computation and computability is captured in the mathematically defined Universal Turing Machine,52 and the notion of axiomatic systems is formalized in Gödel’s theorem.53 Creation of knowledge from data about which we know nothing begins with the assumption, and therefore the search for an axiomatic system, that there is at least one nugget of knowledge to be found. The way to acquire it, or at least the way humans go about acquiring it, is the way we find the prime factors of composite numbers: a systematic process of guessing. It begins with an intuitive guess (which itself indicates the current biased state of the brain), which we call a conjecture (another way of saying that I am curious to find out if my guess is right).

The common man’s intuition or common sense usually turns out to be mundane and its accuracy lamentably dubious. It is a very low-level knowledge acquisition capability shared by almost all Homo sapiens. Only a minuscule minority of the human population ever gets an opportunity to be formally trained, and an even smaller number get the privilege of being mentored and becoming mentors of such people. It is this minority of the minority that advances human knowledge by making it more and more compact or abstract. Gauss, Fourier, Gödel, Turing, Galileo, Newton, Maxwell, Clausius, Einstein, Heisenberg, Schrödinger, Shannon, etc. were among them. Such geniuses compact and abstract knowledge to such a level that a machine fed with it can answer questions by interpolation, extrapolation, and derivation using mathematics.

Our discussion about knowledge, intelligence, and perception must necessarily be restricted to the axiomatic systems the brain-mind system can handle. An axiomatic system must be consistent both in the axioms and the theorems derivable from it. It does not allow an iota of inconsistency. As far as we can tell, the Universe is an axiomatic system; its axioms are inviolable laws of Nature. A desire to understand Nature is an attempt to minimise an information gap – the information we try to prise from Nature and Nature’s ability to withhold by making us run around in circles.

Physics gives rise to observer-participancy; observer-participancy gives rise to information; and information gives rise to physics.54 – John Wheeler

What we call the laws of physics is the information we extract from Nature, compact and package within a falsifiable conjecture which we have so far failed to refute. Because Nature plays hide-and-seek, we are forced to conjecture (or hypothesize) and endlessly refine, discard, and amend our conjectures by strenuously trying to refute them.55

Since all axiomatic systems can be recast as an arithmetic system (that is why AI systems run on computers), axiomatic mathematics provably tells us that in an inconsistent axiomatic (or belief) system, you can prove or disprove anything in your irrational way, thus rendering the system useless.56 It is also known that barring very trivial systems, it is not possible to prove the consistency of an axiomatic system. Hence, using rational arguments to seek unanimity in answers requires caution. Scientists make conjectures, and a conjecture is scientific only if there is potential scope of finding an error.57 The basic premise is that we can learn from our mistakes. “Though [a mistake] stresses our fallibility it does not resign itself to scepticism, for it also stresses the fact that knowledge can grow, and that science can progress–just because we can learn from our mistakes.”58 The process is criticism controlled. If you cannot bear the stress of being refuted, you cannot be a scientist; you can be a dogmatist. Religion insidiously teaches dogmatism. Hark Richard Dawkins, “not only is science corrosive to religion; religion is corrosive to science. [Religion] teaches people to be satisfied with trivial, supernatural non-explanations and blinds them to the wonderful real explanations that we have within our grasp. It teaches them to accept authority, revelation and faith instead of always insisting on evidence.”59 Therefore, religion cannot be axiomatized.

Our sole responsibility is to produce something smarter than we are; any problems beyond that are not ours to solve … [T]here are no hard problems, only problems that are hard to a certain level of intelligence. Move the smallest bit upwards [in level of intelligence], and some problems will suddenly move from “impossible” to “obvious.” Move a substantial degree upwards, and all of them will become obvious.60 – Eliezer S. Yudkowsky, Staring into the Singularity, 1996

AI machines are on their way to make some hard problems solvable. In 1936, Alan Turing proved that mathematics, once formalized, is completely mechanizable and thus created computer science.61 Now it appears that human intelligence and emotion are mechanizable too. Perhaps not surprising since Max Tegmark notes: “Our reality isn’t just described by mathematics – it is mathematics … Not just aspects of it, but all of it, including you.” Thus, “our external physical reality is a mathematical structure”62. To this we add another powerful observation, from Karl Popper, whose influence on modern scientists far exceeds that of any other philosopher: “We are products of nature, but nature has made us together with our power of altering the world, of foreseeing and of planning for the future, and of making far-reaching decisions for which we are morally responsible. Yet, responsibility, decisions, enter the world of nature only with us”63

AI mimics thinking with unprecedented memorization and computation; it treats thought as a mathematical process. The human species conjured AI into existence, just as it did the laws of Nature in its mind. Chance favors the prepared mind is the mantra every millennial must believe in, not as a matter of faith but as a law of Nature. It is an analogous version of the law of phase transition in graph theory. Continuous random acquisition of knowledge favors those with perseverance.

Socio-economic dynamics is ruled by perceptions derived from limited facts, their assumed relevance, reliability, and accuracy in a certain context, held beliefs (axioms), man-made rules of socio-economic governance, ingrained personal biases in interpreting, interpolating, and extrapolating information, etc. Perceptions are refined iteratively (as in a closed loop feedback system). The results that emerge from this dynamics include socio-economic structures based on division of labor (including the creation and support of the fine arts, STEM related activities, order, disorder, stability, chaos, phase transitions, etc.), and ways of attaching certain values (say, monetary, fame, place in society, etc.) to products, processes, and services that fleetingly manifest. Of such a dynamical system, we ask what products, processes, and services it can produce at any given place and time. Our ability to model the system mathematically will generally decide our ability to predict the system’s behavior; anything less would be hand-waving.

As in biology, structure determines function given a context. What we can conjecture are the possible ways society might restructure and within those structures how the millennials may be forced to function. Despite the fragility of human knowledge, the survival and development of the millennials will depend on their ability to create, communicate, conserve, archive and transmit knowledge seamlessly to succeeding generations. AI-enabled machines come embedded with these abilities. Further, they are unburdened of religion and the fear of divine retribution. Humanoids, genetically coded to be oblivious of pain, and devoid of emotions, would be similarly blessed. AI presents both a cultural and a technical shift as have other inflexion points in past stages of human advancement, e.g., the introduction of the printing press, the railways, the telephone, the ocean liner, etc.

During the industrial stage, most people reached their peak capacity to educate and skill themselves in activities (including earning a living) that required mechanizable “intelligent” rote education. That AI machines, in principle, can far surpass humans in such activities had become evident when Alan Turing showed how arithmetical calculations can be mechanized64 and mathematicians showed that any axiomatic system can be arithmetized65. This meant that any form of rational knowledge could be axiomatized and rote education embedded in machines. While creating new knowledge would still require human creativity, once that knowledge had matured and was formalized into an axiomatic system, it would be mechanizable and expandable. It would then be a matter of time that humans would increasingly face competition from machines and eventually be overwhelmed by them. Kurzweil has predicted66 that this would happen by 2029. Recent advances in AI indicate it is very likely to be so. Further, advances in deep learning by machines indicate that through self-learning they can become highly creative and creators of original technology (the patent system will go for a toss) and scientific discoveries without human intervention may well become the norm. When that happens, who will decide the destiny of mankind and which religion will rescue man from machine bondage?

Once humans master the art of designing DNA (we already know how to design cells not found in Nature and edit DNA), they will create living beings of their own design. We also anticipate that when AI-machines master the art of learning from mistakes (i.e., the art of making conjectures and refuting them in a spiraling process towards better knowledge, a possibility that exists), they would have taught themselves how to handily beat humans in intelligent activities, and thereby break the human spirit.

The seeds of this were sown when the AI program called AlphaGo decisively defeated the world’s greatest Go players in 2016.67 AlphaGo has achieved what many scientific researchers dream of achieving in other branches of knowledge. It shows that a machine can teach itself in an immensely small fraction of the time it takes humans to explore ab initio any axiomatic system. The last bastion of human supremacy over all other creatures on Earth has been cracked by AI-machines. This is the world the millennials have stepped into. We have no idea how AI-machines may organize themselves into networks and network with humans. Will the future be written and created by humanoids with humans finding themselves relegated to footnotes and appendices once biotechnology and AI integrate?

Henry Kissinger notes:

Heretofore, the technological advance that most altered the course of modern history was the invention of the printing press in the 15th century, which allowed the search for empirical knowledge to supplant liturgical doctrine, and the Age of Reason to gradually supersede the Age of Religion. Individual insight and scientific knowledge replaced faith as the principal criterion of human consciousness. Information was stored and systematized in expanding libraries. The Age of Reason originated the thoughts and actions that shaped the contemporary world order.68

That world order now faces an upheaval where humans may be left to wonder what is their role in the Universe and what is the meaning of life if emotionless and faithless machines are destined to rule over humans? What is the role of God in human life if data and algorithms ungoverned by ethical or philosophical norms rule? When in ancient times, man created God in his own image, did he ever imagine that his God would be overthrown unceremoniously by another man-created invention, the AI-machine? That creating Homo sapiens was not God’s crowning glory but an intermediate instrument for creating the Internet age and mock man for his emotions and naïve faith in irrational religion that promises only a fake afterlife.

Our search for meaning in life is now propelled by search engines roaming the Internet and not by our brain. The World Wide Web (WWW) has changed the way we think, what we think about, and how we communicate our thoughts. The millennials’ cognitive abilities are very different from those they were born with and weaned on before the Internet invaded their lives. They are shaped not just by what they read but how they read. Not only has their lifestyle changed but so has their thought style. All the work of the mind–deep thinking, exhaustive reading, deep analysis, introspection, etc.–is now delegated to AI-machines. Humans have thus relinquished their right to control their individual lives and direct their souls (maybe deep inside they already know there is no soul!). If machines can outdo humans so easily without a soul, then perhaps the soul is holding humans back from reaching their potential. Perhaps it is time AI-machines became our role models and our mentors.

In the agriculture stage man was first, in the industrial stage man gave way to the engineered system, in the post-industrial stage the system is giving way to the AI-machine, which, in turn, is taking control of our intellectual lives. The Internet is now our efficient and automated collector, transmitter, and manipulator of information, guided by mathematical algorithms as defined by Alan Turing in 1936. The automated search engine was the first important step towards AI. The human brain is now seen as a vastly outdated computer in need of a faster processor, a phenomenally larger hard drive, and a more efficient operating system in its next speciation. The highly stressed brain of the millennials will seek relief via evolutionary genetic mutations. The Homo sapiens neural net is about to evolve and with it will arrive one or more new species that improve upon the Homo sapiens.

Once Alan Turing69 showed that mathematical algorithms are mechanizable, Claude Shannon70 showed that information has a precise mathematical description, Rolf Landauer71 showed that information is physical and can only be harnessed and processed by the laws of physics, and David Deutsch72 showed that quantum computers can use quantum physics in ways classical computers operating under the laws of classical physics can never do, the spiritually-oriented human brain-mind system was on its way to obsolescence. The choice of silicon turned out to be better than gray matter, the choice of photon and electron turned out to be better than natural neurons for encoding and processing information. The time is approaching when human intelligence will humbly bow to AI which can solve real life problems within the authority of the laws of Nature rather than to God who cannot.

The Enlightenment sought to submit traditional verities to a liberated, analytic human reason. The internet’s purpose is to ratify knowledge through the accumulation and manipulation of ever-expanding data. Human cognition loses its personal character.73 Individuals turn into data, and data become regnant.74

Ever more young people are chasing too few quality jobs, especially in the less- and under-developed countries. Massive unemployment will lead to massive law-and-order problems. Apart from the uneven geographical rise in population, the migration pattern of gifted workers will decide the fate of poorer nations. A UN report projects that by 2050, the world’s population will approach 10 billion from the present 7.6 billion plus. Over half of the increase is expected to be in Africa:

Africa’s share of global population, which is expected to grow from roughly 17 percent in 2017 to around 26 percent in 2050, could reach 40 percent by 2100. At the same time, the share residing in Asia, currently estimated as 60 percent, is expected to fall to 54 percent in 2050 and 43 percent in 2100.75

While such projections may be meaningless for a world in the throes of an AI-driven phase transition, the U.S. President Donald Trump is determined, through his America First policy, to recover lost ground and put America at the crest before the transition is over. As Janan Ganesh succinctly puts it:

Pax Americana is not the natural order of things. It is a phase born of the most extreme circumstances. The US accounted for a third of the world’s output when it set up the Bretton Woods institutions, revived Japan and secured Europe … It now accounts for about 20 percent of global output. It does not have the wherewithal to underwrite the democratic world forever.76

Other leaders who fail to put their country first, may flounder. The war for talent has just begun. The services of talented minds will percolate through the IoT in trade bypassing immigration, cultural, and social barriers. Their physical movements when needed can be accommodated in tourist visas. The top talent in the world can organize themselves into a services trading community. This will soften the culture disruption faced by countries due to immigration and protect jobs for their citizens.

In the early 20th century, the divinely created horse and horse power were a source of pride, affluence, and motive power. Within 50 years man-made cars and tractors made the equine way of life obsolete. As demand for horses fell, so did horse prices, by about 80% between 1910 and 1950; they ceased to be a part of the workforce. In Hinduism, the horse is still deemed a mode of transport to heaven for a king if he sacrifices one. With kings going out of fashion, this mode of transport too has vanished. Now man-made robots are waiting in the wings to make the human way of life obsolete. What an irony–the march of civilization from spiritualism to materialism to robotism! By 2050, we should anticipate the superhero humanoid, part DNA-scripted living matter and part AI-scripted quantum computer, roaming the world, flexing its muscles and algorithms. As robots and robotics advance, humans look increasingly vulnerable. Not only are robots increasing in number, but the range of tasks in which they excel against humans is increasing too.77 No country has contingency plans to deal with the jobless masses that will arise in global manufacturing and routine services. Even global businesses are clueless about the future, e.g., how will the customer base reshape with changes in buying habits and spending capacity or how will businesses relocate themselves on the ground and the Cloud for market share and profits. It turns out that:

An overwhelming share of the growth in employment in rich economies over the past few decades has been in services, nearly half in low-paying fields like retailing and hospitality. Employment in such areas has been able to grow, in part, because of an abundance of cheap labour.78

A further fall in wages will push a big chunk of the middle class into poverty and loss of lifestyle. Rich countries that cushion the fall through unemployment benefits and various subsidies already know from experience that such support encourages people to pull out of the work force and into indolence, especially if families offer extra help. Such state altruism at taxpayers’ expense risks creating a heavy debt burden for future generations. Once the burden is so shifted, it becomes dormant. The other problem resides in the present: profits are more likely to flow to already wealthy shareholders, who save at high rates and hence contribute marginally to demand across the economy. Economists, as always, will neither anticipate future crises nor have solutions to problems as they arise. Why? AI machines will outsmart them! The worst-hit economy is likely to be China due to its heavy dependency on exports; coming out on top is likely to be the U.S. because of its ability to attract migrating talent.

The upcoming 5G technology will further transform society via the IoT. Future networks will have enhanced ability to steer driverless cars, enable surgeons to perform complex surgeries remotely, etc. With IoT, virtual and augmented reality will reach new heights. To survive, the millennials must hone their ingenuity, perseverance, and determination; break molds, take risks, and be resilient; listen to voices of change and progress. The demographic dividend perceived in some developing countries is really a demographic liability (AI is their nemesis). Any unknown phase transition will present blind spots widely missed by the pundits providing sage advice,79 and hindsight will prove them wrong! The fault lies in assuming that Homo sapiens are sacrosanct while Nature is preparing for their speciation in an entirely novel way. With speciation, questions of ethics, morality, religion, socio-economic structure, etc. will undergo a sea change to the extent that Homo sapiens may become an extinct species. There is a wide gulf between knowing that this can happen and believing that it will not happen. Therefore, assessing AI’s impact on social, cultural, and political settings may be irrelevant in the long run.

The robot is defined by its embedded AI. The AI revolution is the most radical transformation human civilization will experience in the post-industrial era. Robots, singly and in groups, can consistently perform flawlessly at peak levels and can combine peak skills. If any of them produces an invention, then en masse all of them can independently produce the same invention on demand. It is this ability that will permit their adopting a dynamic self-organized form of governance without a central authority. This raises a fundamental question: Can humanoids be prevented from becoming our rulers, ruling us with an even hand, rationally, justly, with the philosophy “to each according to his ability”, topped with equitable charity for the disabled needy, subject to available resources of goods and services? There will be no need for humanoids to treat natural humans with deference or even to treat human life as sacred; they may instead regard it as an eradicable, epidemic source of disposable ignorance and an undesirable burden on the earth’s limited resources. The humanoids, a unique fusion of the animate and inanimate, will have no need for either Heaven or Earth or the men who believe in them. In a world ruled by humanoids, if there is no place for any God, the question of separation of state and religion will no longer arise. Even then, traditional law must undergo a radical change, as must notions of morality, ethics, etc. As Carl Miller notes:

Traditional legal theory holds that to be culpable of a crime, you need intent, or “malice aforethought”. Where’s the intent in an algorithm, especially a randomizing one …? As activism becomes automated, it raises tricky ethical questions we are ill-prepared to deal with.80

However, questions related to the new world order that humanoids may establish can only be idle speculations since humans are unlikely to be consulted by them. Indeed, all such symbols as flags, citizenship, religious beliefs, etc., around which humans rally to proclaim their solidarity as belonging to a region, religion, etc. and to defend customs and traditions may become irrelevant. An algorithmically ruled world is unlikely to accommodate such soon-to-be historical human relics. Scientists have recently demonstrated in laboratory experiments that a genetic engineering technique known as “gene drive”, which uses a gene-editing tool called CRISPR, can rapidly spread a self-destructive genetic modification through a complex species, e.g., malaria-spreading mosquitoes.81 The risk of Homo sapiens being wiped out by accident or design by such means is no longer unthinkable.

With the millennials’ world undergoing dramatic changes brought about by automation and declining real job opportunities, the big question arises: “What about their children, their future?” Children lack a political constituency. Promulgation of laws for their education, health, safety, food, etc. will not guarantee absence of malnourishment, eradicate abusive child labor, or eliminate rampant sexual and physical abuse. It is not yet clear how their education, health care, and safety can be assured. Unless their future is secured, the very survival of Homo sapiens will be jeopardized.

Inability to learn is a death trap. In an AI-dominated world, rote education driven by unhealthy politics is misaligned with present needs; only adaptive self-learning will work. Poverty hurts biological development and thus undermines learning. Adaptive self-learning favors those capable of multi-dimensional learning. Homo sapiens may speciate with this feature genetically coded in them. The new species will be explorers if they are to survive in the changing world of work.82 There have been some extraordinary advances in AI in multiple domains, as we have noted above. It is too early to understand where they will all lead or what their impact will be on humanity, the restructuring of society, or even the world order. Investment momentum in AI has rapidly increased since 2014 and will not abate soon.83 But one can expect the number of sustainable players in the marketplace to shrink drastically soon while only a few players with deep pockets consolidate their positions.

A quest of AI researchers is finding ways of answering a query. The first significant step in that direction was Google’s search engine. We already know from Gödel’s incompleteness theorems and Turing’s result on the halting problem that there can be no general search method by which either AI or human intelligence can find answers to all kinds of queries, no matter how powerful an axiomatic system we discover. AI machines outperform humans because they can dig much deeper into data than any human can. AI thrives on prodigious amounts of data. AI is about big data, deep data, data mining, deep analytics, deep learning. Sources and types of data are many, as are the sensors for acquiring them and the means of storing them. Major sources of data are objects or entities that emit electromagnetic signals, audio signals, and textual matter. Of these, data in audio form is comparatively scarce and surprisingly less emphasised even though it is rich, e.g., a bat’s world is centered around its auditory sensors and very little on visual sensors. We have even less data related to taste, smell, touch, and mental states. The patterns they encode are likely to produce many surprises.84 In our daily life, such data play pivotal roles in what we do and in how, why, and when we do it. Superior species in the future may use such data more advantageously to make Homo sapiens, the only surviving species in the genus Homo, extinct. No God of any religion has ever mentioned our departed ancestors in the genus Homo. We are ignorant as to how Homo sapiens suddenly acquired language and through it, rational knowledge.

Big data. Big data is a repository of data collected, say, by a business in a day. Its specific contents may vary by context, e.g., it may include customer or client names, contact information, transaction information, etc. Big data is an enormous resource in business as an input to AI.

Deep data. An aggregation of big data becomes deep data when, with the help of experts in a particular knowledge area, the useful data is separated from the rest, say, by removing redundancies, and annotated for further analysis. Here one generally deals with exabytes or more of data.

Data mining. It is the art of analyzing large pre-existing databases and extracting information, often by finding correlations hiding in the data.
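
As a minimal sketch of the correlation-finding idea just described, the Python fragment below scans every pair of columns in a small table and reports the pairs whose Pearson correlation is strong. The table, its column names, the `threshold` value, and the function names are all hypothetical and chosen only for illustration; real data mining works on far larger databases and must guard against spurious correlations.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def strongest_correlations(table, threshold=0.8):
    """Return (column_a, column_b, r) for every pair with |r| >= threshold."""
    found = []
    for a, b in combinations(sorted(table), 2):
        r = pearson(table[a], table[b])
        if abs(r) >= threshold:
            found.append((a, b, round(r, 3)))
    # Strongest relationships first.
    return sorted(found, key=lambda item: -abs(item[2]))


# Hypothetical customer records, invented for this illustration only.
records = {
    "visits_per_month": [1, 2, 3, 4, 5, 6],
    "spend_per_month": [12, 19, 33, 41, 48, 62],
    "age": [34, 21, 45, 29, 52, 38],
}

pairs = strongest_correlations(records)
for a, b, r in pairs:
    print(f"{a} ~ {b}: r = {r}")
```

In this invented table only the visits and spend columns clear the threshold; age is only weakly related to either, so it is not reported.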

Deep analytics. It is a data mining process that extracts and organizes bulk data into data structures suited for algorithmic analyses, the analyses thereof, and presentation of output, generally, for easy human comprehension. The real power of data analytics lies in the algorithms used in analyses since they tie the data to a set of concepts and hence “explain” the knowledge hidden in the data. It means the entire data is equivalently condensed into a program plus a subset of the data it uses as input to generate the rest.
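
The closing idea, that data can be condensed into a program plus a small subset of the data, can be illustrated with a toy Python sketch. It assumes the simplest possible regularity, a series with a constant step; the function names `condense` and `regenerate` and the sample readings are hypothetical. It is meant only to make the idea concrete, not to represent how analytics systems actually model data.

```python
def condense(series):
    """Return (first value, constant step) if the series is arithmetic, else None."""
    if len(series) < 2:
        return None
    step = series[1] - series[0]
    if all(b - a == step for a, b in zip(series, series[1:])):
        return series[0], step
    return None


def regenerate(seed, step, length):
    """The 'program': rebuild the full series from the stored seed and rule."""
    return [seed + i * step for i in range(length)]


# Hypothetical readings, invented for this illustration only.
readings = [7, 10, 13, 16, 19, 22, 25]

model = condense(readings)  # two numbers stand in for seven
rebuilt = regenerate(model[0], model[1], len(readings))
print(model, rebuilt == readings)
```

Here seven readings are replaced by one stored value and one rule, and the rule "explains" the series in the sense used above. Data with no such regularity, for which `condense` returns `None`, cannot be condensed this way.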

Deep learning. A self-learning mechanized method by which an AI machine discovers the rules by which queries can be answered in a given context, i.e., it functions as a researcher. “Deep-learning software attempts to mimic the activity in layers of neurons in the neocortex, the wrinkly 80 percent of the brain where thinking occurs. The software learns, in a very real sense, to recognize patterns in digital representations of sounds, images, and other data.”85

Arguably, when IBM’s Deep Blue computer defeated chess champion Garry Kasparov in game one of a six-game match on 10 February 1996, a threshold in AI was crossed. While Kasparov won the six-game match on this occasion, he lost the six-game rematch to IBM’s Deep Blue supercomputer, which concluded on 11 May 1997. Since then AI machines have been beating human champions in games left, right, and center: They beat human champions in Jeopardy! (February 2011), the Chinese game Go (March 2016), Poker (January 2017), once again in Go by AlphaGo Zero (October 2017; it learnt on its own from a blank slate), again in chess (December 2017; the machine taught itself in four hours), etc. Of these, the most significant is AlphaGo Zero, which learnt purely by playing against itself millions of times over. It began by placing stones on the Go board at random but swiftly improved as it discovered winning strategies. It is a big step towards building versatile learning algorithms.

The technologies related to deep data and deep learning have tremendous scope for good and bad use. For example, they are a boon for diagnosis and treatment of patients, but much science still needs to be done.86 There are related non-medical issues too: “What’s the best way to win the confidence of public and regulators?” “Is academia training enough mathematicians and medical-data scientists, to harness the potential embedded in the data?” “Genomic data sets alone have already shown their value. The presence or absence of a particular gene variant can put people in high- or low-risk groups for various diseases and identify in some cases which people with cancer are likely to respond to certain drugs.”87 But there are other equally voluminous, diverse sources of data which must be integrated in the context of a patient’s physiology, behavior, and health. And all this data must be held under the strict, non-negotiable privacy regulations governing medical data.88 Big data offers the opportunity to conduct clinical trials partly in silico.

Invasion of privacy is a rapidly increasing concern. Deep learning has the capability to do deep profiling of humans, situations, events, buying habits, etc., integrate them into a larger picture, and invade personal privacy. An innocuous example is cited in an editorial in Nature.89 The mere purchasing of scientific books can escalate into learning much more about an individual, his network, lifestyle, political leanings, and what not. Beware of doing anything that gets recorded in a database, e.g., using your mobile. You are being watched, profiled, and analyzed whether you like it or not. The Internet of Things, automation, and cognitive computing (e.g., face recognition) have already advanced well beyond the average human’s capacity. Facial-recognition technology can measure key aspects of a face, e.g., skin tone, and cross-reference them against huge databases of photographs collected by government agencies and businesses and shared on social media. The technology obviously provides extremely powerful tools for surveillance. Such technologies allow governments to watch your every move.