In Part I we laid the foundation on which quantum algorithms are built. In part II we harnessed such exotic aspects of quantum mechanics as superposition, entanglement and collapse of quantum states to show how powerful quantum algorithms can be constructed for efficient computation. In Part III (the concluding part) we discuss two aspects of quantum computation: (1) the problem of correcting errors that inevitably plague physical quantum computers during computations, by algorithmic means; and (2) a possible underlying mechanism for the collapse of the wave function during measurement.

In designing quantum algorithms, we assumed an ideal, non-interfering, execution environment. This is far from reality. Superposition and entanglement are very fragile quantum states because of the difficulty of insulating quantum computers from a variety of causes — decoherence, cosmic radiation, and spontaneous emission. Maintaining the state of a qubit for prolonged periods is difficult enough leave alone preserving the states of entangled qubits. Inevitably the computer and the environment couple to vitiate the computer’s quantum state. Attempts to eliminate or minimize this problem by hardware and software means is an on-going research area. Here we restrict ourselves to software means, i.e., build error correction algorithms by creating information redundancies in an enlarged Hilbert space for error detection and correction.

A classical bit is in either state 0 or 1. Its physical state is defined by a large number of electrons, so a few electrons going astray will not affect its state identity.1 A physical qubit is often represented by a single or a few quantum particles (e.g., electrons), hence inadvertent errors are serious. Classical digital computers have inbuilt hardware bit-parity checks and self-correcting steps to restore a bit’s state if it inadvertently flips. A qubit, on the other hand, has a continuum of quantum states and it is not obvious that similar corrective measures are generally possible. For example, a likely source of error in setting a qubit’s state is over-rotation by an imperfect unitary gate (unitary gates rotate state vectors without changing the vector’s length). Thus, a state α|0⟩ + β|1⟩ instead of becoming α|0⟩ +βeiψ|1⟩, may become α|0⟩ + βei(ψ+δ)|1⟩. While δ may be very small, it will still be wrong, and if left uncorrected, will amplify to larger errors. Thus, not only must bit flips be corrected but also its phase must be correct, else, quantum parallelism, which depends on coherent interference between the superpositions, will be lost. Another source of error – inadvertent ‘measurement’ – occurs due to unexpected computer-environment interactions where the environment interferes with the Hilbert space reserved for computation in a manner that collapses the evolving state vector. This is generally seen as a hardware design problem.

1One may view this as a form of repetition code, where each bit is encoded in many electrons (the repetition), and after each machine cycle, it is returned to the value held by the majority of the electrons (the error correction).

In Section 3.4, Part I, we alluded to “clever error correcting algorithms encoded in software for certain situations exist.” We provide a glimpse of it now.

At one time it appeared the no-cloning theorem and the general impossibility of measuring the exact state of an unknown qubit would make error correction by algorithmic means impossible. In 1995, Peter Shor showed that for a certain class of errors (that also appear in classical computers) it is possible to restore a quantum state using only partial state information.2 Since then several error-correction codes have been devised. They have some similarities with, and some striking differences from classical error-correcting codes. Indeed, classical ideas have seeded the emergence of quantum error-correction codes. A salient feature of these codes is that the original state is recovered by a skillful measurement followed by a unitary operation determined by the measured outcome.

At the algorithmic (logical) level, a qubit is a two-dimensional subspace of a higher dimensional system. At a physical level, qubits are chosen such that it is possible to detect and correct unwanted changes in their state. Programmed manipulation of the information encoded in the qubits generally requires arbitrary and precise control over the entire computing system. Since individual elements in physical devices will always have residual interactions, these must be accounted for when designing logical operations.3 Our intention here is to provide a glimpse of the central ideas that led to the development of error correction algorithms which eventually strengthened our belief that practical quantum computers can be built without waiting for certain hardware limitations to be resolved by advancing hardware technology.4

Since straightforward replication of qubits in an unknown state |ψ⟩ is not possible due to the no-cloning theorem, i.e., we cannot perform such operations as |ψ⟩ →|ψ⟩ ⊗ |ψ⟩ ⊗ |ψ⟩,5 entanglement is used to spread the information held by designated qubits over multiple qubits through an encoding. This encoding is such that under evolution by a suitable operator the appropriate qubits will recover their original state. In short, we fight entanglement with entanglement. Entanglement has no classical analog. It encodes information in the correlations among the qubits in a given Hilbert space and this space explodes exponentially with the number of qubits.

Quantum information science explores, not the frontier of short distances as in particle physics, or of long distances as in cosmology, but rather the frontier of highly complex quantum states, the entanglement frontier.6

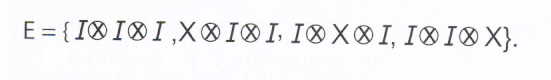

The simplest error conditions (e.g., bit flip, phase flip and their combination) assume that each qubit interacts with the environment independent of any other. This lets interaction operators to be tensor products of one-qubit interaction operators. Immediately Pauli matrices (or operators) become relevant because they span the space of 2 × 2 matrices, and the n-qubit Pauli group Pn (i.e., the group generated by tensor products of the four Pauli operators) spans the space of 2n × 2n matrices.

2Shor (1995).

3For some recent advances, see Heeres, et al (2017).

4See, e.g., Preskill (2012).

5In classical computing, 0 → 000 and 1 → 111 is no big deal.

6Preskill (2012).

The running of a quantum algorithm must be restricted to a carefully chosen sub-space of some larger Hilbert space. The trick is to detect and undo unintended anomalies as they develop in the chosen sub-space without upsetting intended computations. Fortunately, some useful correction algorithms are now known. Further, a theory of quantum error-correction has also developed which allows quantum computers to compute effectively in the presence of noise and allows communications over noisy quantum channels to take place reliably.

The interaction of the computational Hilbert space with the environment (e.g., ambient heat bath, cosmic rays, stray gas molecules, etc.) can be broadly placed under the headings dissipation and decoherence.7

Dissipation is a process by which a qubit loses energy to its environment. For example, if an excited state is used to represent a |1⟩ and a lower energy state as |0⟩, a qubit might spontaneously transition from |1⟩ to |0⟩ emitting a photon in the process. To see that dissipation is non-unitary, consider the following description of the bit-flip process:

Decoherence: Decoherence is more insidious. It is a coupling between two initially isolated quantum systems (say, the qubits and the environment) that tends to randomize the relative phases of the possible states of memory registers. This destroys the planned interference effects of a computational algorithm and entangles the state of the quantum computer with the environment. Decoherence usually occurs on a faster time-scale than dissipation.

In decoherence, information encoded in a quantum state “leaks out” to the environment. If the effects of the environment are not explicitly modeled, it would appear as if the logical qubits are no longer evolving in accordance with the Schrödinger equation. In fact, it is this coupling between a quantum system and its environment, and the resulting loss of coherence that prevents quantum effects from being evident at the macroscopic level. When that happens, that state will look locally like a classical state. Therefore, as far as a local observer is concerned, there is no difference between a classical bit and a qubit that has become hopelessly entangled with the rest of the universe.8 For example, suppose we have a qubit in the state

(|0⟩ + |1⟩)/√2 and further suppose that this qubit gets entangled with a second qubit so that the joint state of the two qubits is (|00⟩ + |11⟩)/√2. If we now ignore the second qubit, the first qubit will be in the maximally mixed state, i.e., no matter what measurement you make on it, you will get a random output. You will never see interference between the |00⟩ and |11⟩ branches of the wave function, because for interference to occur the two branches must be identical in all aspects and this cannot happen by changing the first qubit alone to make |00⟩ identical to |11⟩. To see an interference pattern it is necessary to perform a joint measurement on the two qubits together.

7Williams & Clearwater (1998), p. 214.

8See, e.g., Aaronson (2006).

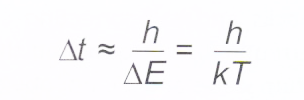

Decoherence is one of the most pervasive processes in the universe. Indeed, it is precisely because decoherence is so powerful that the quantum fault-tolerance theorem came as a shock to physicists.9 Decoherence is one more manifestation of the second law of thermodynamics. (Quantum states are very easy to destroy and very hard to put back together.) Decoherence times depend on the physical properties of the qubit, the size of the memory register, the temperature of the environment, the rate of collisions with ambient gas molecules, etc. A very rough estimate of decoherence time can be made on the basis of Heisenberg’s uncertainty principle in energy and time

where h is the Planck constant (h = 6.626070040(81)×10-34 joule-secs), k is the Boltzmann constant (k = 1.38064852(79)×10−23 joules/°K) and T is the absolute temperature of the environment.10 At room temperature (≈300 °K), this gives a typical decoherence time of about 10-14 sec. At lower environmental temperatures, decoherence times are longer. For example, at liquid helium temperatures, it is about 100 times longer than at room temperature. The obvious ways of increasing decoherence times is to chill the computer and seal it in as best a vacuum as we can, apart from choosing qubit materials which provided better decoherence times. It is imperative that any quantum computation be completed before decoherence starts and destroys valid superposition of states. Current decoherence times are typically a few microseconds.11 For an excellent tutorial on decoherence, see Marquardt & Puttmann (2008).

Presently available computing times before decoherence are very small and hence permit only a few computational steps. However, computing times can improve if suitable error correction algorithms are found. In 1996, A. M. Steane,12 and independently A. R. Calderbank and P. W. Shor13 found that some ideas used in the construction of classical linear codes can be used to correct errors in quantum computing by the clever use of quantum entanglement. The class of quantum error correction codes they devised are known as the Calderbank-Shor-Steane (CSS) codes. The codes are limited to correcting a group of errors that are unitary—spin and phase flips—which can be described by Pauli matrices; they are called depolarization errors. Such errors are large and discrete and hence the most tractable of all quantum errors.

The idea behind the CSS codes is relatively obvious, namely that quantum states could be encoded. In classical information theory, coding just refers to the use of a string of bits to stand in for the value of one bit (or perhaps a smaller block of bits). Embedding redundancy into the encoding allows at least some errors to be caught and repaired. This form of encoding is standard practice in digital communications. However, it was not at all obvious how redundancy could be used in quantum computation. The no-cloning theorem seemed to say that even the simplest kind of redundancy was not possible even in principle. Amidst skepticism, Shor14 and Steane15 independently discovered an ingenious way to use entanglement, in the service of redundancy and error correction. In fact, they used entanglement to fight entanglement!

9The fault-tolerance theorem says roughly that, if the rate of decoherence per qubit per gate operation is below a constant threshold, then it is possible, in principle, to correct errors faster than they occur and thereby perform an arbitrarily long quantum computation.

10NIST reference on Constants, Units, and Uncertainty: see https://physics.nist.gov/cgi-bin/cuu/Value?h for the Planck Constant, and https://physics.nist.gov/cgi-bin/cuu/Value?k for the Boltzmann Constant.

11Ball (2018).

12Steane (1996). See also: Steane (1996a), Steane (1998), Raussendorf (2012).

13Calderbank & Shor (1996). See also: Shor (1995).

14Shor (1995).

15Steane (1996a).

Encoding-decoding: Quantum error correction codes work by encoding quantum states in a special way and then decoding when it is required to recover the original state without error. Clearly, this assumption is vulnerable if the quantum gates used in the process are themselves noisy. Fortunately, the quantum fault-tolerance theorem mentioned earlier comes to our rescue. In fact, error correction can be implemented fault-tolerantly, i.e., in such a way that it is insensitive to errors that occur during the error detection operations themselves.

Finally, there is the threshold theorem that says that provided the noise in individual quantum gates is below a certain constant threshold it is possible to efficiently perform an arbitrarily large quantum computation. There are caveats. Nevertheless, it is a remarkable theorem indicating that noise likely poses no fundamental barrier to the performance of large-scale quantum computations. Error correction codes related to this theorem are called concatenated quantum codes. In these codes, each qubit is itself further encoded in a hierarchical tree of entangled qubits. In this way, concatenated codes allow correctable quantum computations of unlimited duration!

The key idea is that if we wish to protect a message against the effects of noise, then we should encode the message by adding some redundant information to the message. That way, even if some bits of the message get corrupted, there will be enough redundancy in the encoded message to recover the message completely by decoding. This redundancy is essential. The amount of redundancy required depends on the severity of noise.

Steps of error correction: The steps are encoding, error detection, and recovery.

Encoding: Quantum states are encoded by unitary operations into a quantum error-correcting code, formally defined as a subspace C of some larger Hilbert space. This code may subsequently be affected by noise.

Error detection: A syndrome16 measurement is made to diagnose the type of error which occurred. In effect, the measured value tells us what procedure to use to recover the original state of the code.

Recovery: Post-syndrome measurement, a recovery operation returns the quantum system to the original state of the code.

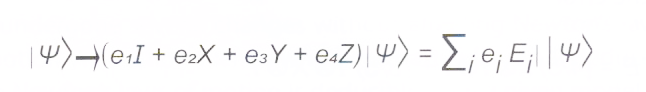

We assume that all errors are the result of quantum interactions between a set of qubits and the environment. In addition, the possible errors for each single qubit considered are linear combinations of the following: no errors (I), bit flip errors (X), phase errors (Z), and bit flip phase errors (Y). Note that these are describable by Pauli matrices. A general form of a single-bit error is thus

16Syndrome: a group or pattern of symptoms that together is indicative of a particular disease, disorder, or condition.

An error correcting code for a set of errors Ei consists of a mapping C that embeds n data bits in n + k code bits (without making any error) together with a syndrome extraction operator SC that maps n + k code bits to the set of indices of correctable errors Ei such that i = SC (Ei (C(x))). The k bits of C(x) provide the desired redundancy in the n bit message.

In the encoding stage, given an error correcting code C with syndrome extraction operator SC, an n-bit quantum state |ψ⟩ is encoded in a n + k-bit quantum state |Φ⟩ = C|ψ⟩ . Now assume that |Φ⟩ has been corrupted to ΣieiEi|Φ⟩.

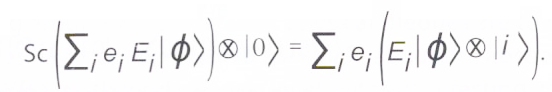

In the error detection stage, apply SC to ΣieiEi|Φ⟩ padded with sufficient |0⟩ bits,

Quantum parallelism gives a superposition of different errors each associated with their respective error index i. Next, measure the |i⟩ component of the result. This will yield a (random) value i0 and project the state to Ei0|Φ, i0⟩ . Finally, in the recovery stage, apply the inverse error map Ei0-1 to the first n + k qubits of Ei0|Φ, i0⟩ to get the corrected state |Φ⟩.

Example

Consider the simple error correcting code C that maps |0⟩ to |000⟩ and |1⟩ to |111⟩. C can correct single bit flip errors

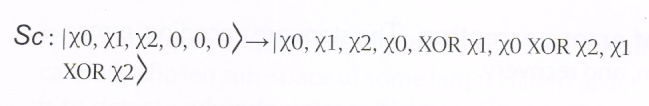

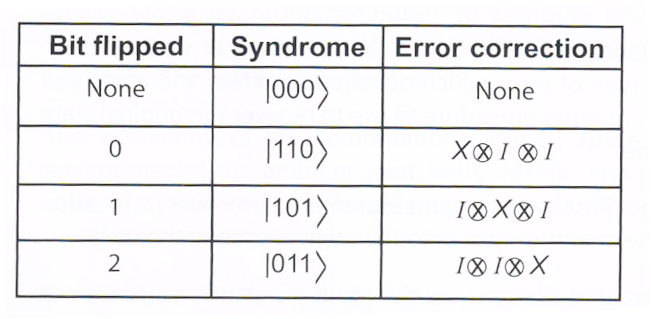

The syndrome extraction operator is

with the corresponding error correction operators shown in the table below. In this example Ei = Ei-1. Note that operations like x0 XOR x2 are parity checks.

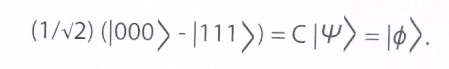

Consider the quantum bit |ψ⟩ = (1/√2) (|0⟩ – |1⟩), which is encoded as

C|ψ⟩ = |Φ⟩ = (1/√2) (|000⟩ – |111⟩),

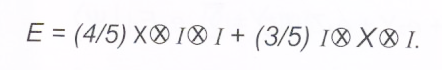

and the error

The resulting error state is

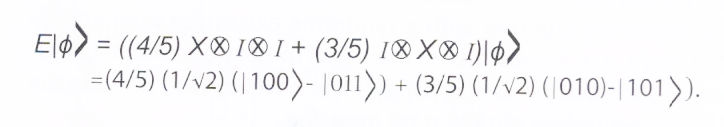

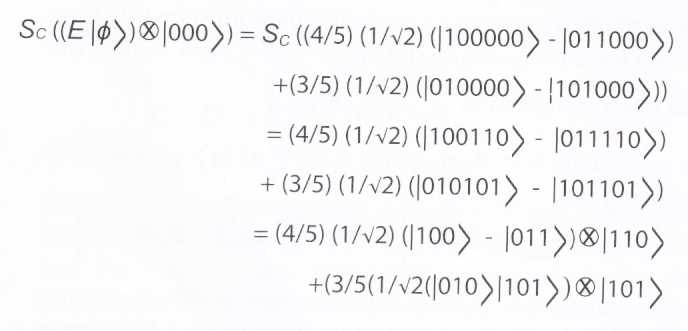

Next apply the syndrome extraction17 to (E|Φ⟩) ⊗ |000⟩,

Measuring the last three bits will yield either |110⟩ or |101⟩. Assume that |110⟩ is measured, then the state becomes

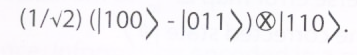

Note that almost magically a part of the error has disappeared. The remaining part of the error can be removed by applying the inverse error operator X ⊗ I ⊗ I, corresponding to the measured value |110⟩, to the first three bits, to produce

What we have demonstrated just now is that it is possible to use several entangled qubits to represent one logical qubit. Such entanglement spreads out the state of the qubit in a way that errors in any “part” of the entangled qubit can be detected, diagnosed, and corrected. Thus, while entangling qubits with the environment may introduce errors, entangling qubits with themselves might immunize them from such errors. A remarkable aspect of the CSS codes is that the process of error correction has an essential digital character to it, even though a qubit can be in a continuum of possible states. Error detection involves the performance of a series of binary-valued quantum measurements. Then these bit values provide an instruction for an error detection step, which involves a discrete rotation of a specific state. This digital character derives from the fact that any error which the environment can cause on a single qubit acts in a subspace orthogonal to the state space of the coded qubit itself. This leaves the complex coefficients, to a very high accuracy, untouched by the error process (error containment), and allows the error detection and correction steps to work in a way which is oblivious to their values.

The need for error correction will, of course, diminish as the technology required to build reliable quantum computer improves. At one time it appeared that building a 1,000 qubit computer may be out of reach. Advances in fabrication techniques, and improved control and measurement techniques, it no longer appears so.

17This is the operator, SC : |x0, x1, x2, 0, 0, 0⟩ → |x0, x1, x2, x0 XOR x1, x0 XOR x2, x1 XOR x2⟩.

There is an interesting situation where it is possible to provided passive protection against errors — as opposed to the active error protection of the quantum error correction codes discussed earlier. The model assumes that all qubits in the register are affected by the same error at the same time. This is very different from an independent error model. Does such collective dephasing occur? It does in situations where the physical dimensions of the register are smaller than the shortest wavelength of the field.

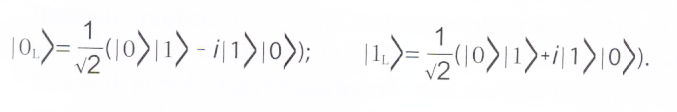

Consider the following encoding:

The states |0⟩L and |1⟩L form an orthonormal basis. Note also that |0⟩*L = |1⟩L.

Decoherence can be modeled by an operation called collective dephasing. The operation transforms |1⟩ into eiθ|1⟩ for both physical qubits at the same time and leaves |0⟩ unchanged for both of them. This results in

If all operations are carried out on qubits encoded in the same way, collective dephasing only produces a global phase change, and hence does not affect measurements. Recall that in quantum mechanics only phase differences between qubits matter.

In February 2001, Wineland’s group18 reported an experiment in which they encoded a qubit into a decoherence free subspace of a pair of trapped 9Be+ ions. They used encoding exactly like the one shown above. Then they measured the storage time under ambient conditions and under interaction with an engineered noisy environment and observed that the encoding increased the storage time by up to an order of magnitude.

The macroscopic world is classical.

The microscopic world is quantum.

Every physicist agrees that despite its amazing weirdness quantum mechanics works brilliantly. The weirdness is shrugged off except by those who are incurably curious. If you want to calculate what experiments will reveal about subatomic particles, atoms, molecules and photons, then quantum mechanics will provide you the answer. It uses mathematical formulas and axioms that have withstood innumerable tests conducted with extraordinary rigor but were essentially pulled out of a hat by its creators in the early 20th century. It does not answer questions with certainties but with probabilities. Thus, the probability of measuring its observable properties is found by applying a mathematical operator to the wave function. The wave function contains all the information that we can know about the quantum system it describes. The rule for calculating probabilities was a bold intuitive guess by Max Born as was the wave equation by Schrödinger. Neither is supported by rigorous derivation. Quantum mechanics is not just a complex framework, but also an adhoc patchwork that lacks obvious physical interpretation or justification.19 At its core, quantum mechanics is probabilistic.

18Kielpinski, et al(2001).

19Ball (2017).

By contrast, Albert Einstein’s theory of relativity rests on two intuitively simple and physically understandable principles: (1) the speed of light is constant, and (2) the laws of physics are the same for two observers moving at constant speed relative to one another. From these the rest of the theory follows. Physicists yearn to be able to state quantum theory with similar, intuitively understandable simplicity, perhaps without discarding its unique probabilistic aspect, but nevertheless explains the contradictory presence of superposition and wave function collapse in a measurement. Figure 3.1 in Part I provided a tantalizing, partial glimpse of such a possibility. Measurement is the mystery. a quantum object generally offers more encoded, revelatory options for measurement than can be seen in practice. It is this aspect that we are concerned with here. In short, our attempt is to speak about the “underlying reality” that creates those measurement probabilities.

Note that a mathematical theory is abstract. It describes any system by some indexed list of properties and their possible values and the rules by which the properties can change. It is devoid of semantics. Our approach therefore neither addresses nor alters any underlying physics measurable at the quantum mechanical level. It just suggests a mechanism for relating inputs with outputs at the subquantum level. In a sense, we are assuming that quantum mechanics approximates a deeper theory. At the level of quantum computing and the no go theorems, it is obvious that quantum superposition and entanglement enable us to encode and preserve information and retrieve it from space and time provided all processes are unitary operations.

While the formalism of quantum mechanics is widely accepted, it does not have a unique interpretation due to the incompatibility between postulates 2 and 3 (see Part I). Indeed, without postulate 3 telling us what we can observe, the equations of quantum mechanics would be just pure mathematics devoid of physical meaning. Any interpretation can come only after an investigation of the logical structure of the postulates of quantum mechanics is made. For example, Newtonian mechanics does not define the structure of matter. Therefore, how we model the structure of matter is largely an independent issue. However, given the success of Newtonian mechanics, any model we propose is expected to be compatible with Newton’s laws of motion in the realm where it rules. Since Newton, our understanding of the structure of matter has undergone several changes without affecting Newton’s laws of motion. A question such as whether a particular result deduced from Newton’s laws of motion is deducible from a given model of material structure is therefore not relevant.

Likewise, as long as our interpretation (or model) of superposition, entanglement, and measurement does not require the postulates of quantum mechanics to be altered, all the predictions made by quantum mechanics will remain compatible with our interpretation. This assertion is important because we make no comments on the Hamiltonian, which captures the detailed dynamics of a quantum system. Quantum mechanics does not tell us how to construct the Hamiltonian. In fact, real life problems seeking solutions in quantum mechanics need to be addressed in detail by physical theories built within the framework of quantum mechanics. The postulates of quantum mechanics provide only the scaffolding around which detailed physical theories are to be built. This gives us an opportunity to speculate about the abstract state vector |ψ⟩ at the sub-Planck level without affecting the postulates of quantum mechanics. The sub-Planck scale gives us the freedom to construct mechanisms for our interpretation that need not be bound by the laws of quantum mechanics because they are not expected to rule in that scale. The high point of the interpretation is that it explains the measurement postulate as the inability of a classical measuring device to measure at a precisely predefined time.

Following the principle of Occam’s razor that “entities should not be multiplied unnecessarily” or the law of parsimony, the interpretation adopted by Bera and Menon20 posits that the sub-Planck scale structure of the state vector is such that its eigenstates are dynamically time-division multiplexed. To this is added a probabilistic measurement model which determines only the instantaneous eigenstate of the system at the instant of measurement. The instant of measurement is chosen randomly by the classical measurement apparatus, once activated, within a small interval. The measured result is regarded as the joint product of the quantum system and the macroscopic classical measuring apparatus. Measurement is complete when the wave function assumes the measured state.

In the dynamic time-division multiplexing, superposed states appear as time-sliced in a cyclic manner such that the time spent by an eigenstate in a cycle is related to the complex amplitudes appearing in the state vector. Entangled states binding two or more particles appear in this interpretation as the synchronization of the sub-Planck level oscillation of the participating particles. Unlike the Copenhagen interpretation, in this interpretation it is not meaningless to ask about the state of the system in the absence of a measuring system. Indeed, the interpretation can be related to the macroscopic world we live in and hence appears more intuitive to the human mind than the other interpretations in the literature.

Consider a qubit with the state vector |ψ⟩ = α|0⟩ + β|1⟩. Its hypothesized structure in the sub-Planck scale is illustrated in Figure 13.1.

In a cycle time of Tc the qubit oscillates between the eigenstates |0⟩ and |1⟩. We assume Tc to be much smaller than Planck time (<< 10-43 sec) to allow us to interpret the state vector independently of the Schrödinger equation. (The implicit assumption here is that in some averaged sense, perhaps with some additional information, these oscillations will represent the state vector |ψ⟩, say, analogous to the case of a volume of gas in classical mechanics, where random molecular motions, appropriately averaged, represent classical pressure, temperature, and density of a volume of gas.) It is not necessary for us to know the value of Tc. We only assert that it is a universal constant. Within a cycle, the time spent by the qubit in state |0⟩ is T0 = |α|2 Tc and in state |1⟩ is T1 = |β|2Tc so that Tc = T0 + T1. Superposition is interpreted here as the deterministic linear sequential progression of the qubit’s states |0⟩ and |1⟩. A measurement on this qubit will return the instantaneous pre-measurement state of the qubit and collapse the qubit to the measured state.

20Bera & Menon (2009).

The measurement device is modeled as follows. Let Δtm be the interval during which the measuring device measures, where Δtm is only assumed to be orders of magnitude greater than Planck time. During Δtm, at some random instant, the device measures |ψ⟩. This avoids temporal bias. Thus, the source of indeterminism built in postulate 3 is posited as the classical measuring device’s inability to measure with temporal precision. The measurement concurrently induces the state vector to collapse to the measured state.

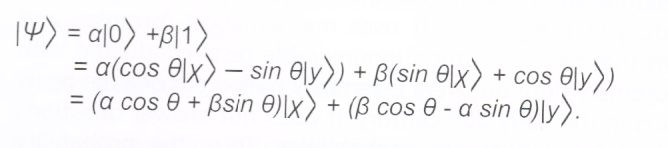

If the measurement basis differs from {|0⟩, |1⟩}, say, it is {|x⟩, |y⟩} obtained by rotating the basis {|0⟩, |1⟩} anti-clockwise by the angle θ, then |0⟩ = cos θ|x⟩ – sin θ|y⟩ and |1⟩ = sin θ|x⟩ + cos θ|y⟩ and conversely

|x⟩ = cos θ|0⟩ – sin θ|1⟩ and |y⟩ = sin θ|0⟩ + cos θ|1⟩. Figure 13.2 shows how the vectors |0⟩, |1⟩ will be observed by a measuring device in the basis {|x⟩, |y⟩} and the probabilities with which it will measure |x⟩ or |y⟩. Choosing a basis different from {|0⟩, |1⟩} means changing the values of t0 and t1 to tx and ty and correspondingly re-labeling the eigenstates to |x⟩ and |y⟩.

Thus, we can easily verify that

Further, Tx and Ty corresponding to the time durations the system will be in state |x⟩ and |y⟩, respectively, with respect to Tc in {|0⟩, |1⟩} basis is given by

Thus, if the measurement basis is {|x⟩, |y⟩}, the system, when measured, will randomly collapse to |x⟩ or |y⟩ with probability Tx/Tc = |α cos θ + β sin θ|2 or Ty/Tc = |β cos θ – α sin θ|2, respectively.

Finally, the posited model of entanglement between qubits requires that any unitary operation that causes entanglement, say, between two qubits, also synchronizes their sub-Planck level oscillations. This is shown in Figure 13.3 for the two-qubit Bell states,

Figure 13.3 Two-particle entangled systems;|ψ1⟩ (left), |ψ2⟩ (right).

Note that while entanglement results in a synchronous state for the two particles, the converse is not necessarily true. When a measurement is made on one of the entangled particles, both will collapse simultaneously. According to our model, the particles will collapse to the state they are in at the instant of measurement (such as τ1 or τ2 in Figure 13.3), which is in accord with postulate 3. We do not know how Nature might accomplish the required synchronization. It is, of course, clear that this interpretation cannot violate the uncertainty principle since Postulate 3 is not violated. Everything rests entirely on the notion of external observations because without it there are no means to ascribe a physical interpretation. We now use the teleportation algorithm (see Section 10.6 in Part II) to elucidate the interpretation.

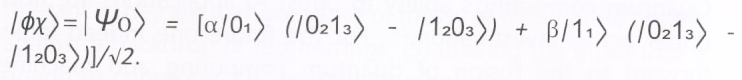

Alice wishes to teleport a qubit, labelled by subscript 1, of unknown state |Φ⟩ = α|01⟩ + β|11⟩, to Bob. In addition, there is an entangled pair of auxiliary qubits designated by subscripts 2 and 3 in the state

|x⟩ = (|0213⟩ – |1203⟩)/√2. Alice holds the qubit with subscript 2 in addition to the one with subscript 1 while Bob holds the qubit with subscript 3. Thus, the initial state of the three-qubit system is (see Figure 13.4a where the qubit subscripts (in this and subsequent Figures 13.4b and 13.4c) have been omitted since they can be inferred from their position in the state |…⟩) given by

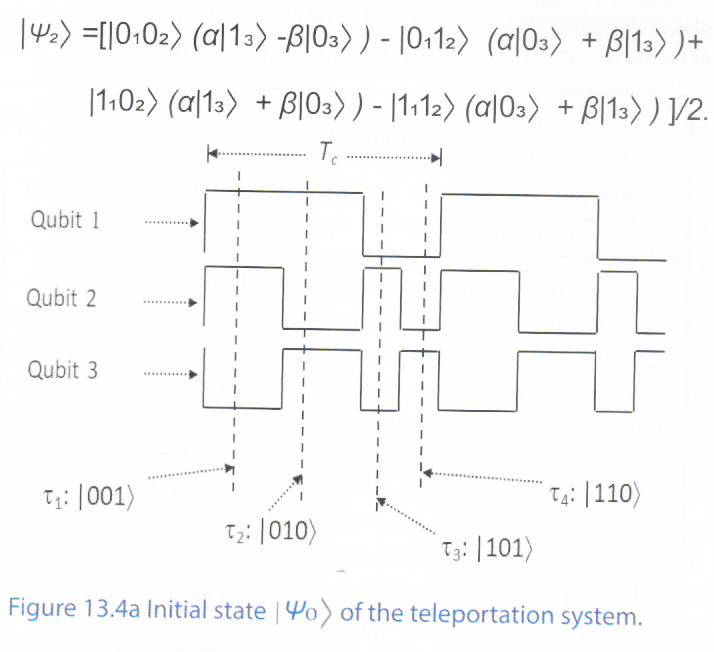

Alice now applies the Cnot gate (with qubit 2 as the target) to the qubits held by her. This changes the state of the three-qubit system to (see Figure 13.4b)

![]()

Next, Alice applies the Hadamard gate to qubit 1 which puts the three-qubit system in the state shown in Figure 13.4c.

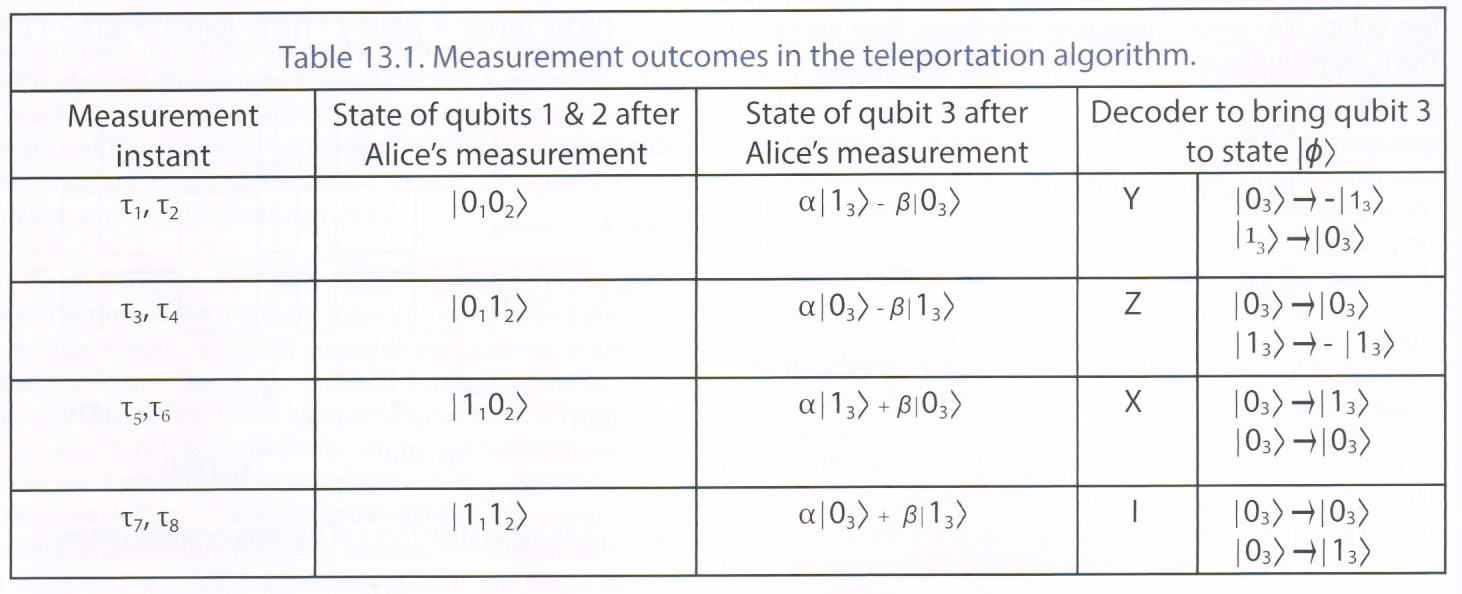

Finally, Alice makes a “combined” measurement on the two qubits she holds. Such a measurement gives access to some combined (or global) information on both qubits, but none on a single qubit, i.e., no distinction between the two qubits can be established. Her measurement will lead the pair to collapse to one of the four possible states |0102⟩, |0112⟩, |1102⟩, or |1112⟩, while the third qubit, correspondingly, will immediately collapse to the state α|13⟩ – β|03⟩, α|03⟩ – β|13⟩, α|13⟩ + β|03⟩, or α|03⟩ + β|13⟩, respectively, since it is also entangled with qubits 1 and 2. Table 13.1 shows the measurement result Alice will get depending upon the instant the measurement actually occurred, along with the post-measurement state of qubit 3 held by Bob.

Alice communicates the classical result of her “combined” measurement (|0102⟩, |0112⟩, |1102⟩, or |1112⟩) to Bob (using classical means such as telephone, email, etc.). Bob then uses the decoder (a unitary transformation) listed in Table 13.1 corresponding to the state of qubits 1 & 2 conveyed to him by Alice to bring his qubit to state |Φ⟩ = α|03⟩ + β|13⟩.

Quantum mechanics works exceedingly well in all practical applications. No example of conflict between its predictions and experiment is known. Without quantum physics, we could not explain the behavior of the solids, the structure and function of DNA, the color of the stars, the action of lasers, or the properties of super-fluids. Yet nearly a century after its inception, the debate about the relation of quantum physics to the familiar physical world continues. Why is a theory that seems to account with precision for everything we can measure still deemed lacking?21

The dream is that one day we will produce quantum computers and prove their supremacy over classical computers by their super-classical behavior. Such computers may still require quantum error corrections to achieve scalable quantum computing. There are plenty of challenges to be overcome along the way, namely the difficulty of controlling quantum systems accurately. Even small errors caused by imperfect physical quantum gates will eventually corrupt computations. There is the problem of decoherence arising from unintended correlations with the environment. This is not a problem in classical computers but can be lethal for a quantum computer if quantum superposition is irreparably altered. This is where quantum error-correcting codes play a pivotal role. Compared to error correction codes for classical computers which need only protect against bit flips, such codes for quantum computers are much more difficult because they must, in addition, protect against phase errors.

But there are a few fundamental questions that physicists would also like to answer. Two such questions are: “(1) What quantum tasks are feasible? (2) What quantum tasks are hard to simulate classically?”22 Given that we do not yet have a bridge that spans across classical and quantum physics, what if ultimately Nature really admits succinct classical descriptions of quantum states? The fact is that we are reluctant to believe that such a bridge does not exist. But researchers will not wait. Once quantum computers become technologically mature, there will be no dearth of important applications for quantum computing. Waiting in the wings are simulation of highly entangled matter, e.g., quantum antiferromagnets, exotic superconductors, complex biomolecules, bulk nuclear matter, and space-time near singularities.23 There will be plenty of opportunities to discover new and unimagined natural phenomena. Surely, there are new algorithms waiting to be discovered other than those that do no more than quantum versions of classical ones.

Quantum computing’s ability to boost AI applications are now under active investigation.24 For the future one indeed looks forward to the fusion of quantum computing and machine learning and what such a fusion may lead to. One expects some amazingly disruptive technologies to follow that may finally and convincingly show that it can outdo or at least tenaciously compete with the best of human minds in creativity and problem solving. In mere computing, computers handily outbeat all humans. Vast computing power and algorithm development has also ensured that in such things as chess and data-mining humans are easily overshadowed by classical computers. In matters such as face recognition, language translation, and medical diagnosis they are improving by the day that respective present day human experts are likely to become unemployed within a few years. Quantum computers can not only manipulate vast arrays of data in a single step (one of its principal advantages) but also spot subtle patterns that classical computers cannot because of a natural connection between the statistical nature of computing and machine learning, say, using neural networks which are designed to mimic human brains in pattern recognition.

21Zurek (2002).

22Preskill (2012).

23Preskill (2012).

24See, e.g., Musser (2018). “Neural networks and other machine-learning systems have become the most disruptive technology of the 21st century.”

An artificial “neuron” can be as simple as a bit or a qubit or as complex as you wish to make it. A neural net is a network of neurons, typically structured in arrayed layers where the first layer accepts inputs (e.g., image pixels), and the intermediate layers create various combinations of the input (say, representing structures such as edges, geometric shapes, etc.), and a final output layer that produces, say, a high-level classification of the image (say, dog, human, tree, etc.). Neural nets must first be trained on vast amounts of data to develop its “intuition” in a given area of knowledge before it makes its debut following which it can continue its learning process as it goes along its process of becoming smarter and smarter. During the learning phase, the neural net learns to make connections between inputs and outputs, and uses that learning to determine the output of an unknown input. The path that connects the input with the output through layers of neurons essentially means doing massive amounts of matrix algebra and such manipulations are exponentially faster with qubits than with classical bits. A typical neural net that solves real-life problems will have billions of neurons. Further, unlike a set of classical bits that store information locally, a set of qubits store far more information in the collective properties of those qubits. While a set of n classical bits can encode 2n different pieces of information, at any given time it can represent and process only one of them, the same number of qubits can concurrently represent and process 2n pieces of information by spreading the information among themselves.

So, it is not surprising that Google, IBM, Microsoft and several others are rushing into quantum computing and pouring money into it to resolve the technical hurdles that lie in its way of comprehensively surpassing humans. Those hurdles include (1) quantum computers operate on quantum states, not on human-readable data, and translating between the two presently is a giant task; (2) machine-learning algorithms must be noise-tolerant if they are to make sense of messy or incomplete input against a backdrop of red-herrings; (3) loading the input into a quantum computer, i.e., putting classical data into a quantum state is a task horrendous enough to be shunned; (4) once the data is fed into the computer, it must be stored in a manner that a quantum system can interact with it without collapsing the ongoing calculation; and finally (5) how to measure (read) the output because measurement will return only a single number at a time, and that too probabilistically and collapse the system in the process This means running the problem multiple times before all the information can be extracted.

Of course, as we have seen in Part II, all is not lost. For certain classes of problems exploiting quantum interference through cleverly choreographed unitary and measurement operations it is possible to eliminate incorrect answers and only the correct answer remains, waiting to be measured. Another important case is where brute force input data entry is not needed because the data is the product of a physics or chemistry experiment and data flows into the computer seamlessly. An even more intriguing possibility is that quantum machine learning systems may help in designing their successors. It is intriguing because there is enough reason to believe that human brains may themselves be hybrid quantum-classical computers, with the classical part deftly handling input-output. It may well be that quantum machine-learning systems is a better way to study human cognition, which depends on context, beliefs (biases), perceived available choices (wave function collapse?) based on the questions we ask and the sequence in which they are asked.

The central issue here is the war between calculatingly rational machines versus rationalizing humans.

Now that quantum computing, biotechnology, artificial intelligence (AI), and novel materials have all crossed their initial teething troubles stage and have matured to a level where their potential in industrial applications is no longer doubted, they are ready for exponential growth in applications. The world in now seeing a unique confluence of these advanced technologies whose potential to serve mankind beneficially vastly overshadows any other in the history of the world.

Automation in agriculture and industry caused mass extinctions of jobs and brought about profound societal changes that led to rapid urbanization. However, those job losses and those later brought about by the Internet and World Wide Web (WWW) combine were handsomely compensated for by new kinds of better paid and more interesting jobs in the service sector and high-tech industries during the industrial era. The fledgling post-industrial era, starting around 1990, saw the rapid evolution of the Internet and digital technologies that opened the way for upward mobility of the masses, but brought with it often indiscriminate surveillance by government agencies, easier violation of principles of privacy and the indiscriminate right to dissent from anywhere in the world on any issue. Future AI advances could make such activities more widespread and more powerful. That is only the beginning.

The world had not anticipated, much less prepared itself for such rapid convergence of multiple and powerful technologies—AI, robotics, quantum computing, biotechnology, cloud computing, 3D precision manufacturing, etc. Their convergence is rapidly approaching a tipping point at which significant technological changes are likely to occur suddenly and without adequate warning. Already, Google, Toyota, Facebook, Microsoft and some others with deep pockets have collectively invested billions of dollars into AI, robotics and quantum computing research because they see it as the next frontier for profits. Significant breakthroughs in AI will have a profound effect on society and social structure. The minimal upgradable science, technology, engineering and mathematical (STEM) skills required for future employment will be dramatically different and substantially higher. The emerging crisis: How will society organize itself when emotionless, rational machines and robots (with embedded synthesized biological components in them) outperform, self-improve, out-think humans, and can no longer be controlled by humans because of unshared values, interests, and contested command and control mechanisms?

The first wave of AI has already stealthily pervaded our lives via speech recognition, search engines, and image classification, and is about to do so via self-driving cars, and applications in health care. The continuing march of AI and AI-aided automation will undoubtedly transform and restructure society on a global scale. What we do not know is how the transition will pan out—who will benefit and who will be heavily marginalized, and in either case, for what duration and with what probability of bouncing back. A society dependent on indifferent AI that knows neither brutality, compassion nor any form of emotion and is essentially algorithmically driven and answerable to no human constructed institutions related to law and order, is a pristinely alien phenomenon. In this society, there will be enormous potential to create wealth through increased productivity independent of a human labor force. Currently, wealth gains derived from technology are concentrated in companies and shared by their shareholders and the C-suite. They comprise a miniscule percentage of the global population, which includes the infamous 1% highlighted by Oxfam25. The sharing of enhanced productivity generated wealth with the rest of the global population has no easy answer to the question: “What norms should we adopt that would make equitable wealth sharing possible?” What socio-economic reforms and community assistance will allow those unemployed because of AI, to contribute to society, and if necessary, to be reskilled?

The new science and technology advances in AI and robots (equipped with mimicking human sensory sensors) are now set to replace repetitive but skilled jobs hitherto performed by humans. It is not at all clear what new compensating jobs for humans can be created or even from where the jobs would come from to permit a dignified, basic life style that includes food, clothing, and shelter. All past socio-economic reforms assiduously put in place since the agricultural era are in danger of falling apart. The specter of mass employment, strife and mayhem in an era of abundance of food and automated mass manufactured luxuries may well become far too real for lack of mass purchasing power. The crisis will begin with increased inequality that hits hardest along lines of class, race and gender. Those lacking employable skills may become destitute. We have entered the era of continuous learning, just to stay employable. That may not be good news for those wishing to live a long life. These are religion-threatening, man-made developments. Calculatingly rational machines rather than rationalizing humans are on the ascendancy. It is not clear how classical human minds can divine the future or cope with it.

Turing showed that given an algorithm its execution is mechanizable and Landauer showed that computing requires a physical system (information is physical). Developing algorithms requires intelligence and a knowledge of the laws of Nature. Our present knowledge shows there is a world of difference in the way we understand Nature in terms of classical physics and quantum physics. These differences lead to fundamental differences in the way we can design and efficiently execute algorithms on physical computers. Interest in quantum computers emerged when it was shown that reversible Turing machines were possible and therefore Turing machines could be mimicked using unitary operators in the quantum world. Today, physical quantum computers exist, and the technologies needed to improve and scale them are advancing rapidly.

The algorithms described in this paper will, of course, come efficiently coded into reusable libraries for quantum computers, but there are many other novel and non-obvious algorithms waiting in the wings to emerge. It is the excitement of developing and discovering them that induces people to delve into quantum computing in the hope that phenomenally powerful algorithms beyond the reach of classical computing machines will emerge if quantum superposition, entanglement, teleportation, and state vector collapse are intelligently used in the Hilbert space. We now know that quantum computers are not just faster versions of ordinary computers, but something much stranger.26

25Oxfam (2018). “Eighty two percent of the wealth generated last year [2017] went to the richest one percent of the global population, while the 3.7 billion people who make up the poorest half of the world saw no increase in their wealth”.

26Brandt, Yannouleas & Landman (2018).

Cited URLs were last accessed on 10 May 2018.

Aaronson (2006). Aaronson, S. PHYS771 Lecture 11: Decoherence and Hidden Variables. University of Waterloo. Fall 2006, http://www.scottaaronson.com/democritus/lec11.html

Ball (2017). Ball, P. Physicists Want to Rebuild Quantum Theory from Scratch. Science, 09.02.17 https://www.wired.com/story/physicists-want-to-rebuild-quantum-theory-from-scratch/

Ball (2018). Ball, P. The Era of Quantum Computing Is Here. Outlook: Cloudy. Quanta Magazine. 24 January 2018. https://d2r55xnwy6nx47.cloudfront.net/uploads/2018/01/the-era-of-quantum-computing-is-here-outlook-cloudy-20180124.pdf

Bera & Menon (2009). Bera, R. K., and Menon, V. A New Interpretation of Superposition, Entanglement, and Measurement in Quantum Mechanics. arXiv:0908.0957 [quant-ph], 07 August 2009. https://arxiv.org/pdf/0908.0957.pdf

Brandt, Yannouleas & Landman (2018). Brandt, B. B., Yannouleas, C., and Landman, U. Interatomic Interaction Effects on Second-Order Momentum Correlations and Hong-Ou-Mandel Interference of Double-Well-Trapped Ultracold Fermionic Atoms. arXiv:1801.02295 [cond-mat.quant-gas], 16 March 2018. https://arxiv.org/pdf/1801.02295.pdf or https://arxiv.org/abs/1801.02295

Calderbank & Shor (1996). Calderbank, A.R., and Shor, P.W. Good Quantum Error-Correcting Codes Exist. Physical Review A, 54 (2), 1098-1105 (1996). http://www-math.mit.edu/~shor/papers/good-codes.pdf Also as arXiv:quant-ph/9512032. https://arxiv.org/pdf/quant-ph/9512032.pdf

Heeres, et al (2017). Heeres, R. W., Reinhold, P., Ofek, N., Frunzio, L., Jiang, L., Devoret, M. H., and Schoelkopf, R. J. Implementing a Universal Gate Set on a Logical Qubit Encoded in an Oscillator. Nature Communications, 8 (), Published online 21 July 2017. https://www.nature.com/articles/s41467-017-00045-1

Kielpinski, et al (2001). Kielpinski (2001). Kielpinski, D., Ben-Kish, A., Britton, J., Meyer, V., Rowe, M. A., Sackett, C. A., Itano, W. M., C. Monroe, C., and Wineland, D. J. Recent Results in Trapped-Ion Quantum Computing at NIST. arXiv:quant-ph/0102086v1, 16 February 2001. https://arxiv.org/pdf/quant-ph/0102086v1

Marquardt & Püttmann (2008). Marquardt, F., and Püttmann, A. Introduction to Dissipation and Decoherence in Quantum Systems. arXiv:0809.4403v1 [quant-ph] 25 September 2008. https://arxiv.org/pdf/0809.4403.pdf

Musser (2018). Musser, G. Job One for Quantum Computers: Boost Artificial Intelligence. Quanta Magazine, 08 February 2018. https://www.dwavesys.com/media-coverage/quanta-magazine-job-one-quantum-computers-boost-artificial-intelligence

Oxfam (2018). Richest 1 Percent Bagged 82 Percent of Wealth Created Last Year – Poorest Half of Humanity Got Nothing. Oxfam. 22 January 2018. https://www.oxfam.org/en/pressroom/pressreleases/2018-01-22/richest-1-percent-bagged-82-percent-wealth-created-last-year

Preskill (2012). Preskill, J. Quantum Computing and the Entanglement Frontier. arXiv:1203.5813v3, 10 November 2012. https://arxiv.org/abs/1203.5813 (Abstract), https://arxiv.org/pdf/1203.5813.pdf (Paper)

Raussendorf (2012). Raussendorf, R. Key Ideas in Quantum Error Correction. Philosophical Transactions of the Royal Society of London. Series A, 370, 4541–4565, 2012, http://rsta.royalsocietypublishing.org/content/roypta/370/1975/4541.full.pdf

Shor (1995). Shor, P. W. Scheme for Reducing Decoherence in Quantum Computer Memory. Physical Review A, 52 (4), R2493-R2496, October 1995.

Steane (1996). Steane, A.M., Multiple Particle Interference and Quantum Error Correction, Proceedings of the Royal Society of London. Series A, 452, 2551-2577 (1996). Also at arXiv (1995), http://arxiv.org/pdf/quant-ph/9601029.pdf

Steane (1996a). Steane, A.M. Error Correcting Codes in Quantum Theory. Physical Review Letters, 77, 793 (1996), https://users.physics.ox.ac.uk/~Steane/pubs/Steane_PRL95.pdf

Steane (1998). Steane, A. M. Introduction to Quantum Error Correction. Philosophical Transactions of the Royal Society of London. Series A, 356, 1739-1758, 1998.

Williams & Clearwater (1998). Williams, C. P., and Clearwater, S. H. Explorations in Quantum Computing. Springer-Verlag, New York, 1998.

Zurek (2002). Zurek, W. H. Decoherence and the Transition from Quantum to Classical—Revisited. Los Alamos Science, (27), 1-25, 2002. Available at https://arxiv.org/ftp/quant-ph/papers/0306/0306072.pdf