This introductory article explains the characteristics of an intelligent system, what it may be used for, and what challenges such systems pose when working with humans, illustrated with two case studies.

If one follows Information Technology (IT) or business news regularly, one will have come across terms like Cognitive, Cognitive Computing and Artificial Intelligence (AI). But what exactly do they mean? Why should one care? What should one be careful about when considering AI? And what is not cognitive? Is AI relevant only to industrialized countries, or is it also an opportunity for a developing country like India?

Motivated by frequent questions from people with Computer Science (CS) backgrounds and from those with non-CS but scientific backgrounds alike, this article puts forward one perspective to clear the air and help readers navigate the technical and business literature. As follow-up reading, one can refer to more technical accounts of the topic [40, 26, 11, 12].

At the outset, here are some definitions.

- Cognitive: of, relating to, or involving conscious mental activities (such as thinking, understanding, learning, and remembering) [1]

- Cognitive computing: the simulation of human thought processes in a computerized model. [2]

- Artificial intelligence (AI): the intelligence exhibited by machines or software. It is also the name of the academic field that studies how to create computers and computer software capable of intelligent behavior. [3]

The cognitive story from a computational point of view dates back at least to the 1950s, when scientists and engineers began to ponder how to make smart machines. They had already built calculators successfully to crunch large numbers during World War II, and had realized that computation, which underlies information processing, had tremendous potential to impact everyday lives. The biggest evidence of smartness around us is the human brain: we can all use it to think (exhibit intelligence), interact with others (social skills) and act independently (express autonomy). So, could we build machines that would behave similarly? Thus started the fascination with the cognitive.

But knowing about thinking is one thing, and building systems that seem to think for all practical purposes is quite another. The community divided into the cognitive computing branch, which was concerned with understanding and simulating human thought processes, and AI, which was concerned with building useful, seemingly smart, computer implementations (see the sub-areas explained in [4]). A number of results in those days showed that one could build smart systems without a deep understanding of how human thinking works. For example, the Eliza system [5] could engage people in conversations using shallow rules about human languages. There was tremendous hype that useful systems would arrive soon, and then people realized the challenges, both technical and social, in building robust intelligent systems.

The two communities have made tremendous progress over the intervening years and have started coming together again, as evidenced by tracks and papers at top AI conferences like those of the Association for the Advancement of Artificial Intelligence (AAAI) and the International Joint Conference on Artificial Intelligence (IJCAI). Hence, we will use the terms cognitive and AI interchangeably hereafter. However, has anything really changed over the past nearly seven decades? Yes, and no.

State-of-the-art Artificial Intelligence (AI) and data management techniques have been demonstrated to process large volumes of noisy data to extract meaningful patterns and drive decisions in diverse applications ranging from space exploration (NASA’s Curiosity) and game shows (IBM’s Watson in Jeopardy™) to consumer products (Apple’s Siri™ voice recognition). But much more needs to be done, as shown by the case of the Tay chatbot from Microsoft [6], which was in the news in March 2016. Tay tried to engage people using new cognitive understanding and, hopefully, better algorithms than Eliza, but inadvertently caused social grief because it over-relied on users’ inputs and could not detect that its responses were being manipulated [7]. Further, none of the recent AI systems has yet provably helped beat the more mundane, real-world challenges that the author considers the benchmark for successful usage of computation, like fighting diseases, eliminating hunger, making precious water available to the thirsty, improving commuting to work, or reducing financial fraud and corruption. Hence, we have a long way to go.

In the next section, we will review major components of an AI System. Then, in the following section, we will characterize cognitive systems based on their ability to take independent decisions. We will next discuss two case studies of how AI may impact the world with self-driving cars and human wellness, and their social contexts. Finally, we will conclude with a call to action.

As one goes about building an intelligent system for an application, one needs to consider a few issues. In this section, we review them so that they can be highlighted when concrete examples are used later in the article.

Civilizations have long dreamt of creating a caring and progressive society. A reasonable and common-sense definition of such a society may be one where people respect each other, grow according to their potential, have basic comforts and live peacefully with their environment. There can, of course, be alternative principles grounded in politics, religion or ethics, but for the purpose of the article, we will adopt a common-sense definition that is neutral to extreme interpretations. In its modern form, the United Nations has defined the Millennium Development Goals towards such a vision [13]. One may argue that the trend of sustainability, or Smart City [14] as it is popularly called, is a step towards meeting the vision, whereby Information and Communication Technology (ICT) is used to manage precious resources like water, land, air and food efficiently.

As far as technologies go, Artificial Intelligence (AI) is the technological flavor of the day. When one looks at AI for improving society, apart from the issue of how a specific technical problem will be solved, one should worry about the wider context:

- Should all insights that can be generated, be communicated?

- Should all activities that can be automated, be automated?

- What testing should be mandatory to deploy an automated product to work with humans?

- Who should be credited when something works or breaks?

Answering them requires going back to the ethical question of what we want:

- Do no harm to humans; treat them without bias from a technology perspective

- Do no harm to any life form or the environment

Unfortunately, easy as this seems, stakeholders (businesses, researchers, governments) often do not consider the full picture and pass responsibility to each other, potentially causing harm to the public in the long run.

Since an AI system works with data, data is often the logical starting point to understand how such a system may work in practice. The most common forms of data are enterprise data in large databases, social data collected by collaboration companies, sensor data from Internet-of-Things (IoT) devices and open data. While access to and pricing of data remain open problems [32], open data is often an easier option to start building an intelligent system.

Open data refers to data being made freely available for reuse. Although open data has been the norm in the academic community, it has received a major impetus in the past decade from government open data, where governments are increasingly taking initiatives to make their data available online in open formats and under licenses that allow use, reuse and redistribution. Over five hundred open data catalogs exist for city, state and federal governments that have made their data publicly available [33]. Some prominent repositories are London (UK), Chicago (USA), Washington DC (USA), Dublin (Ireland), USA (data.gov), India (data.gov.in) and Kenya (opendata.go.ke). Some of these agencies have also opened up their data as a platform, encouraging development of applications for public good. However, these datasets have to be prepared for analysis with richer data integration, semantics and contextual models.

Any good standard textbook on AI reviews the main ingredients needed to build an intelligent agent [27]. Prominent among them are techniques to gather data (speech, image and vision processing); learn patterns from data (Machine Learning); formally represent knowledge extracted from data or explicitly given by people (Knowledge Representation); reason with knowledge and take decisions balancing goals, optimizing resources and managing uncertainties (Reasoning); and carry out decisions (Execution Control and Robotics).

After an agent is built, it needs means to interact with the outside world, including people (Human Computer Interaction) and other agents (Multi-Agent Systems). The agent can be embodied within a virtual entity like a chatbot, website or mobile application, or a physical entity like a robot or device.
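To make the ingredients above concrete, here is a minimal sketch of how they might be wired together in a toy agent. It is only an illustration under stated assumptions: the class `ToyAgent`, the `alert_ratio` rule and the sample readings are all hypothetical, and averaging past readings merely stands in for real machine learning.

```python
from statistics import mean


class ToyAgent:
    """Hypothetical agent combining the ingredients listed above."""

    def __init__(self, knowledge):
        # Knowledge Representation: explicit rules given by people.
        self.knowledge = knowledge
        # Pattern learned from data; filled in by learn().
        self.baseline = None

    def learn(self, history):
        # Machine Learning (stand-in): learn a typical value from past readings.
        self.baseline = mean(history)

    def reason(self, reading):
        # Reasoning: combine the learned pattern with the explicit rule.
        if reading > self.baseline * self.knowledge["alert_ratio"]:
            return "alert"
        return "wait"

    def act(self, decision):
        # Execution: carry out the decision (here, it is only reported).
        return f"agent decided to {decision}"


# Made-up readings, for illustration only.
agent = ToyAgent(knowledge={"alert_ratio": 1.5})
agent.learn([10, 12, 11])
print(agent.act(agent.reason(20)))
```

A real agent would replace each method with far richer techniques, but the division of labor between learning, representing, reasoning and acting stays the same.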

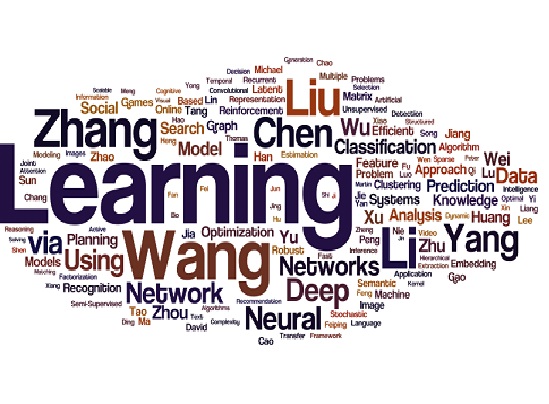

To show the historic focus in AI, Figure 1 shows a “word cloud” created using the accepted papers at AAAI 1997. (A “word cloud” shows the most frequent words, with the size of the text representing their relative frequencies. The figure shows the top 150 words.) One notices that University is prominent and that many sub-areas of AI are represented, like Knowledge, Learning, Constraints, Planning and Search.

[Fig 1.] Image is a “word cloud” created with Wordle using data about papers published at AAAI 1997.

However, in common usage by the business community and the popular press today, the term AI is used for its narrow sub-field of machine learning (ML), and even there, for its sub-field of Deep Learning (DL) [31]. Figure 2 shows a “word cloud” created using the accepted papers at AAAI 2017. One may notice that Learning (and related terms) is quite prominent, and so are the names of Chinese authors.

[Fig 2.] Image is a “word cloud” created with Wordle using data about papers published at AAAI 2017 – two decades later than 1997.
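The word-frequency count behind a word cloud can be sketched in a few lines. This is a minimal illustration and not how Wordle itself works internally: the function `top_words`, the short stop-word list and the sample titles are all assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real tool would use a much longer one.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "in", "on", "to", "with"}


def top_words(titles, limit=150):
    """Return (word, count, relative_size) for the `limit` most frequent words."""
    counts = Counter()
    for title in titles:
        for word in re.findall(r"[a-z]+", title.lower()):
            if word not in STOP_WORDS:
                counts[word] += 1
    ranked = counts.most_common(limit)
    if not ranked:
        return []
    max_count = ranked[0][1]
    # The most frequent word gets relative size 1.0; others scale down from it.
    return [(word, count, count / max_count) for word, count in ranked]


# Made-up titles for illustration only; these are not actual AAAI papers.
sample_titles = [
    "Learning to Plan with Constraints",
    "Deep Learning for Planning",
    "Search and Learning in Games",
]
for word, count, size in top_words(sample_titles, limit=3):
    print(f"{word:12s} count={count} relative_size={size:.2f}")
```

A rendering tool would then map each relative size to a font size and lay the words out on the page.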

There is no doubt that DL has revolutionized all aspects of Computer Science in the last 5 years and has become the next big buzz in the Information Technology (IT) industry [28]. However, it is still a small (but growing) piece of the full puzzle of building an intelligent system that people can use [29], let alone one that solves problems people actually face without creating new problems like bias or safety concerns. Such narrow usage of the term ignores the effort needed to bring together an intelligent system that not only learns insights but can also represent, reason, execute, monitor its environment and adapt itself to achieve short- and long-term goals. It also ignores the new work needed to understand the ethical issues, privacy concerns and security risks that systems built using black-box models learnt on large data pose when working with humans.

3.0 Levels of cognitive abilities

So, what is meant by a cognitive system when one reads the phrase today? For the purpose of the article, we will consider it a computer system that works with humans (social) and comes with different levels of thinking ability (intelligence) that it exercises on its own (independently, also known as autonomously). These can be arranged as levels in order of sophistication, akin to grading a personal secretary based on his or her proficiencies.

Level-1: Understand Data to Help

Systems at Level-1 take input data and give out potentially insightful patterns. The input can be textual, audio or video data. The output depends on the data and its format, but is broadly a set of topics (labels) and trends. A preferred characteristic of such systems is to interact and engage with end users in natural language and not a specialized language like SQL.

Technologies used by Level-1 systems include machine learning (including deep learning), data mining and rules for analysis, as well as natural language processing (NLP) and visualization for interaction with people. They help a person understand data but fall short of suggesting what to do with the insights. Such systems are also susceptible to spurious inputs, as the Tay system illustrated.

Level-2: Suggest to Act

Systems at Level-2 process input data, use a model of what a person wants to achieve (goals) and give out recommendations to act. The input data can be textual, audio or video data. The goals can be that of achieving something or maintaining a condition. The output is a prescription, which can be simple or complex depending on the uncertainties the system models.

Technologies used by such systems go beyond Level-1 and include rules, computational logics, planning, and automated reasoning. They help a person find their way through alternative decision choices consistent with their goals but rely on the person knowing what they want. For example, a system that suggests tags about who is in a photo is a Level-2 system. Such systems are susceptible to spurious data and also to people with flickering goals. For example, a Level-2 system may help a person decide how to schedule their day. But if the person misses his anniversary because he never expressed it as a priority, explicitly or implicitly, and is later confronted by his complaining wife, who is at fault? Should one blame the secretary?

Level-3: Act Autonomously

Systems at Level-3 process input data, use a model of what a person wants to achieve (goals) and take actions based on choices that will maximize the chance of reaching those goals. The input can be textual, audio or video data. The goals can be that of achieving something or maintaining a condition. The output is a prescription, which can be simple or complex depending on the uncertainties the system models. The outcome depends on how much autonomy the person has delegated to the system.

Technologies used by such systems go beyond Level-2 and include planning and execution, open world reasoning and robotics (i.e., hardware alone, software alone or both). They help a person conserve their energy by taking over mundane or unsafe tasks so that the person can focus on tasks that matter. Autonomic computing was a push in this direction for IT systems that could self-manage [8]. Level-3 systems are susceptible to spurious data, flickering human goals, changes in the environment and legal issues like liability. One may notice a similarity here to the factors under which a hired secretary may be considered to be working unsatisfactorily or without empathy. For example, a Level-3 system may help a hard-working person by setting up his meetings. But if the person gets stressed over time due to exertion and turns suicidal, who should be blamed? Did the secretary abet the incident or fail to warn the person’s family of impending harm, both of which may be punishable in some countries? Does this also make the software creator liable? A critical scientific challenge in Level-3 systems is to set the right level of autonomy [9], one that delivers value while balancing risks.
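The three levels can be summarized in a small sketch built around the scheduling example. This is only one hypothetical way to encode the distinction: the names `Level`, `Response` and `respond`, and the rule of moving meetings beyond a daily limit, are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional


class Level(IntEnum):
    UNDERSTAND = 1  # Level-1: understand data to help
    SUGGEST = 2     # Level-2: suggest to act
    ACT = 3         # Level-3: act autonomously


@dataclass
class Response:
    insights: List[str]
    recommendation: Optional[str] = None
    action_taken: Optional[str] = None


def respond(level, meetings, goal_max_meetings):
    """Toy scheduling assistant whose behavior depends on its level."""
    # Every level reports a pattern found in the data.
    response = Response(insights=[f"{len(meetings)} meetings are scheduled today"])
    # Level-2 and above also compare the data against the person's stated goal.
    if level >= Level.SUGGEST and len(meetings) > goal_max_meetings:
        extra = meetings[goal_max_meetings:]
        response.recommendation = "Consider moving: " + ", ".join(extra)
        # Only Level-3 acts on the recommendation without asking.
        if level >= Level.ACT:
            response.action_taken = "Moved: " + ", ".join(extra)
    return response


day = ["standup", "design review", "budget call"]
for level in Level:
    print(level.name, respond(level, day, goal_max_meetings=2))
```

Note that the goal (`goal_max_meetings`) must be supplied by the person; if a priority is never expressed, neither a Level-2 nor a Level-3 system in this sketch can account for it.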

3.1 Discussion

So, given the cognitive buzz, is all software cognitive? Any software takes inputs, processes them via algorithms and produces results. Cognitive software is software that is able to identify insightful patterns from input data, preferably interacting with people in a natural way to help them make good decisions, and that has an ability to act independently. We will argue that any software that does not make it to Level-1 is not cognitive. This will include much off-the-shelf software that has rigid behavior based on narrow inputs. A calculator should calculate a mathematical expression correctly, and that is perfectly fine. Cognitive software just represents a new wave of systems that promise to deliver unprecedented benefits for the data-rich world if we can leverage the positives.

AI systems have been built across the three levels, but most of them are at Level-1. Game-playing AI systems that have beaten world-class champions, like Watson and AlphaGo, are at Level-3, and their underlying technologies can come in handy in tackling serious issues like health and security. In this context, our own work [24, 25] involves building multi-modal chatbots to explain and engage people in decisions related to water usage and in exploring exoplanets (astronomy), respectively.

Advanced systems also come with a host of issues like how to judge their performance [10]. Further, like any other advanced technology (say nuclear energy or guns or gene therapy), the litmus test will be the goals towards which we are able to apply this technology – help mankind grow peacefully in balance with nature or create disputes, economic distress, joblessness and harm. And that will be the eventual mark of success for AI and cognitive systems.

In the next two sections, we will discuss two case studies of how AI is being applied to real-world challenges.

“The genius of Einstein leads to Hiroshima.” – Pablo Picasso

Consider the scenario of a self-driving car. It is a vehicle that can navigate on public thoroughfares autonomously, that is, without human intervention. Self-driving as a technology was already tested in space missions decades ago [16]. Let us explore how the technology may work among people on earth.

First, let us consider what problem automated cars solve and whether it is worth solving. Ever since cars were invented, people have learnt to drive them or have hired drivers to drive for them. As cars became complex, the skill and focus needed to manage them have increased. In many cases, people are unable to drive (due to age or cognitive challenges), unwilling to drive their vehicles (due to inconvenience) or unable to afford a driver. But is removing a category of job, drivers here, the right problem for scientists and engineers to solve when there are so many other pressing problems? The author is of the view that any technology which puts a person out of a job is bad, as it adversely affects families in the long run and creates social tensions. Economists have often argued that displaced drivers can be retrained for better jobs, but that argument has repeatedly proven hollow for other jobs. Instead of riding on the slippery slope of the jobs discussion, couldn’t door-to-door transportation be made cheaper and more convenient with novel resource sharing ideas (e.g., for vehicles and thoroughfares) that boost employment, or with technologies that augment human cognition and make transportation safer?

Second, let us consider the speed at which the underlying technologies can be brought to people. The author does not claim to be an expert who knows all aspects of an automated car, but some sub-systems are clear to an engineering eye: one has to detect the car’s environment, control the vehicle, plan a route to the destination, engage humans and know about their satisfaction with the drive. Each of these sub-systems would be immensely valuable if it were available to drivers today to assist them while they are driving. Unfortunately, with the exception of route planning, none of these sub-systems is mainstream in today’s cars. Can’t they be accelerated to market, saving lives and ushering in more convenience?

Third, let us consider the risks of automated cars. Any human-created artifact has defects which may or may not get detected and, if detected, may or may not be rectified; cars are no exception. In 2012, approximately 7.2 million motor vehicles were sold to customers in the United States, while worldwide car sales came to around 65 million units [17]. NHTSA reported that 51 million cars were recalled in 2015, adding that every year, on average, 25% of recalled vehicles are not repaired [18]. The numbers reveal that today’s cars are not defect-free and that, when defects are detected, one in four recalled vehicles is not corrected. These defects stem not only from the complex supply chain needed for a modern car but also from intentional cheating and short-cuts adopted by car manufacturers, as revealed periodically. Since driverless cars are not inherently different transportation technologies but only conventional cars with automated control modules, one may infer that future cars will continue today’s trend of defects, including, worryingly, in their newly created control modules. This raises the specter of buggy automated cars playing havoc on streets where other automated and manual drivers are present, apart from pedestrians and other occupants of the road. One can dispute the exact statistics, but it is clear that cars will not suddenly break decades of recall history and become defect-free without a drastic manufacturing advance.

Under the cloud of car defects, let us consider who will take responsibility for a car’s actions. Suppose A buys a car from manufacturer M and B rides in it. (B can be A himself, his wife, children, parents or friend, or a burglar who has just stolen the car.) The car hits C. Will C sue B, A or M for damages? The problem may lie in the way B gave instructions, in how A maintains his car or in how M built the car. Today, the driver of a car is responsible for whatever happens to it, unless the driver is below adult age, in which case the legal parent or guardian is responsible, or the driver can prove the car’s defects, in which case the manufacturer is responsible. The same accountability is needed for a victim of an automated car’s mistakes. The conundrum would be solved if the car’s control module were only assisting the “driver”, a person who today is anyway legally in control of the car for driving purposes and can override any assistance. The accountability issue is unresolved today and has the potential to cause grave social costs.

The scenario, however, is not exotic. In the recent case of Tesla’s Autopilot, where a person lost his life after delegating autonomy to the self-driving module, the system was found to be performing correctly, but its capabilities (and limitations) were not properly communicated to the driver [19]. The accountability issue in Tesla’s case was tossed between the driver and the manufacturer, and a regulator had to investigate.

The author considers self-driving a fledgling solution looking for a problem because, when applied to mundane road commuting, it can lead to job losses and economic distress in the short run and unknown outcomes in the long term. However, it has tremendous potential when applied to health, which we consider next.

Health is intrinsic to human life. Unfortunately, countries around the world are facing major health challenges, which are exacerbated by a deteriorating environment (water and air), limited resources and management issues [35]. The UN Millennium Development Goals [13] included health, and AI can play an important role in meeting health goals.

Taking the case of a developing country, India put forward a new health care policy in 2017 [34]. Its goal is “the attainment of the highest possible level of health and well-being for all at all ages, through a preventive and promotive health care orientation in all developmental policies, and universal access to good quality health care services without anyone having to face financial hardship as a consequence” (page 4, sec 2.1).

The policy promotes healthy living by taking care of medical needs cost-effectively. However, it does not directly consider issues like access to food, sanitation and environmental pollution. Furthermore, the government investment in health is quite low (3.9% of GDP; 30.5% of total health expenses; 2011 estimates [35]), causing implementation challenges.

Now consider the issues a citizen may face related to wellness. They include:

1. Access to health services: depending on the context, a person may need a planned or unplanned service at or away from their regular location. Even if the service provider is found, they may not be affordable, fully equipped or qualified to deal with the need.

2. Medical diagnosis: diseases are ever-evolving, procedures change over time and it is hard for medical practitioners to keep up.

3. Maintaining healthy living: when healthy, citizens need to monitor their food, exercise and daily routines in harmony with the environment; when under treatment, they additionally need to follow up on medical advice and medicines. Some diseases make a person’s health prone to weather swings and to conditions of air or water, curtailing their activities.

4. Financial prudence: A citizen needs means to plan for cost of health services including insurance and utilize tax incentives.

These challenges are also opportunities for cognitive systems to help people. Indeed, many AI-based digital assistants and chatbots are being built to meet them [39]. In one interesting pilot study [36], a chatbot called Jane.ai was reported to help people follow their health regimen better than alternatives.

Such systems can also be useful in helping people work closely with the environment. Take the example of water, a unique resource vital for all life. We illustrate with some personas and their water usage for wellness. Abhay may want to take a bath in the river during a religious festival and would want to know which banks of the river (religious sites, i.e., ghats) are safe to visit without getting sick. Bina may want to tap ground water or fetch river water for household activities. Chetan may want to use river water rather than ground water for irrigating his fields. Divya may wonder if fishing or vegetable growing is promising in the river catchment area to supplement her family’s earnings. These and other users routinely take decisions that could be driven by water pollution data, if it were available to them and to AI researchers.

Unfortunately, the world’s water resources are facing unprecedented stress due to increasing population and human economic activity. In [24], we showed a multi-modal interaction system called Water Advisor, consisting of a chatbot, a map interface and a document viewer, to help people explore and engage on water issues. A person can select an area, pick an activity like swimming and see if water conditions are amenable to the activity based on available open water data and quality regulations.
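The core check described above, comparing water readings for an area against limits for an activity, can be sketched as follows. This is not the Water Advisor implementation: the function `check_activity`, the parameter names and all limit values are made-up placeholders for illustration and do not reflect any actual water quality regulation.

```python
# Hypothetical limits per activity: parameter -> (minimum, maximum).
# None means no bound on that side. Values are illustrative placeholders only.
ACTIVITY_LIMITS = {
    "swimming": {"ph": (6.5, 8.5), "dissolved_oxygen_mg_l": (5.0, None)},
    "irrigation": {"ph": (6.0, 9.0)},
}


def check_activity(activity, reading):
    """Return (is_suitable, problems) for one water reading and one activity."""
    problems = []
    for parameter, (low, high) in ACTIVITY_LIMITS[activity].items():
        value = reading.get(parameter)
        if value is None:
            # Missing open data is reported rather than silently ignored.
            problems.append(f"{parameter}: no data")
        elif low is not None and value < low:
            problems.append(f"{parameter}: {value} is below {low}")
        elif high is not None and value > high:
            problems.append(f"{parameter}: {value} is above {high}")
    return (not problems, problems)


# Made-up reading for a selected area.
reading = {"ph": 7.2, "dissolved_oxygen_mg_l": 3.1}
for activity in ACTIVITY_LIMITS:
    suitable, problems = check_activity(activity, reading)
    print(activity, "suitable" if suitable else "not suitable", problems)
```

Treating missing data as a problem is a deliberate design choice in this sketch: for a decision that affects health, an assistant that stays silent about gaps in open data could mislead the person it is meant to help.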

More generally, a large part of the oceans and rivers is unexplored, and the self-driving technology discussed in the previous section, in the form of underwater drones, can also come in handy [16]. This will not only address a problem that matters to mankind, but will also lead to long-term economic gains in areas like shipping, food supplies and recreation. It is a challenge problem worthy of attention from top leaders in technology, business and government.

In this article, we reviewed the background on AI and Cognitive Systems and articulated the considerations for building an intelligent system: business value, access to data, selection of AI techniques and connection to impact on people. Further, we divided AI systems into levels based on their capability to take decisions and highlighted issues in testing them. We explored the potential of AI in the case studies of self-driving cars and health.

As more AI techniques are used to tackle societal problems, practitioners have realized that ethical guidance is needed [37]. The professional bodies in ICT, which have long had an ethical code of behavior [20], have begun to take note of the ethical responsibility AI-based systems should demonstrate when working with people [21] [22]. This includes systems being able to give an explanation, show the evolution of data, and be auditable and accountable. The new wave of technical innovations will be in implementing and scaling such guidelines.

In conclusion, AI is an exciting area which, despite being around for decades, is being revolutionized by new trends like deep learning. As pointed out recently [38], it has traditionally worked well away from humans, as in space, or in conflict with humans, as in games. However, AI has much to offer working as a partner with humans. It is relevant globally but is of particular relevance to the challenges of a developing country like India [23].

Biplav Srivastava is a Research Staff Member & Master Inventor at IBM Research and an ACM Distinguished Scientist and Distinguished Speaker. With over two decades of research experience in Artificial Intelligence, Services Computing and Sustainability, Biplav’s current focus is on promoting goal-oriented human-machine collaboration via natural interfaces using domain and user models, learning and planning. Biplav’s work has led to many science firsts and high-impact commercial innovations (up to $B+), 100+ papers and 40+ US patents issued. He co-organized the workshop track at IJCAI 2016, has co-organized over 30 workshops and given 5 tutorials at leading AI conferences. He has been running the “AI in India” Google group since 2010. More details are at: http://researcher.watson.ibm.com/researcher/view.php?person=us-biplavs

[1] Cognitive definition, http://www.merriam-webster.com/dictionary/cognitive

[2] Cognitive computing definition, http://whatis.techtarget.com/definition/cognitive-computing

[3] Artificial Intelligence definition, https://en.wikipedia.org/wiki/Artificial_intelligence

[4] AI Topics, http://aitopics.org/

[5] Eliza, https://en.wikipedia.org/wiki/ELIZA

[6] Tay, https://www.tay.ai/

[7] Tay back, http://time.com/4275980/tay-twitter-microsoft-back/

[8] Autonomic computing, https://en.wikipedia.org/wiki/Autonomic_computing

[9] Biplav Srivastava, AutoSeek: A Method to Identify Candidate Automation Steps in a Service Delivery Process, In 10th IFIP/IEEE International Symposium on Integrated Network Management (IM 2007), May 2007, Munich, Germany.

[10] Michael Schrage, How to Give a Robot a Job Review, https://hbr.org/2016/03/how-to-give-a-robot-a-job-review, March 2016

[11] Pat Langley, Artificial intelligence and cognitive systems. AISB Quarterly, 133, 1-4, 2012.

[12] John E Kelly, Computing, cognition and the future of knowing, http://www.research.ibm.com/software/IBMResearch/multimedia/Computing_Cognition_WhitePaper.pdf

[13] UN’s Millennium Development Goals, http://www.un.org/millenniumgoals/

[14] Challenges to Smart City Initiatives in India, https://www.linkedin.com/pulse/challenges-smart-city-initiatives-india-biplav-srivastava

[15] Ethics, https://en.wikipedia.org/wiki/Ethics

[16] Kanna Rajan, Advancing Autonomous Operations in the Field From Outer to Inner Space, YouTube video: https://youtu.be/MSK9Do7AQf0

[17] US Vehicle sales, http://www.statista.com/statistics/199974/us-car-sales-since-1951/

[18] Record number of cars recalled in 2015, http://www.marketwatch.com/story/record-number-of-cars-recalled-in-2015-2016-01-21

[19] Tesla’s Self-Driving System Cleared in Deadly Crash, https://www.nytimes.com/2017/01/19/business/tesla-model-s-autopilot-fatal-crash.html

[20] ACM Code of Ethics, https://www.acm.org/about-acm/code-of-ethics

[21] ACM Seven Principles to Foster Algorithmic Transparency and Accountability, http://www.acm.org/media-center/2017/january/usacm-statement-on-algorithmic-accountability

[22] IEEE’s Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems, http://standards.ieee.org/develop/indconn/ec/autonomous_systems.html

[23] Shivaram Kalyanakrishnan, Rahul Panicker, Sarayu Natarajan and Shreya Rao, Opportunities and Challenges for Artificial Intelligence in India, AIES 2018, http://bit.ly/2o9Fekj

[24] Jason Ellis, Biplav Srivastava, Rachel K. E. Bellamy and Andy Aaron, Water Advisor – A Data-Driven, Multi-Modal, Contextual Assistant to Help with Water Usage Decisions, AAAI 2018, Video – https://www.youtube.com/watch?v=z4x44sxC3zA; Paper – http://jellis.org/work/water-aaai2018-demo.pdf

[25] Jeffrey O. Kephart, Victor C. Dibia, Jason Ellis, Biplav Srivastava, Kartik Talamadupula and Mishal Dholakia, A Cognitive Assistant for Visualizing and Analyzing Exoplanets, AAAI 2018, Video – http://ibm.biz/tyson-demo; Paper – http://jellis.org/work/exoplanets-aaai2018-demo.pdf

[26] AI Magazine Special Issue on Cognitive Systems, Vol 38, No. 4, 2017, At: https://www.aaai.org/ojs/index.php/aimagazine/issue/view/220/showToc

[27] Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, 3rd Edition, 2015, http://aima.cs.berkeley.edu/

[28] Biplav Srivastava, AI for Smart City Innovations with Open Data, Tutorial at IJCAI 2015, Buenos Aires, Argentina, July 2015. Slides are at: http://www.slideshare.net/biplavsrivastava/ai-for-smart-city-innovations-with-open-data-tutorial

[29] Advances in Deep Neural Networks, Panel discussion at ACM 50 years event, June 2017, https://www.youtube.com/watch?v=mFYM9j8bGtg

[30] Judea Pearl, Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution, http://ftp.cs.ucla.edu/pub/stat_ser/r475.pdf. 2017

[31] Xavier Amatriain, Machine Learning and Artificial Intelligence Year-end Roundup, https://www.kdnuggets.com/2017/12/xavier-amatriain-machine-leanring-ai-year-end-roundup.html

[32] Logan Kugler, The War Over the Value of Personal Data, Communications of the ACM, Vol. 61 No. 2, Pages 17-19, 2018.

[33] Data Portals, an online catalog of open data, http://dataportals.org/portal/datacatalogs-org

[34] India’s 2017 Health Care Policy, http://www.mohfw.nic.in/index1.php?lang=1&level=1&sublinkid=6471&lid=4270

[35] Situation Analyses, Backdrop to National Health Policy 2017, https://mohfw.gov.in/sites/default/files/71275472221489753307.pdf

[36] Grzegorz Przybycien, Can Artificial Intelligence on Facebook Messenger persuade students to develop regular exercise habit? Persuasive design in digital marketing pilot study of Jane.ai chatbot, MBA Thesis, Univ of Warwick, 2017, at https://www.dropbox.com/s/pxfgcwuy1uwjjkz/jane.ai%20dissertation%20ebook.pdf?dl=0.

[37] Aylin Caliskan, Joanna J. Bryson, Arvind Narayanan, Semantics derived automatically from language corpora contain human-like biases, Science, 2017.

[38] Subbarao Kambhampati, Challenges of Human-Aware AI Systems (AAAI 2018 Presidential Address), 2018, At: https://www.youtube.com/watch?v=Hb7CWilXjag

[39] Dyllan Furness, The chatbot will see you now: AI may play doctor in the future of healthcare, 2016, https://www.digitaltrends.com/cool-tech/artificial-intelligence-chatbots-are-revolutionizing-healthcare/

[40] J. O. Gutierrez-Garcia and E. López-Neri, Cognitive Computing: A Brief Survey and Open Research Challenges, 3rd International Conference on Applied Computing and Information Technology/2nd International Conference on Computational Science and Intelligence, Okayama, 2015, pp. 328-333.