Population growth, increasing incomes, and rapid urbanization in developing countries are expected to cause a drastic rise [1] in food demand. This projected rise poses several challenges to agriculture. Owing to a continuous decline in global cultivable land [2], increasing the productivity of existing agricultural land is essential. This need has led the scientific community to focus its efforts [3, 4, 5] on developing efficient and sustainable ways to increase crop yield. To this end, precision agriculture techniques have attracted considerable attention. Precision agriculture is a set of methods to monitor crops, gather data, and carry out informed crop management tasks, such as applying the optimum amount of water and selecting suitable pesticides, while reducing environmental impact. These methods involve the use of specialized devices such as sensors, UAVs, and static cameras to monitor the crops. Accurate crop monitoring goes a long way in helping farmers make the right choices to obtain the maximum yield. Plant phenotyping, a rapidly emerging research area, plays a significant role in understanding crop-related traits. Plant phenotyping is the science of characterizing and quantifying the physical and physiological traits of a plant. It provides a quantitative assessment of the plant’s properties and its behavior under various environmental conditions. Understanding these properties is crucial for effective crop management.

Research in plant phenotyping has grown rapidly thanks to the availability of cost-effective and easy-to-use digital imaging devices such as RGB, multispectral, and hyperspectral cameras, which have facilitated the collection of large amounts of data. This influx of data, coupled with the use of machine learning algorithms, has fueled the development of various high-throughput phenotyping tools [refs] for tasks such as weed detection, fruit/organ counting, disease detection and yield estimation. A traditional machine learning pipeline typically consists of feature extraction followed by a classification/regression module for prediction. While such techniques have helped build sophisticated phenotyping tools, they are known to lack robustness: they rely heavily on handcrafted feature extraction and manual hyperparameter tuning. As a result, unless feature extraction is carefully done under a domain expert’s supervision, they tend to perform poorly in uncontrolled environments such as agricultural fields, where factors such as lighting, weather and exposure often cannot be regulated. Hence, feature extraction has been one of the major bottlenecks in the development of efficient high-throughput plant phenotyping systems.
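To make the classical pipeline concrete, here is a minimal sketch of a handcrafted-feature approach to separating plant pixels from soil. It assumes the Excess Green index (ExG = 2g - r - b, computed on chromatic coordinates), a widely used vegetation feature that is not one of the cited methods, and a hand-picked threshold of 0.1 that is purely illustrative. That threshold is exactly the kind of manually tuned parameter that tends to break when field lighting changes.

```python
def excess_green(pixel):
    """Handcrafted feature: Excess Green index on chromatic (normalized) coordinates."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no chromatic information
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn


def classify_pixels(image, threshold=0.1):
    """Rule-based 'classifier': vegetation (1) if ExG exceeds a hand-tuned threshold, else soil (0)."""
    return [[1 if excess_green(px) > threshold else 0 for px in row] for row in image]


# A tiny 2x2 RGB "image": two green (plant) pixels and two brownish (soil) pixels.
image = [
    [(40, 160, 30), (150, 110, 80)],
    [(120, 90, 60), (60, 180, 50)],
]
print(classify_pixels(image))  # [[1, 0], [0, 1]]
```

A deep network would instead learn both the feature and the decision rule from labeled examples.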

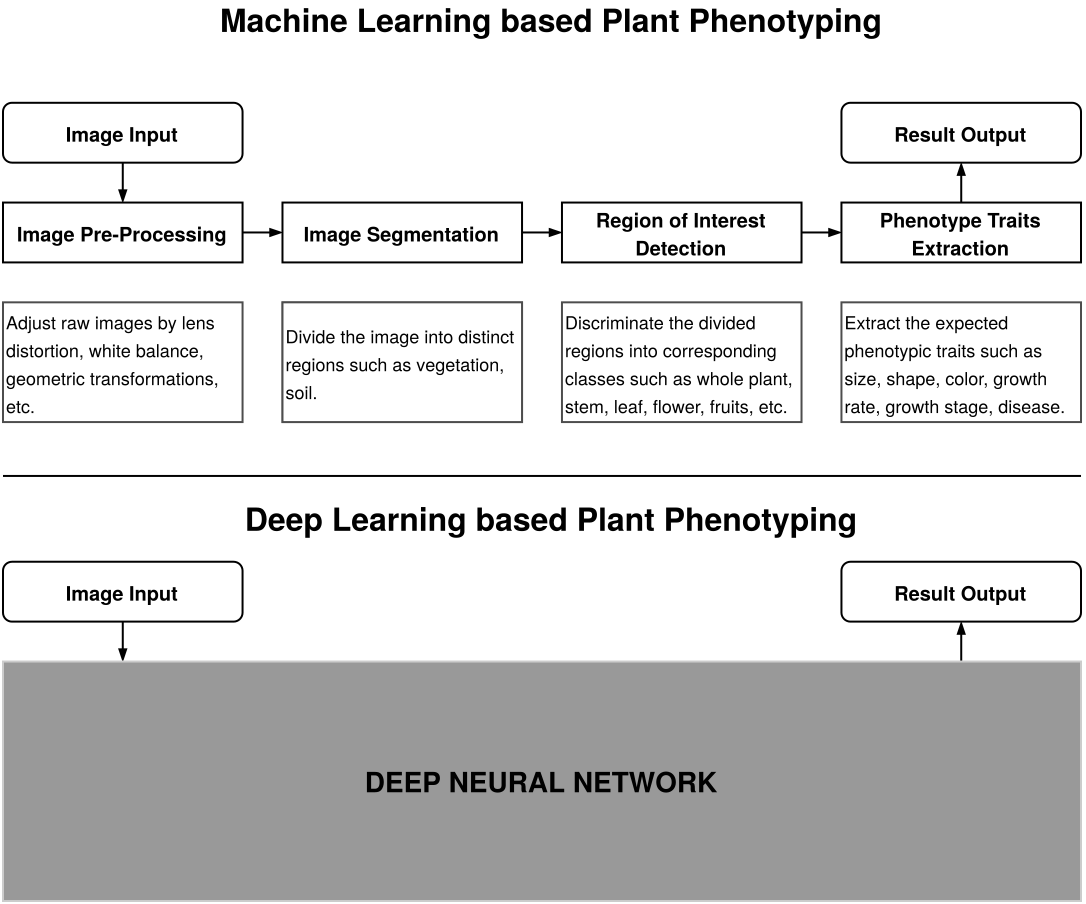

Advancements in deep learning, a sub-field of machine learning that allows for automatic feature extraction and prediction on large-scale data, have led to a surge in the development of visual plant phenotyping methods. Deep learning is particularly well known for its effectiveness in vision-based tasks such as image classification, object detection, semantic segmentation, and scene understanding. Conveniently, many of these tasks form the backbone of plant phenotyping tasks such as disease detection, fruit detection, and yield estimation. Figure 1 illustrates the difference between machine learning based and deep learning based plant phenotyping. We believe that the expressive power and robustness of deep learning systems can be effectively leveraged by plant researchers to identify complex patterns in raw data and devise efficient precision agriculture methodologies. The purpose of this survey is to give readers a bird’s eye view of the advancements in deep learning based plant phenotyping, help them understand the existing issues, and familiarize them with some of the open problems that warrant further research.

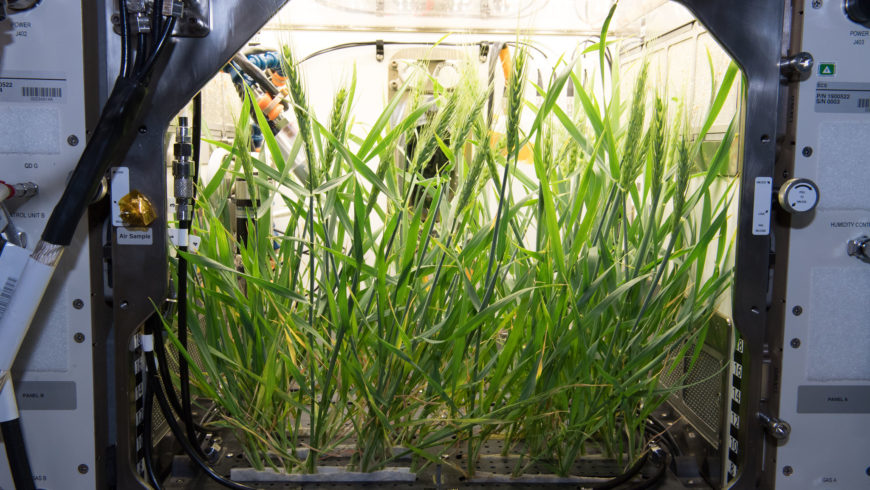

Plant phenotyping is the science of quantifying the physical and physiological traits of a plant. Plant phenotyping mainly benefits two communities: farmers and plant breeders. By better understanding the traits of the crop, a farmer can optimize crop yield by making informed crop management decisions. Similarly, understanding the crop’s behavior is crucial for plant breeders to select the best possible crop variety for a given location and environment. In the past, plant phenotyping was a manual endeavor. Manually observing a small set of crop samples and periodically reporting observations was slow, labor-intensive and inefficient, and the low throughput of these methods impeded progress in plant breeding research. However, modern data acquisition methods using various sensors, cameras and UAVs (Unmanned Aerial Vehicles), coupled with advances in machine learning techniques, have resulted in high-throughput plant phenotyping methods that can be effectively used for precision agriculture.

Depending on the method of data collection, plant phenotyping techniques can be classified into ground based, aerial and satellite based methods; Figure 6 contrasts these classes. In ground based phenotyping, high precision sensors are embedded in handheld devices or mounted on movable vehicles to measure useful traits such as plant height, plant biomass, crop development stage and crop yield. Movable phenotyping vehicles like BoniRob [6] have been developed on which RGB cameras, hyperspectral cameras, LIDAR sensors, GPS receivers and other sensors can be mounted. Aerial based methods typically involve the use of Unmanned Aerial Vehicles (UAVs) for crop monitoring. Recent advancements in UAVs and high resolution cameras have allowed researchers to obtain high quality crop images, and tasks such as weed mapping, crop yield estimation, plant disease detection and pesticide spraying have been effectively carried out by UAVs. Satellite based plant phenotyping involves remote sensing of agricultural plots from satellites such as Landsat-8 and WorldView-3. Satellite based methods have typically been used for crop health monitoring over large areas such as a region or country. However, the cost of obtaining satellite images, the effect of clouds, and the time gap between capturing and obtaining images limit their applicability for high throughput plant phenotyping in precision agriculture.

With a variety of data collection tools at our disposal, large amounts of image and sensor data have been made available for plant phenotyping research. The next section introduces deep learning, a set of methods which can effectively recognize useful patterns in huge datasets.

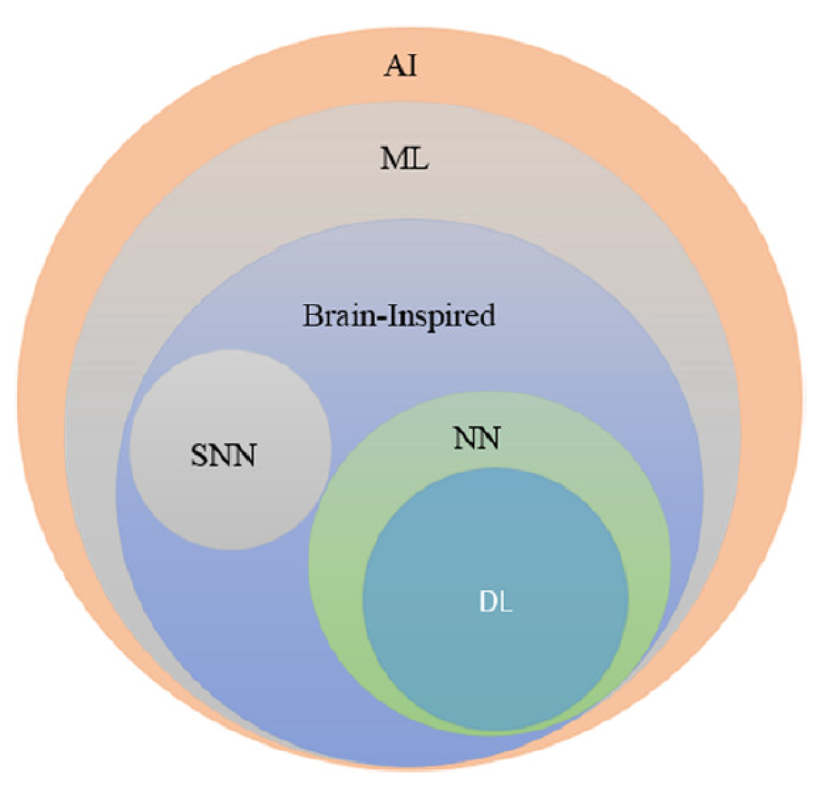

Machine Learning (ML) is a subset of Artificial Intelligence (AI) that deals with algorithms that learn from observational data without being explicitly programmed. ML has revolutionized several fields in the last few decades. Neural Networks (NN) [7, 8, 9] are a sub-field of ML, and it was this sub-field that spawned Deep Learning (DL). Among the most prominent factors behind the boom in deep learning are the appearance of large, high-quality, publicly available labelled datasets and the rise of parallel GPU computing, which enabled the transition from CPU-based to GPU-based training and thus significantly accelerated the training of deep models. Since its resurgence in 2006 [10], the DL community has created ever more complex and capable algorithms, some of which surpass human performance on several tasks. The "deep" in deep learning refers to the deep, hierarchical architecture of its models. DL models stack several layers of non-linear information processing units, called Artificial Neurons (AN), between the input and output layers. Stacking these ANs hierarchically allows the network to learn features and recognize patterns through efficient learning algorithms. NNs with enough hidden units have been proven to be universal approximators of continuous functions [9], which makes DL largely task agnostic [11]. Figure 2 depicts the taxonomy of AI.
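The benefit of stacking non-linear units can be seen on a tiny example. The sketch below is a 2-2-1 network with ReLU hidden units that computes XOR, a function no single linear layer can represent. The weights are hand-set following a well-known textbook construction rather than learned, and the helper names (`dense`, `mlp`) are illustrative.

```python
def relu(v):
    """Element-wise non-linearity applied by each hidden neuron."""
    return [max(0.0, x) for x in v]


def dense(x, weights, bias):
    """One layer of artificial neurons: y_j = sum_i W[j][i] * x[i] + b[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


# Hand-set (not learned) weights for a 2-2-1 network that computes XOR.
W1, b1 = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
W2, b2 = [[1.0, -2.0]], [0.0]


def mlp(x):
    hidden = relu(dense(x, W1, b1))   # non-linear hidden layer
    return dense(hidden, W2, b2)[0]   # linear output unit


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mlp(x))  # outputs 0.0, 1.0, 1.0, 0.0
```

Removing the `relu` call collapses the two layers into a single linear map, which can no longer produce this output pattern.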

Deep learning approaches may be categorized as supervised, semi-supervised (or partially supervised), and unsupervised. (Reinforcement Learning (RL), or Deep RL (DRL), is often treated as a semi-supervised or sometimes unsupervised approach.) Supervised learning techniques use labeled data. In supervised DL, the environment includes sets of input and corresponding output pairs (often in large amounts), a criterion called the cost or loss function that evaluates model performance, and an optimization algorithm that minimizes the cost function with respect to the given data. Semi-supervised learning techniques use only partially labeled datasets (usually a small amount of labeled data and a large amount of unlabeled data). The popular Generative Adversarial Networks (GAN) [13] can be used as semi-supervised learning techniques. Unsupervised learning systems function without labeled data. In this case, the system learns internal representations or important features to discover unknown relationships or structure within the input data. Clustering, dimensionality reduction, and generative techniques are often considered unsupervised learning approaches.
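The three ingredients of supervised learning named above (labeled input/output pairs, a cost function, and an optimizer that minimizes it) fit in a few lines. This minimal sketch assumes a one-variable linear model, mean squared error as the cost function, and plain gradient descent as the optimizer. The learning rate and step count are illustrative choices. Deep networks replace the linear model with many stacked layers but follow the same loop.

```python
def mse(w, b, xs, ys):
    """Cost (loss) function: mean squared error of the linear model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lr=0.05, steps=2000):
    """Optimizer: plain gradient descent on the MSE loss."""
    w, b, n = 0.0, 0.0, len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))  # dLoss/dw
        grad_b = 2.0 / n * sum(errors)                              # dLoss/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Labeled input/output pairs generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit(xs, ys)
print(round(w, 3), round(b, 3), round(mse(w, b, xs, ys), 6))  # 2.0 1.0 0.0
```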

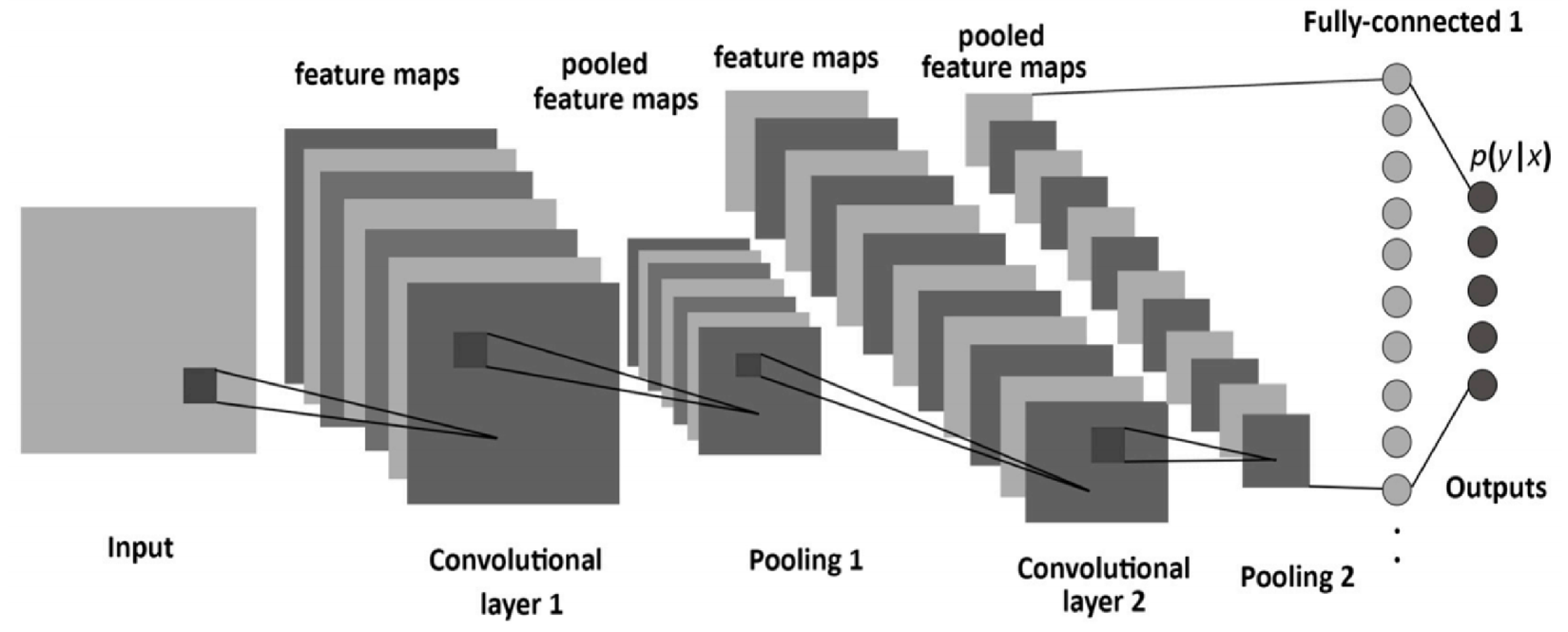

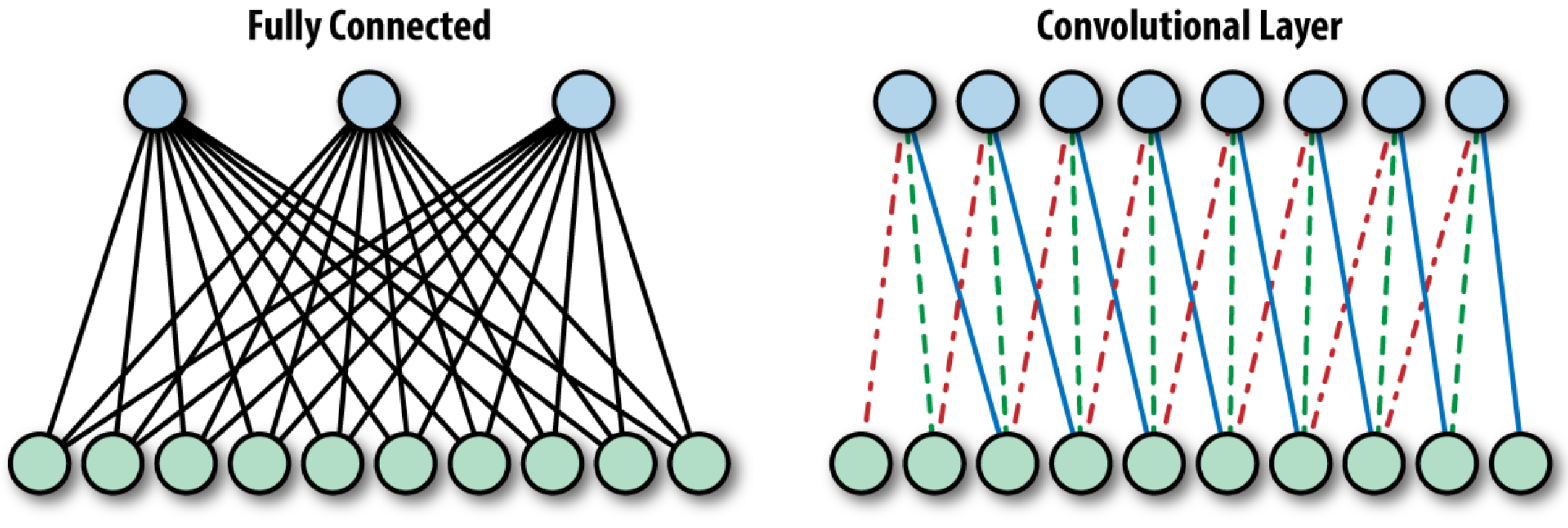

Convolutional Neural Networks (CNN) are a subclass of neural networks that take advantage of the spatial structure of their inputs. This network structure was first proposed by Fukushima in 1988 [15]. It was not widely used then, however, due to the limits of the computing hardware available for training the network. In the 1990s, LeCun et al. [16] applied a gradient-based learning algorithm to CNNs and obtained successful results on the handwritten digit classification problem. CNNs have been extremely successful in computer vision applications such as face recognition, object detection, vision for robotics, and self-driving cars. CNN models have a standard structure consisting of alternating convolutional and pooling layers (often each pooling layer is placed after a convolutional layer). The last layers are a small number of fully connected layers, and the final layer is a softmax classifier, as shown in Figure 3. Every layer of a CNN transforms its input volume into an output volume of neuron activations, eventually leading to the final fully connected layers, which map the input data to a 1D feature vector. In a nutshell, a CNN comprises three main types of neural layers, namely (i) convolutional layers, (ii) pooling layers, and (iii) fully connected layers, each of which plays a different role.
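The final softmax classifier converts the raw scores produced by the last fully connected layer into class probabilities that sum to 1. A minimal sketch, assuming three hypothetical class scores:

```python
import math


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.1]                     # hypothetical scores for three classes
probs = softmax(scores)
print([round(p, 3) for p in probs])          # [0.659, 0.242, 0.099]
```

Subtracting the maximum score before exponentiating leaves the result unchanged but avoids overflow when scores are large.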

(i) Convolution Layers. In the convolutional layers, a CNN convolves the whole image, as well as the intermediate feature maps, with different kernels, generating various feature maps. Because each kernel is small and shared across all spatial positions, a convolutional layer needs far fewer parameters than a fully connected layer on the same input. Exploiting these advantages, several works have proposed convolution as a substitute for fully connected layers in order to attain faster learning times. The difference between a fully connected layer and a convolutional layer is shown in Figure 4.
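A minimal sketch of what a single convolutional kernel computes, assuming a one-channel input, stride 1 and no padding. As in most deep learning libraries, the operation is implemented as cross-correlation (the kernel is not flipped). The edge-detecting kernel is a hypothetical example; in a CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (stride 1, no padding) of a single-channel image.

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge_kernel = [[-1, 1], [-1, 1]]   # responds to dark-to-bright left-to-right transitions
print(conv2d(image, edge_kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The same four kernel weights are reused at every position. A fully connected layer mapping these 16 input pixels to the 9 outputs would instead need 16 x 9 = 144 weights.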

(ii) Pooling Layers. Pooling layers reduce the spatial dimensions (width and height) of the input volume before it is passed to the next convolutional layer; they do not affect the depth dimension of the volume. The operation performed by this layer is also called subsampling or downsampling, as the reduction in size entails a loss of information. However, such a loss can be beneficial, because it forces the network to retain only the most salient features. In addition, the decrease in size reduces the computational overhead of subsequent layers and helps counter overfitting. Average pooling and max pooling are the most commonly used strategies. A detailed theoretical analysis of max pooling and average pooling performance is given in [18], whereas [19] showed that max pooling can lead to faster convergence, select superior invariant features, and improve generalization.
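The two common pooling strategies can be compared on a single 4x4 feature map. This sketch assumes non-overlapping 2x2 windows (window size equal to stride), which is a common but not universal configuration.

```python
def pool2d(fmap, size=2, mode="max"):
    """Non-overlapping pooling (window = stride = size) over one feature map.

    Applied channel by channel, so the depth of the volume is unchanged.
    """
    reducers = {"max": max, "average": lambda window: sum(window) / len(window)}
    if mode not in reducers:
        raise ValueError(f"unknown pooling mode: {mode!r}")
    reduce = reducers[mode]
    return [
        [
            reduce([fmap[i + u][j + v] for u in range(size) for v in range(size)])
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 1, 7, 8],
]
print(pool2d(fmap, mode="max"))      # [[4, 2], [2, 8]]
print(pool2d(fmap, mode="average"))  # [[2.5, 1.0], [1.0, 6.5]]
```

Both strategies halve the width and height. Max pooling keeps the strongest response in each window, while average pooling keeps the mean response.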

(iii) Fully Connected Layers. Following several convolutional and pooling layers, the high-level reasoning in the neural network is performed via fully connected layers. As their name implies, neurons in a fully connected layer are connected to all activations in the previous layer, so their activations can be computed with a matrix multiplication followed by a bias offset. Fully connected layers eventually convert the 2D feature maps into a 1D feature vector. The learned vector representation can either be fed forward for classification or be used as a feature vector for further processing.
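A minimal sketch of the last stage of a CNN, assuming two pooled 2x2 feature maps and hypothetical weights. The maps are flattened into a 1D vector, and each fully connected neuron computes a weighted sum of all inputs plus a bias, which is one row of a matrix multiplication.

```python
def flatten(fmaps):
    """Flatten a list of 2D feature maps (channels x H x W) into a 1D vector."""
    return [value for fmap in fmaps for row in fmap for value in row]


def fully_connected(x, weights, bias):
    """Every output neuron sees every input activation: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


# Two pooled 2x2 feature maps -> an 8-dimensional vector.
fmaps = [[[4, 2], [2, 8]], [[1, 0], [0, 1]]]
x = flatten(fmaps)
print(x)  # [4, 2, 2, 8, 1, 0, 0, 1]

# Hypothetical weights for 3 output neurons (3 x 8 matrix) and their biases.
W = [
    [1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 1],
    [0.5] * 8,
]
b = [0.0, -1.0, 0.5]
print(fully_connected(x, W, b))  # [4.0, 8.0, 9.5]
```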

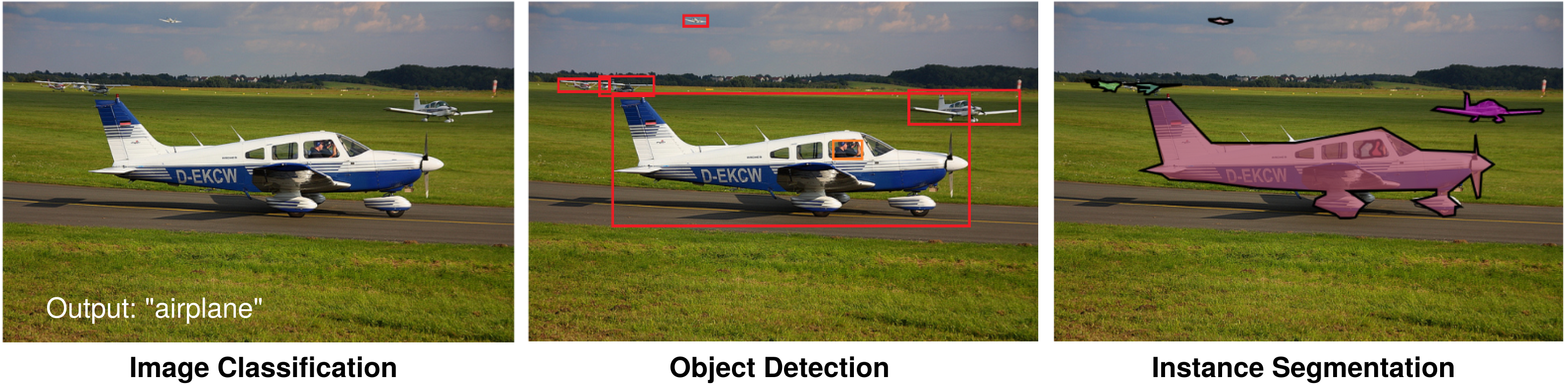

Object detection and segmentation are two of the most important and challenging branches of computer vision. Both aim to locate instances of semantic objects of certain classes and have been widely applied in real-world settings such as security monitoring and autonomous driving. In a nutshell, object detection is the task of identifying and locating objects (with bounding boxes) in images, while segmentation assigns a class label (e.g., dog, cat, airplane) to each pixel of an image. We refer readers to [20, 21] for more information on these tasks. Figure 5 visually contrasts these tasks.
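The difference in output format can be illustrated with a toy binary mask. This sketch assumes a single object and a mask given as a list of rows. It derives, from the per-pixel segmentation, the bounding box that a detector would output. The box can always be derived from the mask but not the other way around, which is why segmentation labels are richer and costlier to produce.

```python
def mask_to_box(mask):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the 1-pixels of a binary mask."""
    ys = [i for i, row in enumerate(mask) for v in row if v]
    xs = [j for row in mask for j, v in enumerate(row) if v]
    if not xs:
        return None  # empty mask: nothing to detect
    return min(xs), min(ys), max(xs), max(ys)


# Per-pixel segmentation of an irregular, leaf-like object (1 = object, 0 = background).
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = mask_to_box(mask)
x0, y0, x1, y1 = box
print(box)                               # (1, 1, 3, 3)
print(sum(map(sum, mask)))               # 6 object pixels
print((x1 - x0 + 1) * (y1 - y0 + 1))     # 9 pixels inside the box
```

The box covers 9 pixels, but only 6 belong to the object, so a detector's output says where the object is but not its exact shape.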

Automation in agriculture and robotic precision agriculture require a great deal of information about the environment, the field, and the condition and phenotype of individual plants. The increasing availability of such data has allowed robotic tools to be used successfully in real-world conditions. Taking advantage of this data, combined with robots such as BoniRob [6] that navigate autonomously in fields, computer vision with deep learning has played a prominent role in realizing autonomous farming. Previously laborious jobs of tracking measurements of interest, such as plant growth rate, plant stem position, biomass, leaf count, leaf area, inter-crop spacing and crop plant count, can now be done almost seamlessly.

A crucial prerequisite for selective, plant-specific treatments is that farming robots be equipped with an effective plant identification and classification system that tells the robot where and when to trigger its actuators to perform the desired action in real time. For example, weeds generally have no value as food, nutrition or medicine, yet they grow rapidly and compete with crops for nutrients and space. Inefficient processes such as hand weeding have led to significant losses and increasing labor costs [23], which is why much research is being done on crop vs. weed classification and weed identification [24, 25, 26, 27, 28, 29], as well as plant seedling classification [30, 31]. Such systems improve the efficiency of precision weed control by modulating herbicide spraying according to the level of weed infestation.
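To illustrate modulating spraying according to weed infestation, the sketch below assumes a binary weed mask produced by some crop/weed segmentation model. It splits the mask into grid cells and flags cells whose weed cover exceeds a threshold. The function name, the cell size and the 25% threshold are hypothetical, not values from the cited works.

```python
def spray_map(weed_mask, cell=2, threshold=0.25):
    """Split a binary weed mask into cell x cell tiles and flag tiles whose
    weed cover fraction exceeds `threshold` for herbicide application."""
    rows, cols = len(weed_mask), len(weed_mask[0])
    decisions = []
    for i in range(0, rows, cell):
        line = []
        for j in range(0, cols, cell):
            tile = [weed_mask[u][v]
                    for u in range(i, min(i + cell, rows))
                    for v in range(j, min(j + cell, cols))]
            line.append(sum(tile) / len(tile) > threshold)
        decisions.append(line)
    return decisions


# Hypothetical per-pixel output of a crop/weed segmentation model (1 = weed).
weed_mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
print(spray_map(weed_mask))  # [[True, False], [False, False]]
```

Only the top-left cell (75% weed cover) is sprayed; the bottom-right cell sits exactly at the threshold and is skipped.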

Crop detection in the wild is arguably the most crucial step in the pipeline of several farm management tasks such as visual crop categorization [33], real-time plant disease and pest recognition [34], automatic picking and harvesting robots [35], health and quality monitoring of growing crops [36] and yield estimation [37]. However, deep learning networks that achieve state-of-the-art performance in other research fields are often not directly suitable for agricultural crop management tasks such as irrigation [38], picking [39], pesticide spraying [40], and fertilization [41]. The main cause is the lack of a diverse set of public benchmark datasets specifically designed for various agricultural missions. Some of the few rich datasets available are CropDeep [42] for detection, multi-modal datasets such as the Rosette plant or Arabidopsis datasets [43, 44, 45], Sorghum-Head [37], Wheat-Panicle [46], Crop/Weed segmentation [24], and Crop/Tassel segmentation [47]. Figure 7 contains some examples from the CropDeep [42] dataset. Figure 8 depicts the multi-modal annotations provided in the Rosette Plant Phenotyping dataset [43, 44], namely annotations for detection, segmentation and leaf centers, along with otherwise rarely available metadata.

Efficient yield estimation from images is also one of the key tasks that allow farmers and plant breeders to accurately quantify the overall output of their farming systems. Recent efforts in panicle or spike detection [48, 49, 50, 37], leaf counting [51] and fruit detection [52], as well as pixel-wise segmentation tasks such as panicle segmentation [53, 54], have shown very promising results in this direction.

Modern technologies have given human society the ability to produce enough food to meet the demand of more than 7 billion people. However, food security remains threatened by a number of factors, including climate change [55], the decline in pollinators [56], plant diseases [57], and others. Plant diseases are not only a threat to food security at the global scale, but can also have disastrous consequences for smallholder farmers whose livelihoods depend on healthy crops. India loses 35% of its annual crop yield due to plant diseases [58]. In the developing world, more than 80 percent of agricultural production is generated by smallholder farmers [59], and reports of yield loss of more than 50% due to pests and diseases are frequent [60]. Furthermore, the largest fraction of hungry people (50%) live in smallholder farming households [61], making smallholder farmers a group that is particularly vulnerable to pathogen-derived disruptions in food supply.

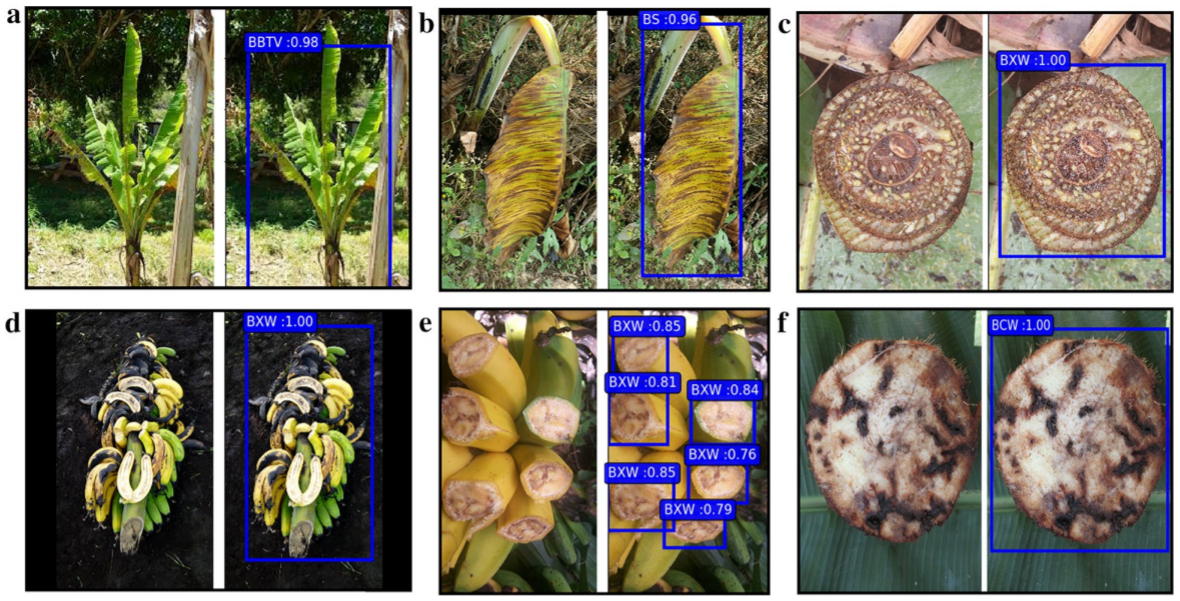

Owing to these factors, timely disease and pest recognition is a priority for farmers, who often have few options other than consulting fellow farmers or seeking help from government-funded helplines [62]. The availability of public datasets such as PlantVillage [63] and PlantDoc [58] has enabled progress in disease and pest detection. Recent research on pest and insect detection [64, 65, 66, 67, 68], invasive species detection in marine aquaculture [69], and disease detection in plant leaves [70, 71, 72, 73, 74], rice [75, 76, 77], tomato [34, 78, 79, 80], banana [81], grape [82], sugarcane [83], eggplant [84], cucumber [85], soybean [86], olive [87], tea [88] and coffee [89], along with other similar works, takes encouraging steps towards disease-free agriculture. Figure 9 depicts banana disease and pest detection outputs from [81]. The work in [90] reports solutions to existing limitations in plant disease detection.

The past few decades have witnessed great progress of unmanned aerial vehicles (UAVs) in civilian fields, especially in photogrammetry and remote sensing. In contrast with manned aircraft and satellite platforms, the UAV platform offers many promising characteristics (flexibility, efficiency, high spatial/temporal resolution, low cost, ease of operation, etc.), which make it an effective complement to other remote sensing platforms and a cost-effective means for remote sensing. We refer readers to [91, 92] for detailed reports on the techniques and applications of UAVs in precision agriculture, remote sensing, search and rescue, and construction and infrastructure inspection, as well as a discussion of other market opportunities. UAVs can be utilized in precision agriculture (PA) for crop management and monitoring [93, 94], weed detection [95], irrigation scheduling [96], agricultural pattern detection [97], pesticide spraying [93], cattle detection [98], disease detection [99, 100], insect detection [101] and data collection from ground sensors (moisture, soil properties, etc.) [102]. The deployment of UAVs in PA is a cost-effective and time-saving technology that can help improve crop yields, farm productivity and profitability. Moreover, UAVs facilitate agricultural management, weed monitoring and pest damage assessment, thereby helping farmers meet these challenges quickly [103].

UAVs can also be utilized to monitor and quantify several irrigation factors, such as the availability of soil water, crop water need (the amount of water the various crops need to grow optimally), rainfall amount, and the efficiency of the irrigation system [104]. In [105], UAVs are utilized to estimate the spatial distribution of surface soil moisture from high-resolution multispectral imagery in combination with ground sampling. UAVs are also being used for thermal remote sensing to monitor the spatial and temporal patterns of crop diseases during various disease development phases, which reduces crop losses for farmers. The work in [106] detects early-stage development of a soil-borne fungus in UAV imagery. Soil texture can be an indicator of soil quality, which in turn influences crop productivity. Hence, UAV thermal images are being utilized to quantify soil texture at a regional scale by measuring differences in land surface temperature under relatively homogeneous climatic conditions [107, 108]. Accurate assessment of crop residue is crucial for proper implementation of conservation tillage practices, since crop residues provide a protective layer that shields the soil of agricultural fields from wind and water. In [109], the authors demonstrated that aerial thermal images can explain more than 95% of the variability in crop residue cover, compared to 77% using visible and near-IR images.

Farmers must monitor crop maturity to determine the harvesting time of their crops. UAVs can be a practical solution to this problem [110]. Farmers require accurate, early estimation of crop yield for a number of reasons, including crop insurance, planning of harvest and storage requirements, and cash flow budgeting. In [111], UAV images were utilized to estimate yield and total biomass of rice crop in Thailand. In [112], UAV images were also utilized to predict corn grain yields in the early to midseason crop growth stages in Germany.

There have also been successful efforts that seamlessly combine aerial and ground based systems for precision agriculture [113]. With relaxed flight regulations and drastic improvements in machine learning techniques, geo-referencing, mosaicing, and other related algorithms, UAVs offer great potential for soil and crop monitoring [114]. Precision agriculture researchers are encouraged to design and deploy specialized cameras and sensors on board UAVs that can remotely monitor crops and detect soil and other agricultural characteristics in real time.

Climate change and its unpredictable nature have affected the production and maintenance of the majority of agricultural crops. With more than seven billion mouths to feed, greater demands are being placed on agriculture than ever before, at the same time as land is being degraded by factors such as soil erosion, mineral exhaustion and drought. It has therefore become a priority for governments to support farmers by providing crucial information about changing weather conditions, soil conditions and more. Currently, satellite imagery is making agriculture more efficient by reducing farmers’ scouting efforts, optimizing nitrogen use through variable-rate application, optimizing water schedules, and identifying field performance and benchmark fields [115]. India alone has 7 satellites specially designed to benefit farmers [116].

Satellites and their imagery are being applied to agriculture in several ways: initially as a means of estimating crop yields [117] and crop types [118], and also for measuring soil salinity, soil moisture and soil pH [119, 120, 121]. Optical and radar sensors can provide an accurate picture of the acreage being cultivated, while also differentiating between crop types and determining their health and maturity. Optical satellite sensors detect visible and near-infrared wavelengths of light reflected from the agricultural land below, and combinations of these wavelengths can be used to assess the condition of the crops. This information helps inform the market and provides early warning of crop failure or famine.
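One widely used way of combining the red and near-infrared bands is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red). Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so it yields values close to 1, while sparse vegetation and bare soil give lower values. The reflectance values in this sketch are hypothetical and only meant to show the ordering.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red), in [-1, 1]."""
    if nir + red == 0:
        return 0.0  # no signal in either band
    return (nir - red) / (nir + red)


# Hypothetical surface reflectances (0 to 1) for three pixels: (NIR, Red).
pixels = {
    "dense healthy canopy": (0.50, 0.05),
    "stressed or sparse crop": (0.30, 0.15),
    "bare soil": (0.25, 0.20),
}
for name, (nir, red) in pixels.items():
    print(f"{name}: NDVI = {ndvi(nir, red):.2f}")
```

Applied pixel by pixel to a satellite scene, the same formula produces an NDVI map of a field.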

By extension, satellites are also used as a management tool through the practice of PA, where satellite images are used to characterise a farmer’s fields in detail, often in combination with geographical information systems (GIS), to allow more intensive and efficient cultivation practices. For instance, different crops might be recommended for different fields, while the farmer’s use of fertiliser is optimised in a more economic and environmentally friendly fashion. Providing access to satellite imagery is also very important for building trust among the parties involved (farmers, government and private bodies). Web-based platforms such as Google Earth Engine, Planet.com, Earth Data Search by NASA, LandViewer by Earth Observing System, Geocento [122] and others [123] provide access to past and present (even daily) satellite imagery of an area of interest.

Agricultural monitoring is also increasingly being applied to forestry, both for forest management and as a way of characterising forests as carbon sinks to help minimise climate change – notably as part of the UN’s REDD programme [124].

While deep learning based plant phenotyping has shown great promise, the requirement for large labeled datasets remains a bottleneck. Phenotyping tasks are often specific to particular environmental and genetic conditions, and finding large datasets covering such conditions is not always possible. As a result, researchers often need to acquire and label their own datasets, which is an arduous and expensive affair. Moreover, small datasets often lead to models that overfit. Deep learning approaches optimized for limited labeled data would immensely help the plant phenotyping community, since they would encourage many more farmers, breeders, and researchers to employ reliable plant phenotyping techniques to optimize crop yield. To this end, we review some recent efforts in deep plant phenotyping with limited labeled data.

The computer vision community has long employed dataset augmentation techniques to grow the amount of data using artificial transformations. Artificially perturbing the original dataset with affine transformations (e.g., rotation, scaling, translation) is now common practice. However, this approach has a limitation: the augmented data only capture the variability of the available training set (e.g., if the dataset does not include a fruit of an unusual color, that case will never be learned). To overcome this, several recently proposed data augmentation methods take advantage of advancements in image generation. In [125], the authors use a Generative Adversarial Network (GAN) [126] to generate Arabidopsis plant images (called ARIGAN) with desirable traits (more than 7 leaves) that were originally rare in the dataset. Figure 10 (a) shows examples of images generated by ARIGAN. More recent works [127, 128] use more advanced GAN variants to generate realistic plant images with desirable leaf segmentations, boosting the leaf counting accuracy of the learning models. In [129], the authors proposed an unsupervised image translation technique to improve plant disease recognition performance. LeafGAN [130], an image-to-image translation model, generates leaf images with various plant diseases and boosts diagnostic performance by a large margin. Two sets of example images generated by LeafGAN are shown in Figure 10 (b). Researchers are also employing other data enhancement techniques, such as training plant disease diagnosis models on generated lesions [131].
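A minimal sketch of label-preserving geometric augmentation, assuming square images stored as lists of rows: the four 90-degree rotations and their mirror images yield 8 variants of every training image. For detection or segmentation data, the same transform must also be applied to the boxes or masks. Every variant contains exactly the same pixel values as the original, which is the limitation discussed above.

```python
def rotate90(image):
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def hflip(image):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in image]


def augment(image):
    """Return the 8 rotations/flips (the dihedral group) of a square image."""
    variants, current = [], image
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rotate90(current)
    return variants


image = [[1, 2], [3, 4]]
print(rotate90(image))       # [[3, 1], [4, 2]]
print(hflip(image))          # [[2, 1], [4, 3]]
print(len(augment(image)))   # 8
```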

Efforts to provide finely annotated data have greatly improved state-of-the-art segmentation performance. Some researchers have started working on effectively transferring the knowledge obtained from annotated RGB plant images either to other species or to other imaging modalities. In [132], the authors successfully transfer the knowledge gained from annotated leaves of Arabidopsis thaliana in RGB images to images of the same plant in chlorophyll fluorescence imaging.

Fruit/organ counting is a well-explored task in the plant phenotyping community. However, many current vision-based solutions require highly accurate instance and density labels of fruits and organs across a diverse set of environments. The labeling procedures are often very burdensome and error prone, and in many agricultural scenarios it may be impossible to acquire enough labelled samples to achieve consistent performance that is robust to image noise or other forms of covariate shift. This is why learning from weak labels alone can be crucial for cost-effective plant phenotyping.
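To see why density labels are costly, consider how they are commonly built. Each object is annotated with a point, and a Gaussian is placed at every point so that the map sums to the object count. A counting network is then trained to regress this map, and its prediction is summed to obtain the count. This sketch assumes hypothetical point annotations and a fixed sigma of 1 pixel, and renormalizes each Gaussian over the image so that the total exactly equals the number of points. Actual implementations differ in kernel size and border handling.

```python
import math


def density_map(points, height, width, sigma=1.0):
    """Place a normalized Gaussian at each annotated (row, col) point.

    Each Gaussian is renormalized to sum to 1 over the grid (which also
    compensates for truncation at the image border), so the whole map
    sums to the number of annotated objects.
    """
    dmap = [[0.0] * width for _ in range(height)]
    for pr, pc in points:
        kernel = [[math.exp(-((r - pr) ** 2 + (c - pc) ** 2) / (2 * sigma ** 2))
                   for c in range(width)] for r in range(height)]
        total = sum(map(sum, kernel))
        for r in range(height):
            for c in range(width):
                dmap[r][c] += kernel[r][c] / total
    return dmap


# Hypothetical point annotations (one click per fruit) on a 10x10 image.
points = [(2, 2), (2, 7), (7, 5)]
dmap = density_map(points, 10, 10)
print(round(sum(map(sum, dmap)), 6))  # 3.0
```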

Recently, much attention has been placed on engineering weakly supervised learning frameworks for plant phenotyping. In [48], the authors created a weakly supervised framework for sorghum head detection in which annotators label data only until the model reaches a desired performance level; after that, model outputs are directly used as data labels, leading to a drastic reduction in annotation costs with minimal loss in model accuracy. In other work [133], the authors proposed a strategy that learns to count fruits without requiring task-specific supervision labels, such as manually labelled object bounding boxes or total instance counts. In [134], the authors use a CNN trained on defect classification data and use its activation maps to segment infected regions on potatoes. Because segmentation normally requires very rich labels (every pixel of the image is annotated), this approach effectively bypasses segmentation labeling altogether. On another note, rice heading date estimation greatly assists breeders in understanding the adaptability of the crop to various environmental and genetic conditions. Accurate estimation of heading date requires monitoring the increase in the number of rice panicles in the crop. Detecting rice panicles in crop images usually requires training an object detection model such as Faster R-CNN or YOLO, which needs costly bounding box annotations. However, a recently proposed method [49] uses a sliding window based detector that only requires training an image classifier, for which annotations are much easier to obtain.
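The general idea of turning an image classifier into a detector can be sketched generically. This is not the implementation of [49]: the window size, stride, threshold and the `toy_classifier` stand-in for a trained CNN are all hypothetical. The classifier scores every window, and windows scoring above the threshold are reported as detections.

```python
def sliding_windows(height, width, win, stride):
    """Yield the top-left corner (row, col) of every win x win window."""
    for r in range(0, height - win + 1, stride):
        for c in range(0, width - win + 1, stride):
            yield r, c


def detect(image, classify, win=2, stride=1, threshold=0.5):
    """Turn a patch classifier into a coarse detector.

    `classify` is any function mapping a win x win patch to a score in [0, 1];
    windows scoring above `threshold` are returned as boxes (x0, y0, x1, y1).
    """
    boxes = []
    for r, c in sliding_windows(len(image), len(image[0]), win, stride):
        patch = [row[c:c + win] for row in image[r:r + win]]
        if classify(patch) > threshold:
            boxes.append((c, r, c + win - 1, r + win - 1))
    return boxes


def toy_classifier(patch):
    """Hypothetical stand-in for a trained CNN: fraction of 'panicle' pixels."""
    flat = [v for row in patch for v in row]
    return sum(flat) / len(flat)


image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
print(detect(image, toy_classifier))  # [(1, 1, 2, 2)]
```

Only image-level labels (panicle or not) are needed to train the classifier. A practical detector would typically also merge overlapping windows, for example with non-maximum suppression.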

Transfer learning enables knowledge gained while solving one problem to be applied to a different but related problem; for example, a model trained on one phenotyping task (say, potato leaf classification) can assist another phenotyping task (tomato leaf classification). Transfer learning is a very well explored area of machine learning. As one of the first steps in adopting existing transfer learning techniques for plant phenotyping, the authors of [136] used CNNs (AlexNet, GoogleNet and VGGNet) pretrained on the ImageNet dataset [137] and fine-tuned them on the plant dataset used in the LifeCLEF [138] 2015 challenge. With the help of transfer learning, they beat the then state-of-the-art LifeCLEF performance by 15 percentage points. Similarly, in [139] the authors report better-than-human results on a segmentation task by transferring a model trained on a peanut root dataset to a switchgrass root dataset (they also report results using ImageNet pretrained models). Leaf disease detection and treatment recommendation performance has also been shown to improve with transfer learning [140]. In [141], the authors combined a state-of-the-art weakly supervised fruit counting model with an unsupervised style transfer method. They used a Cycle-Generative Adversarial Network (C-GAN) to perform unsupervised domain adaptation from one fruit dataset to another, trained it alongside a Presence-Absence Classifier (PAC) that discriminates between images that do and do not contain fruits, and ultimately achieved better performance than fully supervised models.

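The unpaired style transfer underlying [141] rests on the cycle-consistency idea. For reference, the generic CycleGAN objective is usually written as below, here for a source fruit domain $X$ and a target fruit domain $Y$, with generators $G: X \to Y$ and $F: Y \to X$, discriminators $D_X$ and $D_Y$, adversarial losses $\mathcal{L}_{\text{GAN}}$, and a weighting factor $\lambda$. This is a sketch of the standard formulation only, not the full training loss of [141], which additionally involves the PAC.

```latex
\begin{aligned}
\mathcal{L}_{\text{cyc}}(G, F) &=
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big], \\
\mathcal{L}(G, F, D_X, D_Y) &=
  \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X)
  + \lambda \, \mathcal{L}_{\text{cyc}}(G, F).
\end{aligned}
```

The cycle term forces an image translated to the other fruit domain and back to match the original, which is what allows training without paired images from the two datasets.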
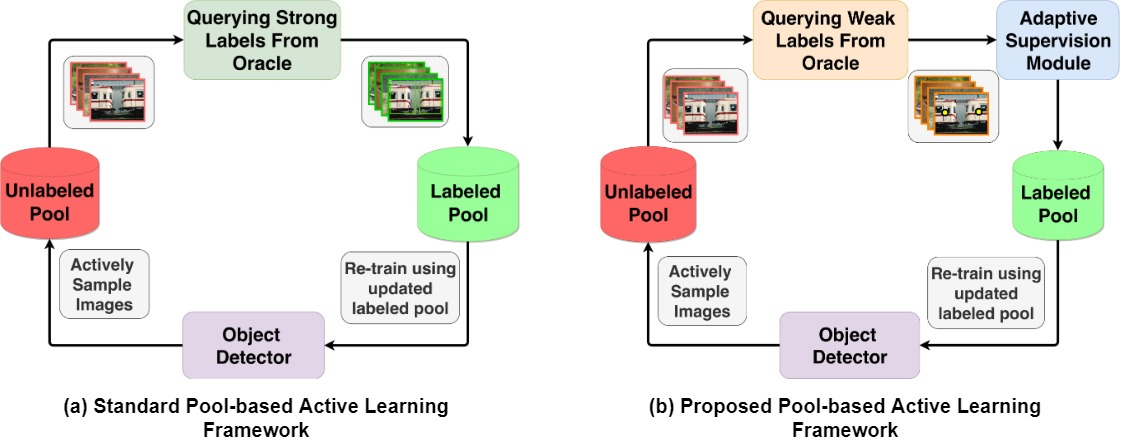

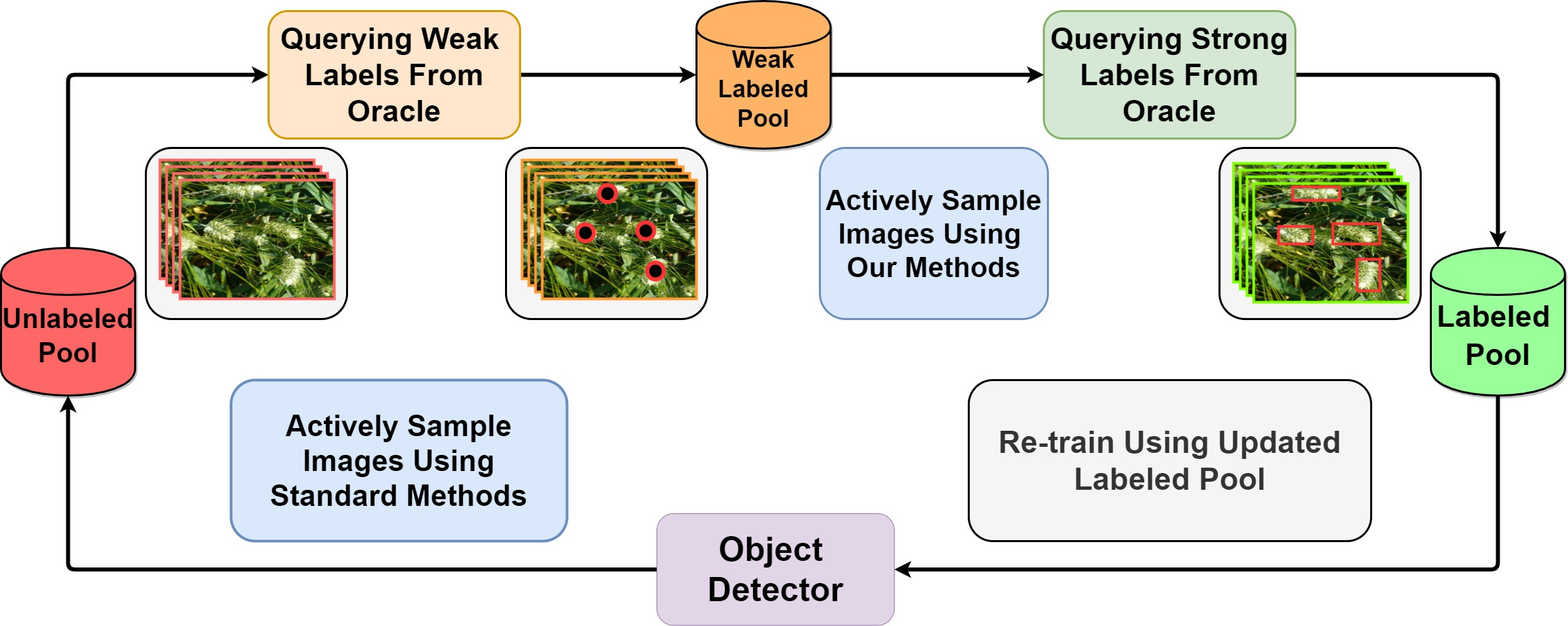

Active learning [143], an iterative training approach that selects the most informative samples to label, has been shown to reduce labeled data requirements when training deep classification networks [144, 145, 146]. Research on active learning for object detection [147, 148, 149] has arguably been limited. However, numerous plant phenotyping tasks, such as crop yield quantification and fruit counting, depend directly on object detection. With this in mind, an active learning method has been proposed [135] for training deep object detection models in which the model can selectively query either weak labels (pointing at the object) or strong labels (drawing a box around the object). By introducing a switching module between weak and strong labels, the authors were able to save 24% of annotation time while training a wheat head detection [46] model. Figure 11 illustrates the difference between the regular active learning cycle and the proposed one. This method demonstrates the applicability of active learning to plant phenotyping, where labeled data is often difficult to obtain. Along the same lines, to reduce the labeled data needed to train object detection models for cereal crops, a weak-supervision-based active learning method [142] was proposed recently. In this approach, the model interacts with a human annotator by iteratively querying labels for only the most informative images, as opposed to all images in a dataset. Figure 12 illustrates the proposed framework. The active query method is specifically designed for cereal crops, whose panicles usually show low variance in appearance. This training method has been shown to reduce annotation costs by over 50% on sorghum head and wheat spike detection datasets. We expect more research applying active learning to plant phenotyping with limited labeled data in the near future.
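The basic active learning cycle can be sketched as generic pool-based uncertainty sampling: train on the labels collected so far, score every unlabeled image by the entropy of its predicted class distribution, send only the top-`budget` images to the annotator, and repeat. The `oracle`, `train` and `predict_proba` callables are hypothetical hooks, and entropy is just one common informativeness measure; this is not the weak/strong switching of [135] or the point-supervision query of [142].

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_queries(pool_probs: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Return the `budget` unlabeled images with the most uncertain predictions."""
    ranked = sorted(pool_probs, key=lambda img: entropy(pool_probs[img]), reverse=True)
    return ranked[:budget]


def active_learning(
    pool: Sequence[str],
    oracle: Callable[[str], str],
    train: Callable[[Dict[str, str]], object],
    predict_proba: Callable[[object, str], Sequence[float]],
    rounds: int,
    budget: int,
) -> Tuple[object, Dict[str, str]]:
    """Pool-based active learning: train, query the most uncertain images, repeat."""
    labeled: Dict[str, str] = {}
    unlabeled = sorted(pool)  # sorted for deterministic tie-breaking
    model = train(labeled)
    for _ in range(rounds):
        if not unlabeled:
            break
        probs = {img: predict_proba(model, img) for img in unlabeled}
        for img in select_queries(probs, budget):
            labeled[img] = oracle(img)  # the human annotator labels only these images
            unlabeled.remove(img)
        model = train(labeled)
    return model, labeled


# Hypothetical fixed predictions standing in for a detector's confidence scores.
fake_probs = {"a": [0.5, 0.5], "b": [0.9, 0.1], "c": [0.6, 0.4], "d": [0.99, 0.01]}
model, labeled = active_learning(
    pool=list(fake_probs),
    oracle=lambda img: "panicle",
    train=lambda labels: dict(labels),
    predict_proba=lambda model, img: fake_probs[img],
    rounds=1,
    budget=2,
)
print(sorted(labeled))  # ['a', 'c']
```

The annotation saving comes from the budget: each round only `budget` images reach the annotator, and confidently predicted images such as "d" are never labeled unless the model later becomes uncertain about them.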

In this section, we describe some of the challenges in plant phenotyping methods that warrant further research.

Modern phenotyping methods rely on deep learning, which is notorious for requiring large amounts of labeled data. While some progress has been made in developing data-efficient models for phenotyping, reducing the labeling effort needed to train effective phenotyping tools is still an open problem. We believe that effectively adapting deep learning techniques such as unsupervised, self-supervised, weakly supervised, active and semi-supervised learning will greatly help the phenotyping community observe plant traits with small datasets.

Deep neural networks are generally regarded as black boxes that produce predictions without sufficient justification. This makes debugging a neural network difficult: it can be hard to understand what caused a wrong prediction. Crop management decisions based on incorrect phenotyping results can cause financial losses. Hence, developing explainable models for plant phenotyping is one of the open problems in this field. Using explainable models to uncover the reasons behind a given set of plant traits could lead to breakthroughs in our understanding of plant behavior under various genetic and environmental conditions.

Vision-based plant phenotyping suffers from challenges such as occlusion, inaccurate 3D reconstruction of crops, and poor lighting caused by changing weather. It is therefore necessary to develop phenotyping tools that are robust to such visual variations.

High throughput plant phenotyping methods have shown great promise for efficiently monitoring crops in plant breeding and agricultural crop management. Research in deep learning has accelerated progress in plant phenotyping, resulting in the development of various image analysis tools for observing plant traits. However, the wide applicability of high throughput phenotyping tools is limited by several issues: 1) the dependence of deep networks on large datasets, which are difficult to curate, 2) large variations in the field environment, which cannot always be captured, and 3) capital and maintenance costs, which can be prohibitively expensive for wide use in developing countries. With many open problems in plant phenotyping warranting further study, it is a great time to study plant phenotyping and achieve rapid progress by utilizing advances in deep learning.

1. Hunter, M., Smith, R., Schipanski, M., Atwood, L., Mortensen, D.: Agriculture in 2050: Recalibrating targets for sustainable intensification. BioScience 67 (02 2017)

2. Wu, X., Guo, J., Han, M., Chen, G.: An overview of arable land use for the world economy: From source to sink via the global supply chain. Land Use Policy 76 (2018) 201 – 214

3. Gago, J., Douthe, C., Coopman, R., Gallego, P., Ribas-Carbo, M., Flexas, J., Escalona, J., Medrano, H.: UAVs challenge to assess water stress for sustainable agriculture. Agricultural Water Management 153 (2015) 9 – 19

4. Aubert, B.A., Schroeder, A., Grimaudo, J.: IT as enabler of sustainable farming: An empirical analysis of farmers’ adoption decision of precision agriculture technology. Decision Support Systems 54(1) (2012) 510 – 520

5. Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D., Stefanovic, D.: Deep neural networks based recognition of plant diseases by leaf image classification. In: Comp. Int. and Neurosc. (2016)

6. Ruckelshausen, A., Biber, P., Dorna, M., Gremmes, H., Klose, R., Linz, A., Rahe, R., Resch, R., Thiel, M., Trautz, D., Weiss, U.: Bonirob: An autonomous field robot platform for individual plant phenotyping. Precision Agriculture 9 (01 2009) 841–847

7. Fitch, F.B.: Warren S. McCulloch and Walter Pitts. A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biophysics, vol. 5 (1943), pp. 115–133. Journal of Symbolic Logic 9(2) (1944) 49–50

8. Rosenblatt, F.F.: The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review 65(6) (1958) 386–408

9. Hornik, K., Stinchcombe, M., White, H.: Multilayer feedforward networks are universal approximators. Neural Netw. 2(5) (July 1989) 359–366

10. Bengio, Y., Lamblin, P., Popovici, D., Larochelle, H.: Greedy layer-wise training of deep networks. Volume 19. (01 2007)

11. Bengio, Y.: Learning deep architectures for ai. Foundations 2 (01 2009) 1–55

12. Alom, M.Z., Taha, T.M., Yakopcic, C., Westberg, S., Sidike, P., Nasrin, M.S., Hasan, M., Van Essen, B.C., Awwal, A.A.S., Asari, V.K.: A state-of-the-art survey on deep learning theory and architectures. Electronics 8(3) (Mar 2019) 292

13. Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., eds.: Advances in Neural Information Processing Systems 27. Curran Associates, Inc. (2014) 2672–2680

14. : A framework for designing the architectures of deep convolutional neural networks. Entropy 19(6) (May 2017) 242

15. Fukushima, K.: Neocognitron: A hierarchical neural network capable of visual pattern recognition. Neural Networks 1 (1988) 119–130

16. Lecun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proceedings of the IEEE 86(11) (Nov 1998) 2278–2324

17. Lieder, I., Resheff, Y.S., T.H.: Learning TensorFlow. (2017) Chapter 4

18. Boureau, Y.L., Ponce, J., Lecun, Y.: A theoretical analysis of feature pooling in visual recognition. (11 2010) 111–118

19. Scherer, D., Müller, A., Behnke, S.: Evaluation of pooling operations in convolutional architectures for object recognition. In Diamantaras, K., Duch, W., Iliadis, L.S., eds.: Artificial Neural Networks – ICANN 2010, Berlin, Heidelberg, Springer Berlin Heidelberg (2010) 92–101

20. Jiao, L., Zhang, F., Liu, F., Yang, S., Li, L., Feng, Z., Qu, R.: A survey of deep learning-based object detection. IEEE Access 7 (2019) 128837–128868

21. Lu, Z., Xu, H., Liu, G.: A survey of object co-segmentation. IEEE Access PP (05 2019) 1–1

22. Lin, T.Y., Maire, M., Belongie, S.J., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft coco: Common objects in context. In: ECCV. (2014)

23. Gharde, Y., Singh, P., R.D., Gupta, P.: Assessment of yield and economic losses in agriculture due to weeds in India. Volume 107. (2018) 12–18

24. Haug, S., Ostermann, J.: A crop/weed field image dataset for the evaluation of computer vision based precision agriculture tasks. In: ECCV Workshops. (2014)

25. Binguitcha-Fare, A.A., Sharma, P.: Crops and weeds classification using convolutional neural networks via optimization of transfer learning parameters

26. Fawakherji, M., Youssef, A., Bloisi, D.D., Pretto, A., Nardi, D.: Crop and weeds classification for precision agriculture using context-independent pixel-wise segmentation. 2019 Third IEEE International Conference on Robotic Computing (IRC) (2019) 146–152

27. Guerrero, J.M., Pajares, G., Montalvo, M., Romeo, J., Guijarro, M.: Support vector machines for crop/weeds identification in maize fields. Expert Syst. Appl. 39 (2012) 11149–11155

28. Sa, I., Chen, Z., Popovic, M., Khanna, R., Liebisch, F., Nieto, J., Siegwart, R.: weedNet: Dense semantic weed classification using multispectral images and MAV for smart farming. IEEE Robotics and Automation Letters 3 (2017) 588–595

29. Rani, K., Supriya, P., Sarath, T.V.: Computer vision based segregation of carrot and curry leaf plants with weed identification in carrot field. 2017 International Conference on Computing Methodologies and Communication (ICCMC) (2017) 185–188

30. Nkemelu, D.K., Omeiza, D., Lubalo, N.: Deep convolutional neural network for plant seedlings classification. ArXiv abs/1811.08404 (2018)

31. Elnemr, H.A.: Convolutional neural network architecture for plant seedling classification. (2019)

32. Xiang, T.Z., Xia, G.S., Zhang, L.: Mini-uav-based remote sensing: Techniques, applications and prospectives (12 2018)

33. Patrício, D.I., Rieder, R.: Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 153 (2018) 69–81

34. Fuentes, A., Yoon, S., Kim, S.C., Park, D.S.: A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. In: Sensors. (2017)

35. Bachche, S.: Deliberation on design strategies of automatic harvesting systems: A survey. Robotics 4 (2015) 194–222

36. Allende, A., Monaghan, J.M., Uyttendaele, M., Franz, E., Schlüter, O.: Irrigation water quality for leafy crops: A perspective of risks and potential solutions. In: International journal of environmental research and public health. (2015)

37. Guo, W., Zheng, B., Potgieter, A.B., Diot, J., Watanabe, K., Noshita, K., Jordan, D.R., Wang, X., Watson, J., Ninomiya, S., Chapman, S.C.: Aerial Imagery Analysis – Quantifying Appearance and Number of Sorghum Heads for Applications in Breeding and Agronomy. Frontiers in Plant Science 9(October) (2018) 1–9

38. Chai, Q., Gan, Y., Zhao, C., Xu, H.l., Waskom, R., Niu, Y., Siddique, K.: Regulated deficit irrigation for crop production under drought stress. a review. Agronomy for Sustainable Development 36 (03 2016)

39. Zhao, D., Liu, X., Chen, Y., Ji, W., Jia, W., Hu, C.: Image recognition at night for apple picking robot. Nongye Jixie Xuebao/Transactions of the Chinese Society for Agricultural Machinery 46 (03 2015) 15–22

40. Yamane, S., Miyazaki, M.: Study on electrostatic pesticide spraying system for low-concentration, high-volume applications. (2017)

41. Oktay, K., Bedoschi, G., Pacheco, F., Turan, V., Emirdar, V.: First pregnancies, livebirth and in vitro fertilization outcomes after transplantation of frozen-banked ovarian tissue with a human extracellular matrix scaffold using robot-assisted minimally invasive surgery. American Journal of Obstetrics and Gynecology 214 (11 2015)

42. Zheng, Y.Y., Kong, J.L., Jin, X.B., Wang, X.Y., Su, T.L., Zuo, M.: Cropdeep: The crop vision dataset for deep-learning-based classification and detection in precision agriculture. In: Sensors. (2019)

43. Minervini, M., Fischbach, A., Scharr, H., Tsaftaris, S.: Finely-grained annotated datasets for image-based plant phenotyping. Pattern Recognition Letters 81 (11 2015)

44. Scharr, H., Minervini, M., Fischbach, A., Tsaftaris, S.: Annotated image datasets of rosette plants (07 2014)

45. Cruz, J., Yin, X., Liu, X., Imran, S., Morris, D., Kramer, D., Chen, J.: Multi-modality imagery database for plant phenotyping. Machine Vision and Applications 27 (07 2016)

46. Madec, S., Jin, X., Lu, H., de Solan, B., Liu, S., Duyme, F., Heritier, E., Frederic, B.: Ear density estimation from high resolution rgb imagery using deep learning technique. Agricultural and Forest Meteorology 264 (01 2019) 225–234

47. Lu, H., Cao, Z.G., Xiao, Y., Li, Y., Zhu, Y.: Joint crop and tassel segmentation in the wild. (11 2015)

48. Ghosal, S., Zheng, B., Chapman, S.C., Potgieter, A.B., Jordan, D., Wang, X., Singh, A.K., Singh, A., Hirafuji, M., Ninomiya, S., Ganapathysubramanian, B., Sarkar, S., Guo, W.: A weakly supervised deep learning framework for sorghum head detection and counting. (2019)

49. Desai, S.V., Balasubramanian, V.N., Fukatsu, T., Ninomiya, S., Guo, W.: Automatic estimation of heading date of paddy rice using deep learning. Plant Methods 15(1) (2019) 76

50. Hasan, M.M., Chopin, J.P., Laga, H., Miklavcic, S.J.: Detection and analysis of wheat spikes using convolutional neural networks. Plant Methods 14(1) (Nov 2018) 100

51. Ubbens, J., Cieslak, M., Prusinkiewicz, P., Stavness, I.: The use of plant models in deep learning: an application to leaf counting in rosette plants. In: Plant Methods. (2018)

52. Sa, I., Ge, Z., Dayoub, F., Upcroft, B., Perez, T., McCool, C.: Deepfruits: A fruit detection system using deep neural networks. In: Sensors. (2016)

53. Xiong, X., Duan, L., Liu, L., Tu, H., Yang, P., Wu, D., Chen, G., Xiong, L., Yang, W., Liu, Q.: Panicle-seg: a robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization. Plant Methods 13(1) (Nov 2017) 104

54. Oh, M.h., Olsen, P., Ramamurthy, K.N.: Counting and Segmenting Sorghum Heads. (05 2019)

55. Tai, A., Val Martin, M., Heald, C.: Threat to future global food security from climate change and ozone air pollution. Nature Climate Change 4 (07 2014) 817–821

56. Unknown: Pollinators vital to our food supply under threat. Press Release, Intergovernmental Platform on Biodiversity and Ecosystem Services (2016)

57. Strange, R., Scott, P.: Plant disease: A threat to global food security. Annual review of phytopathology 43 (02 2005) 83–116

58. Singh, D., Jain, N., Jain, P., Kayal, P., Kumawat, S., Batra, N.: Plantdoc: A dataset for visual plant disease detection. In: CoDS COMAD 2020. (2020)

59. Walpole, M., Smith, J., Rosser, A., Brown, C., Schulte-Herbruggen, B., Booth, H., Sassen, M., Mapendembe, A., Fancourt, M., Bieri, M., Glaser, S., Corrigan, C., Narloch, U., Runsten, L., Jenkins, M., Gomera, M., Hutton, J.: Smallholders, food security, and the environment (03 2013)

60. Harvey, C.A., Rakotobe, Z.L., et al.: Extreme vulnerability of smallholder farmers to agricultural risks and climate change in Madagascar (04 2014)

61. Sanchez, P., Swaminathan, M.: Cutting world hunger in half. Science (New York, N.Y.) 307 (02 2005) 357–9

62. Ministry of Agriculture, Government of India: Kisaan knowledge management system. (2019)

63. Hughes, D., Salathe, M.: An open access repository of images on plant health to enable the development of mobile disease diagnostics through machine learning and crowdsourcing. (11 2015)

64. Liu, B., Zhuhua, H., Zhao, Y., Bai, Y., Wang, Y.: Recognition of pyralidae insects using intelligent monitoring autonomous robot vehicle in natural farm scene (01 2019)

65. Deng, L., Wang, Y., Han, Z., Yu, R.: Research on insect pest image detection and recognition based on bio-inspired methods. (2018)

66. Javed, M.H., Humair, M., Yaqoob, B., Noor, N., Arshad, T.: K-means based automatic pests detection and classification for pesticides spraying. International Journal of Advanced Computer Science and Applications 8 (01 2017)

67. Liu, T., Chen, W., Wu, W., Sun, C., Guo, W., Zhu, X.: Detection of aphids in wheat fields using a computer vision technique. Biosystems Engineering 141 (01 2016) 82–93

68. Zhong, Y., Gao, J., Lei, Q., Zhou, Y.: A vision-based counting and recognition system for flying insects in intelligent agriculture. In: Sensors. (2018)

69. Galloway, A., Taylor, G.W., Ramsay, A., Moussa, M.A.: The ciona17 dataset for semantic segmentation of invasive species in a marine aquaculture environment. 2017 14th Conference on Computer and Robot Vision (CRV) (2017) 361–366

70. Zhang, S., Wu, X., You, Z.H., Zhang, L.: Leaf image based cucumber disease recognition using sparse representation classification. Comput. Electron. Agric. 134 (2017) 135–141

71. Ferentinos, K.P.: Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 145 (2018) 311–318

72. Pallagani, V., Khandelwal, V., Chandra, B., Udutalapally, V., Das, D., Mohanty, S.P.: dcrop: A deep-learning based framework for accurate prediction of diseases of crops in smart agriculture. 2019 IEEE International Symposium on Smart Electronic Systems (iSES) (Formerly iNiS) (2019) 29–33

73. Mohanty, S.P., Hughes, D.P., Salathé, M.: Using deep learning for image-based plant disease detection. In: Front. Plant Sci. (2016)

74. Francis, M., Deisy, C.: Disease detection and classification in agricultural plants using convolutional neural networks — a visual understanding. 2019 6th International Conference on Signal Processing and Integrated Networks (SPIN) (2019) 1063–1068

75. Li, D., Wang, R., Xie, C., Liu, L., Zhang, J., Li, R., Wang, F., Zhou, M., Liu, W.: A recognition method for rice plant diseases and pests video detection based on deep convolutional neural network. Sensors 20(3) (2020)

76. Liang, W.J., Zhang, H., Zhang, G.F., Cao, H.X.: Rice blast disease recognition using a deep convolutional neural network. In: Scientific Reports. (2019)

77. Zhou, G., Zhang, W., Chen, A., He, M., Ma, X.: Rapid detection of rice disease based on fcm-km and faster r-cnn fusion. IEEE Access 7 (2019) 143190–143206

78. Maeda-Gutiérrez, V., Galván Tejada, C., Zanella Calzada, L., Celaya Padilla, J., Galván Tejada, J., Gamboa-Rosales, H., Luna-Garcia, H., Magallanes-Quintanar, R., Carlos, G.M., Olvera-Olvera, C.: Comparison of convolutional neural network architectures for classification of tomato plant diseases. Applied Sciences 10 (02 2020) 1245

79. Fuentes, A., Yoon, S., Lee, J., Park, D.S.: High-performance deep neural network-based tomato plant diseases and pests diagnosis system with refinement filter bank. In: Front. Plant Sci. (2018)

80. Gutierrez, A., Ansuategi, A., Susperregi, L., Tubío, C., Rankić, I., Lenža, L.: A benchmarking of learning strategies for pest detection and identification on tomato plants for autonomous scouting robots using internal databases. Journal of Sensors 2019 (05 2019) 1–15

81. Selvaraj, M.G., Vergara, A., et al.: AI-powered banana diseases and pest detection. Plant Methods (08 2019)

82. Aravind, K.R., Raja, P., Aniirudh, R., Mukesh, K.V., Ashiwin, R., Vikas, G.: Grape crop disease classification using transfer learning approach. (2018)

83. Shadab, M., Dwivedi, M., S N, O., Javed, T., Bakey, A., Raqib, M., Chakravarthy, A.: Disease recognition in sugarcane crop using deep learning (09 2019)

84. Rangarajan, A.K., Purushothaman, R.: Disease classification in eggplant using pre-trained vgg16 and msvm. Scientific Reports 10 (2020)

85. Zhang, S., Wu, X., You, Z.H., Zhang, L.: Leaf image based cucumber disease recognition using sparse representation classification. Comput. Electron. Agric. 134 (2017) 135–141

86. Kaur, S., Pandey, S., Goel, S.: Semi-automatic leaf disease detection and classification system for soybean culture. IET Image Processing 12 (2018) 1038–1048

87. Cruz, A.C., Luvisi, A., Bellis, L.D., Ampatzidis, Y.: X-fido: An effective application for detecting olive quick decline syndrome with deep learning and data fusion. In: Front. Plant Sci. (2017)

88. Chen, J., Liu, Q., Gao, L.: Visual tea leaf disease recognition using a convolutional neural network model. Symmetry 11 (2019) 343

89. Esgario, J., Krohling, R., Ventura, J.: Deep learning for classification and severity estimation of coffee leaf biotic stress (07 2019)

90. Arsenovic, M., Karanovic, M., Sladojevic, S., Anderla, A., Stefanovic, D.: Solving current limitations of deep learning based approaches for plant disease detection. Symmetry 11 (2019) 939

91. Shakhatreh, H., Sawalmeh, A.H., Al-Fuqaha, A., Dou, Z., Almaita, E., Khalil, I.M., Othman, N.S., Khreishah, A., Guizani, M.: Unmanned aerial vehicles (uavs): A survey on civil applications and key research challenges. IEEE Access 7 (2019) 48572–48634

92. Xiang, T., Xia, G.S., Zhang, L.: Mini-unmanned aerial vehicle-based remote sensing: Techniques, applications, and prospects. IEEE Geoscience and Remote Sensing Magazine 7 (2019) 29–63

93. Huang, Y., Thomson, S.J., Hoffmann, W.C., Lan, Y., Fritz, B.K.: Development and prospect of unmanned aerial vehicle technologies for agricultural production management. (2013)

94. Dastgheibifard, S., Asnafi, M.: A review on potential applications of unmanned aerial vehicle for construction industry. (07 2018)

95. Kazmi, W., Bisgaard, M., Garcia-Ruiz, F.J., Hansen, K.D., la Cour-Harbo, A.: Adaptive surveying and early treatment of crops with a team of autonomous vehicles. In: ECMR. (2011)

96. Gonzalez-Dugo, V., Zarco-Tejada, P., et al.: Using high resolution UAV thermal imagery to assess the variability in the water status of five fruit tree species within a commercial orchard. Precision Agriculture 14(6) (12 2013) 660–678

97. Chiu, M.T., Xu, X., Wei, Y., Huang, Z., Schwing, A.G., Brunner, R., Khachatrian, H., Karapetyan, H., Dozier, I., Rose, G., Wilson, D., Tudor, A.P., Hovakimyan, N., Huang, T.S., Shi, H.: Agriculture-vision: A large aerial image database for agricultural pattern analysis. ArXiv abs/2001.01306 (2020)

98. Barbedo, J., Koenigkan, L., Santos, T., Santos, P.: A study on the detection of cattle in uav images using deep learning. Sensors 19 (12 2019) 5436

99. Garcia-Ruiz, F., Sankaran, S., Maja, J.M., Lee, W.S., Rasmussen, J., Ehsani, R.: Comparison of two aerial imaging platforms for identification of huanglongbing-infected citrus trees. Computers and Electronics in Agriculture 91 (02 2013) 106–115

100. Kerkech, M., Hafiane, A., Canals, R.: Vine disease detection in uav multispectral images with deep learning segmentation approach. (2019)

101. Stumph, B., Virto, M.H., Medeiros, H., Tabb, A., Wolford, S., Rice, K., Leskey, T.C.: Detecting invasive insects with unmanned aerial vehicles. 2019 International Conference on Robotics and Automation (ICRA) (2019) 648–654

102. Mathur, P., Nielsen, R.H., Prasad, N.R., Prasad, R.: Data collection using miniature aerial vehicles in wireless sensor networks. IET Wireless Sensor Systems 6 (2016) 17–25

103. Primicerio, J., Di Gennaro, S., Fiorillo, E., Genesio, L., Lugato, E., Matese, A., Vaccari, F.: A flexible unmanned aerial vehicle for precision agriculture. Precision Agriculture (08 2012)

104. F. M. Rhoads, C.D.Y.: Irrigation scheduling for corn—why and how. National Corn Handbook (2000)

105. Hassan-Esfahani, L., Torres-Rua, A., Jensen, A., McKee, M.: Assessment of surface soil moisture using high-resolution multi-spectral imagery and artificial neural networks. Remote Sensing 7 (03 2015) 2627–2646

106. Calderón Madrid, R., Navas Cortés, J., Lucena, C., Zarco-Tejada, P.: High-resolution airborne hyperspectral and thermal imagery for early detection of verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sensing of Environment 139 (09 2013) 231–245

107. Wang, D.C., Zhang, G.L., Pan, X., Zhao, Y.G., Zhao, M.S., Wang, G.F.: Mapping soil texture of a plain area using fuzzy-c-means clustering method based on land surface diurnal temperature difference. Pedosphere 22 (06 2012) 394–403

108. Wang, D.C., Zhang, G.L., Zhao, M.S., Pan, X., Zhao, Y.G., Li, D.C., Macmillan, B.: Retrieval and mapping of soil texture based on land surface diurnal temperature range data from modis. PLOS ONE 10 (06 2015) e0129977

109. Sullivan, D., Shaw, J., Mask, P., Rickman, D., Guertal, E., Luvall, J., Wersinger, J.: Evaluation of multispectral data for rapid assessment of wheat straw residue cover. Soil Science Society of America Journal 68 (11 2004)

110. Jensen, T., Apan, A., Zeller, L.: Crop maturity mapping using a low-cost low-altitude remote sensing system. (2009)

111. Swain, K., Thomson, S., Jayasuriya, H.: Adoption of an unmanned helicopter for low-altitude remote sensing to estimate yield and total biomass of a rice crop. Transactions of the ASABE 53 (01 2010)

112. Geipel, J., Link, J., Claupein, W.: Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sensing 6 (11 2014) 10335–10355

113. Pretto, A., Aravecchia, S., Burgard, W., Chebrolu, N., Dornhege, C., Falck, T., Fleckenstein, F.V., Fontenla, A., Imperoli, M., Khanna, R., Liebisch, F., Lottes, P., Milioto, A., Nardi, D., Nardi, S., Pfeifer, J., Popovic, M., Potena, C., Pradalier, C., Rothacker-Feder, E., Sa, I., Schaefer, A., Siegwart, R., Stachniss, C., Walter, A., Winterhalter, W., Wu, X.L., Nieto, J.: Building an aerial-ground robotics system for precision farming. ArXiv abs/1911.03098 (2019)

114. Primicerio, J., Di Gennaro, S., Fiorillo, E., Genesio, L., Lugato, E., Matese, A., Vaccari, F.: A flexible unmanned aerial vehicle for precision agriculture. Precision Agriculture (08 2012)

115. Press: How satellites are making agriculture more efficient. Gamaya Blog Post (2017)

116. Press: Satellites designed for benefit of farmers. Government of India, Department of Space (2016)

117. Unknown: Farmers benefit from satellite coverage. ESA Earth Online

118. Rußwurm, M., Lefèvre, S., Körner, M.: Breizhcrops: A satellite time series dataset for crop type identification. ArXiv abs/1905.11893 (2019)

119. Ghazali, M., Wikantika, K., Harto, A., Kondoh, A.: Generating soil salinity, soil moisture, soil ph from satellite imagery and its analysis. Information Processing in Agriculture (08 2019)

120. Sheffield, K., Morse-McNabb, E.: Using satellite imagery to asses trends in soil and crop productivity across landscapes. IOP Conference Series: Earth and Environmental Science 25 (07 2015) 012013

121. Kumar, N., Anouncia, S., Madhavan, P.: Application of satellite remote sensing to find soil fertilization by using soil colour. International Journal of Online Engineering 9 (05 2013)

122. Geocento: Online provider of satellite and drone imagery

123. Unknown: 7 top free satellite imagery sources in 2019. Earth Observatory System (2019)

124. Unknown: Agriculture overview. ESA Earth Online

125. Giuffrida, M.V., Scharr, H., Tsaftaris, S.A.: Arigan: Synthetic arabidopsis plants using generative adversarial network. 2017 IEEE International Conference on Computer Vision Workshops (ICCVW) (2017) 2064–2071

126. Goodfellow, I.J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A.C., Bengio, Y.: Generative adversarial networks. ArXiv abs/1406.2661 (2014)

127. Zhu, Y., Aoun, M., Krijn, M., Vanschoren, J.: Data augmentation using conditional generative adversarial networks for leaf counting in arabidopsis plants. In: BMVC. (2018)

128. Kuznichov, D., Zvirin, A., Honen, Y., Kimmel, R.: Data augmentation for leaf segmentation and counting tasks in rosette plants. In: CVPR Workshops. (2019)

129. Nazki, H., Yoon, S., Fuentes, A., Park, D.S.: Unsupervised image translation using adversarial networks for improved plant disease recognition. Comput. Electron. Agric. 168 (2019)

130. Cap, Q.H., Uga, H., Kagiwada, S., Iyatomi, H.: Leafgan: An effective data augmentation method for practical plant disease diagnosis. (2020)

131. Sun, R., Zhang, M., Yang, K.: Data enhancement for plant disease classification using generated lesions. (2020)

132. Sapoukhina, N., Samiei, S., Rasti, P., Rousseau, D.: Data augmentation from rgb to chlorophyll fluorescence imaging application to leaf segmentation of arabidopsis thaliana from top view images. In: CVPR Workshops. (2019)

133. Bellocchio, E., Ciarfuglia, T., Costante, G., Valigi, P.: Weakly supervised fruit counting for yield estimation using spatial consistency. IEEE Robotics and Automation Letters PP (03 2019) 1–1

134. Marino, S., Beauseroy, P., Smolarz, A.: Weakly-supervised learning approach for potato defects segmentation. Engineering Applications of Artificial Intelligence 85 (07 2019) 337–346

135. Desai, S.V., Chandra, A.L., Guo, W., Ninomiya, S., Balasubramanian, V.N.: An adaptive supervision framework for active learning in object detection. British Machine Vision Conference (2019)

136. Mehdipour-Ghazi, M., Yanikoglu, B.A., Aptoula, E.: Plant identification using deep neural networks via optimization of transfer learning parameters. Neurocomputing 235 (2017) 228–235

137. Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Li, F.F.: Imagenet: a large-scale hierarchical image database. (06 2009) 248–255

138. Joly, A., Goëau, H., Spampinato, C., Bonnet, P., Vellinga, W.P., Planqué, R., Rauber, A., Palazzo, S., Fisher, B., Müller, H.: Lifeclef 2015: Multimedia life species identification challenges. (09 2015)

139. Xu, W., Yu, G., Zare, A., Zurweller, B., Rowland, D., Reyes-Cabrera, J., Fritschi, F.B., Matamala, R., Juenger, T.E.: Overcoming small minirhizotron datasets using transfer learning. ArXiv abs/1903.09344 (2019)

140. Malpe, S.: Automated leaf disease detection and treatment recommendation using transfer learning. (2019)

141. Bellocchio, E., Costante, G., Cascianelli, S., Fravolini, M., Valigi, P.: Combining domain adaptation and spatial consistency for unseen fruits counting: A quasi-unsupervised approach. IEEE Robotics and Automation Letters 5 (01 2020) 1–1

142. Chandra, A.L., Desai, S.V., Balasubramanian, V.N., Ninomiya, S., Guo, W.: Active learning with point supervision for cost-effective panicle detection in cereal crops. BMC Plant Methods (2020)

143. Settles, B.: Active learning literature survey. Technical report, University of Wisconsin–Madison (2010)

144. Gal, Y., Islam, R., Ghahramani, Z.: Deep bayesian active learning with image data. In: ICML. (2017)

145. Sener, O., Savarese, S.: Active learning for convolutional neural networks: A core-set approach. In: ICLR 2018. (2018)

146. Wang, K., Zhang, D., Li, Y., Zhang, R., Lin, L.: Cost-effective active learning for deep image classification. IEEE Trans. Cir. and Sys. for Video Technol. 27(12) (December 2017) 2591–2600

147. Brust, C., Käding, C., Denzler, J.: Active learning for deep object detection. CoRR abs/1809.09875 (2018)

148. Roy, S., Unmesh, A., Namboodiri, V.P.: Deep active learning for object detection. In: BMVC. (2018)

149. Vijayanarasimhan, S., Grauman, K.: Large-scale live active learning: Training object detectors with crawled data and crowds. International Journal of Computer Vision 108(1) (May 2014) 97–114